HardVPL - Visible Penis Line

Model description

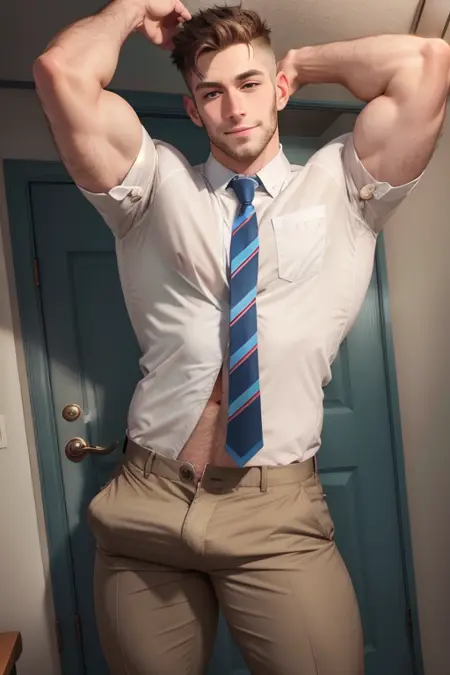

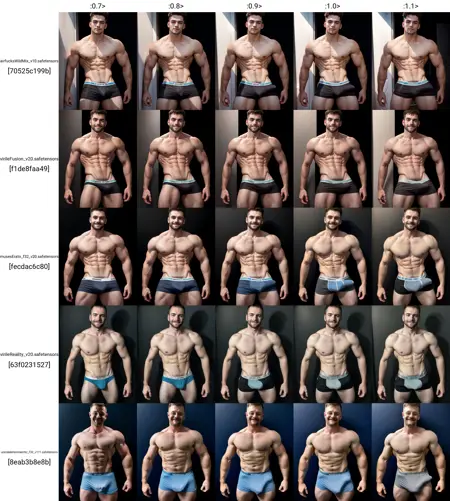

Gives dudes a big raging hard-on in their pants.

In the prompt, use the trigger word "vpl", optionally with the kind of pants or underwear the subject is wearing (underwear works best so far). Adding the word "bulge" may give better results in some cases. It seems to work better with semi-realistic models. The LoRA weight is most effective in the 0.7-1.0 range.

P.S. This is my very first LoRA, and I intend to release an improved version in the future, so stay tuned! If you've generated any good VPLs, please post or share them, as I would love to add them to the training data for v2.