REDRAW-BODY-BG-INSTANTID

Model description

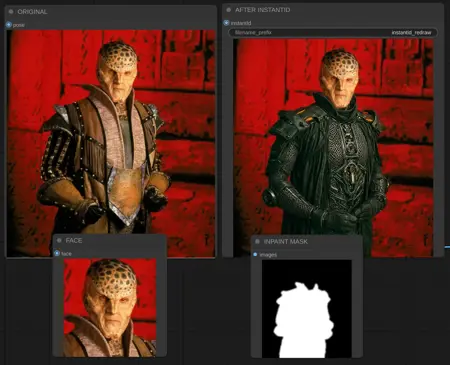

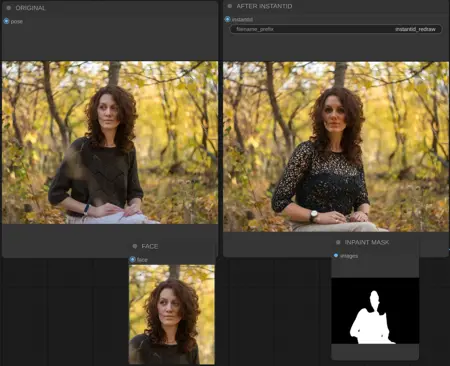

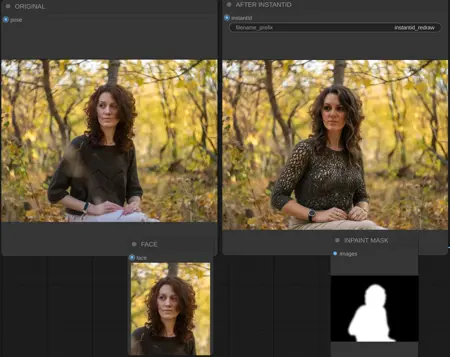

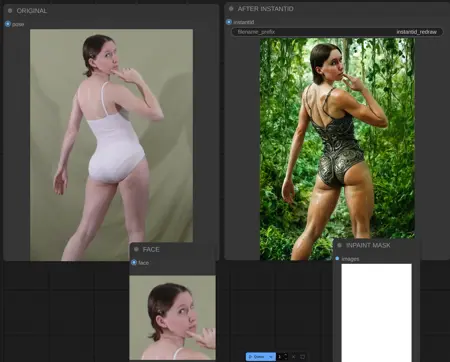

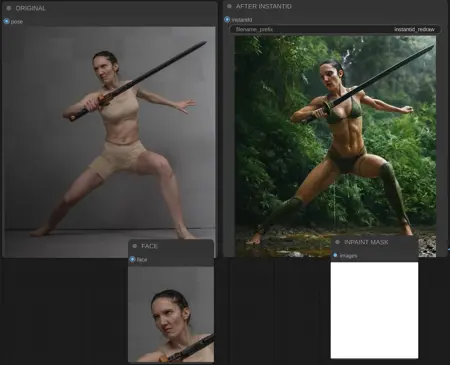

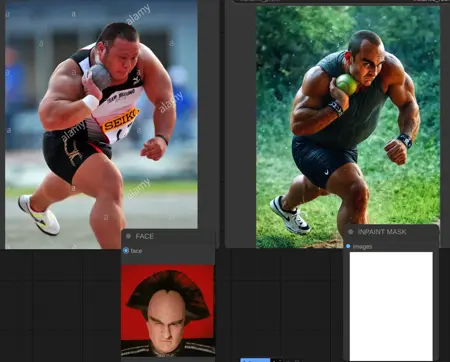

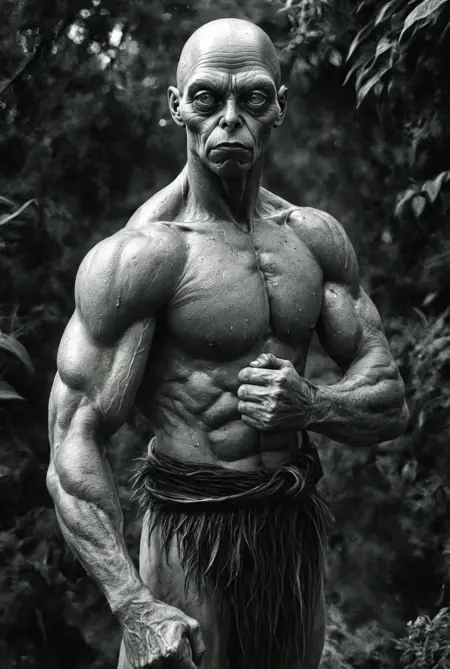

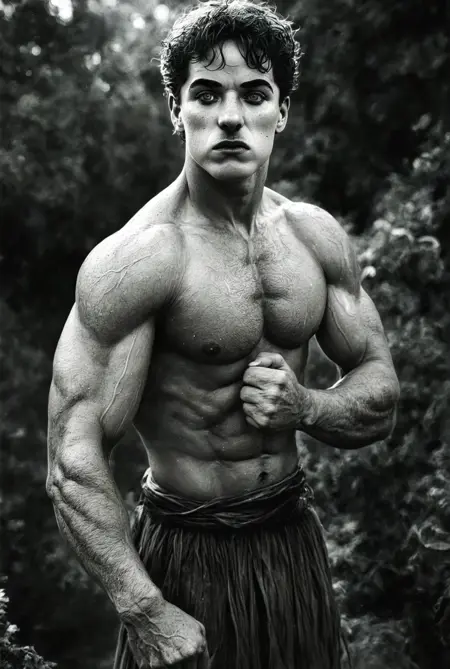

TL;DR: Redraws the body and/or background of a source image according to your prompt.

Support

Share Gold BUZZ with me, upload images, or interact in any other way that generates Blue BUZZ. This helps pay for training on CivitAI and for building training datasets. Thanks in advance.

How it works

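The workflow takes the source image, extracts the face, automatically generates an inpaint mask, and creates a face embedding. It then redraws everything inside the masked area, with the amount of change set by the denoise strength you choose.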
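As a conceptual sketch of why only the masked area changes (a simplification, not the workflow's node code), the final image can be thought of as a per-pixel blend: a mask value of 1.0 takes the newly generated pixel and 0.0 keeps the original. Images here are tiny grayscale 2D lists and `composite` is a hypothetical helper; denoise strength, which controls how far the generated pixels drift from the original, is not modeled.

```python
# Conceptual sketch only: images are 2D lists of grayscale values and the
# mask is a 2D list of floats in [0.0, 1.0] (1.0 = redraw, 0.0 = keep).


def composite(original, generated, mask):
    """Blend per pixel: mask 1.0 takes the generated pixel, 0.0 keeps the original."""
    out = []
    for orig_row, gen_row, mask_row in zip(original, generated, mask):
        out.append(
            [m * g + (1.0 - m) * o for o, g, m in zip(orig_row, gen_row, mask_row)]
        )
    return out


original = [[10, 10], [10, 10]]
generated = [[200, 200], [200, 200]]
mask = [[0.0, 1.0], [0.0, 1.0]]  # only the right column is inside the inpaint mask
print(composite(original, generated, mask))  # [[10.0, 200.0], [10.0, 200.0]]
```

Pixels outside the mask come through unchanged no matter what the sampler produces, which is why choosing what to keep is done by shaping the mask.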

You can choose which of these to keep unchanged: face, background, hair, body, clothes.

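You can adjust the face embedding strength and the denoise strength (how much of the source image is preserved; lower values keep more of it). If you remove the face from the inpaint mask entirely, the InstantID module is not applied.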
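The keep/redraw choice and the InstantID rule above can be sketched with set operations. This is a hypothetical model, not the workflow's implementation: each region is assumed to be a set of (row, column) pixel coordinates, the inpaint mask is the union of every region you did not choose to keep, and InstantID only has an effect when the mask still covers part of the face. `build_inpaint_mask` and `instantid_applies` are illustrative names.

```python
# Hypothetical sketch: each region is a set of (row, col) pixel coordinates.
REGIONS = ("face", "background", "hair", "body", "clothes")


def build_inpaint_mask(region_masks, keep):
    """Return the pixels to redraw: the union of every region not in `keep`."""
    mask = set()
    for name in REGIONS:
        if name not in keep:
            mask |= region_masks.get(name, set())
    return mask


def instantid_applies(inpaint_mask, region_masks):
    """InstantID only matters if at least one face pixel will be redrawn."""
    return bool(inpaint_mask & region_masks.get("face", set()))


# Toy 2x4 image: the top row is background, the bottom row holds the person.
region_masks = {
    "background": {(0, 0), (0, 1), (0, 2), (0, 3)},
    "hair": {(1, 0)},
    "face": {(1, 1)},
    "body": {(1, 2)},
    "clothes": {(1, 3)},
}

keep_face = build_inpaint_mask(region_masks, keep={"face"})
print(instantid_applies(keep_face, region_masks))  # False: the face is not redrawn

keep_bg = build_inpaint_mask(region_masks, keep={"background"})
print(instantid_applies(keep_bg, region_masks))  # True: the face is redrawn
```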

You can also adjust the base resolution used for all rendering operations.
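The description does not say how the base resolution is applied. Purely as a hypothetical illustration, one common approach in SDXL workflows is to rescale the source so its area is roughly base × base pixels while keeping the aspect ratio, snapping each side to a multiple of 64 (the multiple is an assumption). `render_size` is an illustrative name, not a node in this workflow.

```python
import math


def render_size(width, height, base=1024, multiple=64):
    """Scale (width, height) so the area is about base*base, keep the aspect
    ratio, and snap each side to the nearest multiple of `multiple`."""
    scale = math.sqrt((base * base) / (width * height))
    new_w = max(multiple, round(width * scale / multiple) * multiple)
    new_h = max(multiple, round(height * scale / multiple) * multiple)
    return new_w, new_h


print(render_size(512, 768))    # (832, 1280)
print(render_size(1024, 1024))  # (1024, 1024)
```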

How to use

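In most cases you only need to edit the blue "WD14 Caption" node and the green "USER INPUT" node. Load your source image. The WD14 captioner generates Pony-style tags. Paste this caption into "WD14 Caption", then delete the tags for anything you do not want in the final result. In "USER INPUT", enter a fixed prompt that adds elements not present in the source image, or styling prompts.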

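To streamline the process, edit the "exclude_tags" field of the WD14 Tagger node. Once you have added every tag you want excluded, connect the WD14 Tagger to "Join String List > arg2"; after that, you no longer need to edit the caption by hand.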
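A minimal sketch of how the final prompt could be assembled, under stated assumptions: tags are comma-separated, the exclude list is a comma-separated list of exact tags to drop, and USER INPUT comes first with the filtered caption appended second (matching the caption being wired into `arg2` of Join String List). `split_tags` and `build_prompt` are hypothetical helpers, not the tagger's or the join node's code.

```python
# Hypothetical sketch of tag filtering and prompt joining.


def split_tags(text):
    """Split a comma-separated tag string into trimmed, non-empty tags."""
    return [t.strip() for t in text.split(",") if t.strip()]


def build_prompt(user_input, wd14_caption, exclude_tags):
    """USER INPUT first, then every caption tag not in the exclude list."""
    excluded = set(split_tags(exclude_tags))
    caption = [t for t in split_tags(wd14_caption) if t not in excluded]
    return ", ".join(split_tags(user_input) + caption)


prompt = build_prompt(
    user_input="masterpiece, beach background",
    wd14_caption="1girl, solo, long hair, indoors, bedroom",
    exclude_tags="indoors, bedroom",
)
print(prompt)  # masterpiece, beach background, 1girl, solo, long hair
```

Dropping the old-scene tags matters because any tag left in the caption keeps pulling the redraw back toward the original content.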

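After the first run and your initial edit of WD14 Caption, you can leave the caption as is and change only "USER INPUT", or you can keep refining WD14 Caption. Because WD14 Caption is the secondary part of the prompt, it may be a better place for styling terms than "USER INPUT".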

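If you choose to let InstantID redraw the face, the resulting face may not be 100% identical to the original. In that case, replace the source image with the first result (right-click → Copy (Clipspace), then Paste (Clipspace) on the source image node). Then adjust the mask to keep the elements you are happy with. You can also try adjusting the denoise strength in KSampler and the weight in Apply InstantID.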

If you are redrawing the background, make sure the "fill_holes" option in "Grow Mask With Blur" is turned off.
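A likely reason for this advice (our reading, not stated in the original): when the background is redrawn, the inpaint mask is the background with a hole where the person is, and hole filling turns every enclosed unmasked region into masked pixels, so the person would be redrawn too. The sketch below implements standard hole filling on a 0/1 grid (unmasked pixels not connected to the image border become masked); it illustrates the idea and is not the Grow Mask With Blur source.

```python
from collections import deque


def fill_holes(mask):
    """Set to 1 every 0-pixel that is not 4-connected to the image border."""
    h, w = len(mask), len(mask[0])
    reachable = [[False] * w for _ in range(h)]
    queue = deque()
    # Seed the search with unmasked pixels on the border.
    for y in range(h):
        for x in range(w):
            on_border = y in (0, h - 1) or x in (0, w - 1)
            if on_border and mask[y][x] == 0:
                reachable[y][x] = True
                queue.append((y, x))
    # Breadth-first flood fill through unmasked pixels.
    while queue:
        y, x = queue.popleft()
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and not reachable[ny][nx] and mask[ny][nx] == 0:
                reachable[ny][nx] = True
                queue.append((ny, nx))
    # Anything unmasked but unreachable was a hole, so it becomes masked.
    return [[0 if reachable[y][x] else 1 for x in range(w)] for y in range(h)]


# Background mask (1) fully surrounding the person (0).
bg_mask = [
    [1, 1, 1, 1, 1],
    [1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1],
    [1, 1, 1, 1, 1],
]
filled = fill_holes(bg_mask)
print(all(v == 1 for row in filled for v in row))  # True: the person is masked as well
```

If the person touches the image edge, their pixels stay connected to the border and are not filled, so the effect depends on the framing of the shot.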

Use cases?

Changing outfits, body, or background. Turning blurry or low-quality images into high-quality ones.

Video-2-Video: stylized frame-by-frame redraws that keep the character consistent via InstantID.

Combining the body of one character with the face of another.

Installation and troubleshooting

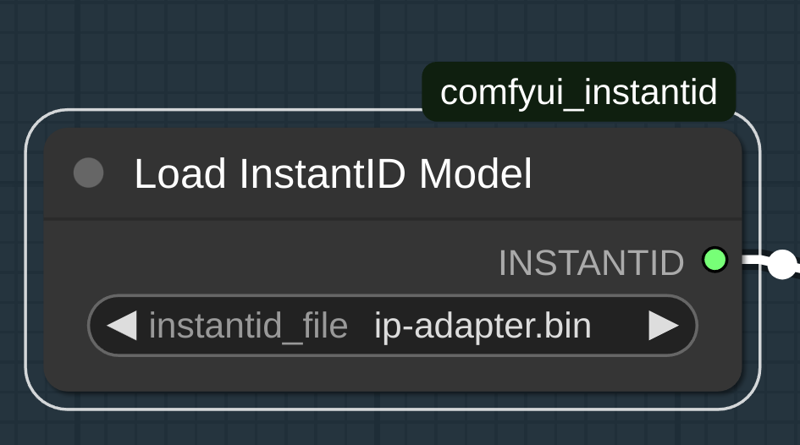

The trickiest part is installing the two InstantID packages:

https://github.com/cubiq/ComfyUI_InstantID

https://github.com/nosiu/comfyui-instantId-faceswap

You also need to install two models:

ip-adapter.bin – a special IPAdapter model for SDXL only (do not confuse it with the standard IPAdapter FaceID models).

diffusion_pytorch_model.safetensors – a special ControlNet model made specifically for InstantID's IPAdapter (do not confuse it with the ControlNet models for IPAdapter FaceID).

Both models above can be downloaded through ComfyUI Manager inside ComfyUI.

All other custom nodes install the usual way, with no special steps.