Aozora-XL Vpred


Aozora-XL: V-Prediction SDXL Model

Aozora-XL is a v-prediction model based on NoobAI v-pred, fine-tuned to improve stability and consistency. It was trained with a custom training script that supports full or partial fine-tuning on 12 GB consumer GPUs such as the RTX 3060. The training script is available on GitHub for community use: Aozora_SDXL_Training.

Never merged with other models.

No LoRAs have been merged into the model.

What's new in version 0.15

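This version builds on v0.1 to fix specific issues with the v-prediction setup. It was trained from the v0.1 base to reduce the slight washed-out, milky look seen in earlier releases and to restore vivid colors. Additional fine-tuning also targeted common v-prediction problems with scene composition and detail rendering. The model was trained for 5 epochs on about 50,000 visual-novel and anime images with deep, rich colors. Settings: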

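- Base model: Aozora V0.1

- Max training steps: 250,000

- Gradient accumulation steps: 64

- Mixed precision: bfloat16

- UNet learning rate: 8e-07

- Learning-rate scheduler: Cosine with 10% warmup

- Features: Min-SNR Gamma (modified, gamma 5.0), Zero Terminal SNR, IP Noise Gamma (0.1), Residual Shifting, conditional dropout (probability 0.1)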
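The settings above can be cross-checked with some arithmetic. This minimal sketch assumes that "max training steps" counts micro-batches (one image each at batch size 1). Under that assumption, 50,000 images × 5 epochs gives exactly the 250,000-step limit, and dividing by 64 accumulation steps gives about 3,906 optimizer updates. The sketch also assumes a common shape for "Cosine with 10% warmup": linear warmup to the peak learning rate of 8e-07, then cosine decay to zero, counted in optimizer updates. Whether the training script counts warmup in micro-steps or updates, and where it decays to, is not stated. The function name is hypothetical.

```python
import math

# Values from the v0.15 settings list
DATASET_IMAGES = 50_000
EPOCHS = 5
MAX_TRAIN_STEPS = 250_000  # assumption: counted per micro-batch (batch size 1)
GRAD_ACCUM_STEPS = 64
PEAK_LR = 8e-7
WARMUP_FRACTION = 0.10


def cosine_with_warmup(update: int, total_updates: int,
                       peak_lr: float = PEAK_LR,
                       warmup_fraction: float = WARMUP_FRACTION) -> float:
    """Hypothetical LR schedule: linear warmup, then cosine decay to 0."""
    warmup_updates = int(total_updates * warmup_fraction)
    if update < warmup_updates:
        # Ramp linearly up to peak_lr over the warmup updates
        return peak_lr * (update + 1) / warmup_updates
    # Fraction of the post-warmup phase completed, clamped to [0, 1]
    progress = (update - warmup_updates) / max(1, total_updates - warmup_updates)
    return peak_lr * 0.5 * (1.0 + math.cos(math.pi * min(1.0, progress)))


images_seen = DATASET_IMAGES * EPOCHS                    # 250,000 images processed
optimizer_updates = MAX_TRAIN_STEPS // GRAD_ACCUM_STEPS  # 3,906 weight updates

if __name__ == "__main__":
    print(f"images seen: {images_seen:,}")
    print(f"optimizer updates: {optimizer_updates:,}")
    for u in (0, 389, 390, 2000, optimizer_updates - 1):
        print(u, cosine_with_warmup(u, optimizer_updates))
```

With these numbers, warmup covers the first 390 updates. The learning rate peaks at update 390 and falls toward zero by update 3,906.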

These changes improve color reproduction and output reliability across a wide range of prompts.

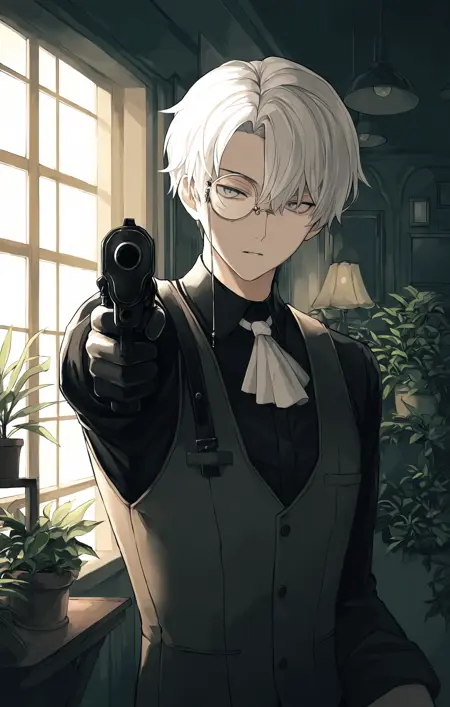

- Note: All preview images were generated without detailers or enhancers, to show the model's base capabilities.

Version 0.1 overview

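The first release (v0.1 alpha) is a proof-of-concept model trained for 10 epochs on about 18,500 images: 50% ZZZ characters (up to version 2.0) and 50% highly rated Danbooru images. It keeps the characteristics of the base model (NoobAI-XL/NAI-XL V-Pred 1.0) while showing the stability gains from the training method.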

Project goals

- Provide a GUI-based training script that makes SDXL fine-tuning possible on consumer hardware.

- Develop Aozora-XL into a stable, controllable model through continued training on diverse datasets.

Training method

The method improves efficiency by training about 92% of the UNet. To keep v-prediction training stable, it uses adaptive Min-SNR gamma weighting and a custom learning-rate schedule.
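This minimal sketch shows how Min-SNR gamma weighting is usually applied to a v-prediction loss, together with a Zero Terminal SNR rescale of the noise schedule. It makes the following assumptions:

- The schedule is SDXL's usual scaled-linear one: 1,000 steps, with beta from 0.00085 to 0.012.
- The weight uses the standard formula min(SNR, γ) / (SNR + 1), with γ = 5.0 from the v0.15 settings.

The adaptive, modified variant used by the actual training script is not described, so this is not that implementation. All function names are hypothetical.

```python
import math


def scaled_linear_betas(n: int = 1000, beta_start: float = 0.00085,
                        beta_end: float = 0.012) -> list[float]:
    """Betas spaced linearly in sqrt-space (assumed SD/SDXL scaled-linear schedule)."""
    s0, s1 = math.sqrt(beta_start), math.sqrt(beta_end)
    return [(s0 + (s1 - s0) * i / (n - 1)) ** 2 for i in range(n)]


def alphas_cumprod(betas: list[float]) -> list[float]:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    out, prod = [], 1.0
    for b in betas:
        prod *= 1.0 - b
        out.append(prod)
    return out


def rescale_zero_terminal_snr(abar: list[float]) -> list[float]:
    """Shift and scale sqrt(alpha_bar) so the last timestep has SNR exactly 0."""
    sqrt_abar = [math.sqrt(a) for a in abar]
    first, last = sqrt_abar[0], sqrt_abar[-1]
    # Keep the first value, move the last one to 0, scale linearly in between
    rescaled = [(s - last) * first / (first - last) for s in sqrt_abar]
    return [s * s for s in rescaled]


def snr(abar_t: float) -> float:
    """Signal-to-noise ratio: alpha_bar_t / (1 - alpha_bar_t)."""
    return abar_t / (1.0 - abar_t)


def min_snr_weight_vpred(snr_t: float, gamma: float = 5.0) -> float:
    """Standard Min-SNR-gamma loss weight for a v-prediction target."""
    return min(snr_t, gamma) / (snr_t + 1.0)


if __name__ == "__main__":
    abar = rescale_zero_terminal_snr(alphas_cumprod(scaled_linear_betas()))
    for t in (0, 250, 500, 750, 999):
        s = snr(abar[t])
        print(t, round(s, 4), round(min_snr_weight_vpred(s), 4))
```

Under Zero Terminal SNR, the final timestep has SNR 0, so the standard formula gives it a weight of exactly 0. The card does not say how its modified Min-SNR handles this.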

Training specifications:

- Hardware: 1× NVIDIA RTX 3060 (VRAM usage: about 11.8 GB)

- Optimizer: Adafactor

- Batch size: 1 (gradient accumulation steps: 64)

- Trainable UNet parameters: 2.3 billion
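A batch size of 1 with 64 gradient accumulation steps gives an effective batch of 64 images per weight update. This toy sketch uses a one-parameter linear model, not the UNet, to show why the two approaches match. Each micro-batch's gradient is scaled by 1/64 and summed, and the result equals the average gradient over the full batch of 64. The plain SGD step is only for illustration, since the card's optimizer is Adafactor. The data and function names are hypothetical.

```python
import random

BATCH_SIZE = 1
GRAD_ACCUM_STEPS = 64
EFFECTIVE_BATCH = BATCH_SIZE * GRAD_ACCUM_STEPS  # 64 samples per optimizer update


def grad_mse(w: float, x: float, y: float) -> float:
    """d/dw of (w*x - y)^2 for a toy one-parameter model."""
    return 2.0 * (w * x - y) * x


def accumulated_gradient(w: float, samples) -> float:
    """Sum per-micro-batch gradients, each scaled by 1/GRAD_ACCUM_STEPS."""
    grad = 0.0
    for x, y in samples:  # one sample per micro-batch (batch size 1)
        grad += grad_mse(w, x, y) / GRAD_ACCUM_STEPS
    return grad


def full_batch_gradient(w: float, samples) -> float:
    """Mean gradient over all samples at once, for comparison."""
    return sum(grad_mse(w, x, y) for x, y in samples) / len(samples)


if __name__ == "__main__":
    rng = random.Random(0)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(EFFECTIVE_BATCH)]
    data = [(x, 3.0 * x + rng.gauss(0.0, 0.1)) for x in xs]
    w, lr = 0.0, 0.1
    g_acc = accumulated_gradient(w, data)
    print(g_acc, full_batch_gradient(w, data))  # equal up to float rounding
    w -= lr * g_acc  # a single (SGD, illustrative) update after 64 micro-batches
```

Accumulation trades speed for memory. Only one image's activations are held at a time, which is how training fits in the 12 GB of an RTX 3060.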

Recommended settings

- Positive prompt: very awa, masterpiece, best quality

- Negative prompt: optional; try (worst quality, low quality) if needed

- Sampler: DPM++ 3M SDE GPU or Euler (Euler for line art, SDE for details such as hands and feet)

- Scheduler: SGM Uniform or Normal

- Steps: 25–35

- CFG scale: 3–5 (stays stable even at low values)

- Resolution: 1024x1024 or similar (up to 1152x1152)

- Hires. fix: use an upscaler such as RealESRGAN with a denoising strength of about 0.35
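This hypothetical helper works out what a Hires. fix pass with these settings implies. The 1.5× upscale factor is an example value that the card does not specify. The helper snaps the upscaled size down to a multiple of 8, because the SDXL VAE works at 1/8 resolution. It also assumes the common img2img behavior where only about `steps × denoise` steps of the schedule actually run. Some UIs have an option to run the full step count instead.

```python
def hires_fix_plan(width: int, height: int, scale: float, steps: int,
                   denoise: float = 0.35, multiple: int = 8) -> tuple[int, int, int]:
    """Return (upscaled width, upscaled height, approximate second-pass steps)."""
    # Snap the upscaled size down to a multiple of `multiple` pixels
    target_w = int(width * scale) // multiple * multiple
    target_h = int(height * scale) // multiple * multiple
    # Assumed img2img behavior: only about steps * denoise steps are executed
    second_pass_steps = int(steps * denoise)
    return target_w, target_h, second_pass_steps


if __name__ == "__main__":
    # 1024x1024 base image, 30 steps, upscaled 1.5x with denoise 0.35
    print(hires_fix_plan(1024, 1024, 1.5, 30))  # (1536, 1536, 10)
```

At a denoising strength of about 0.35, the second pass mostly refines detail rather than recomposing the image. This is why it stays close to the base render.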

V-prediction models can behave differently from one system to another, so experiment with these settings.

License

This model is subject to the license of its base model, NoobAI-XL. Please review and comply with the applicable terms.