LoSci Anime 🈂️ by Huggy

Details

Download Files

About this version

Model description

💡 Search "huggy" to find all my models on site.

💡 Models are updated frequently, so be sure to check back and use the latest version.

📒Since painterly works so well in this mixed-style lora, I’m lining up a dedicated painterly version next.

📒 Instructions for Version 2.1 (This's my best trained lora out of 83 models):

Blending three styles into one lora gives smoother results than stacking three separate loras, but it’s tough to keep things balanced.

lofi_anime (easy, overfits): drop lora weight to ~0.7 with the trigger word.

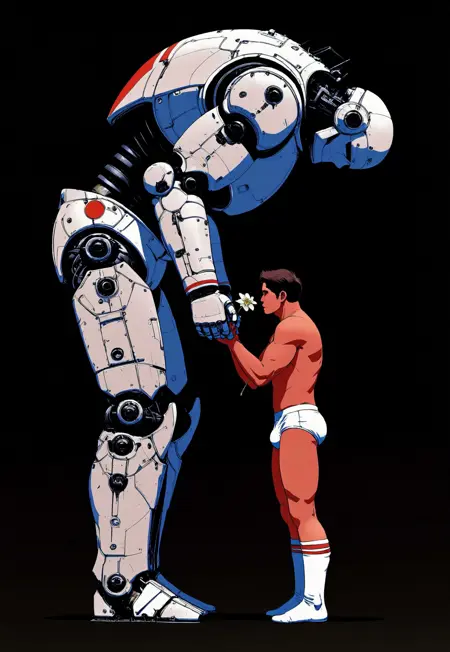

scifi_anime (complex scenes, underfits): push weight above 0.9.

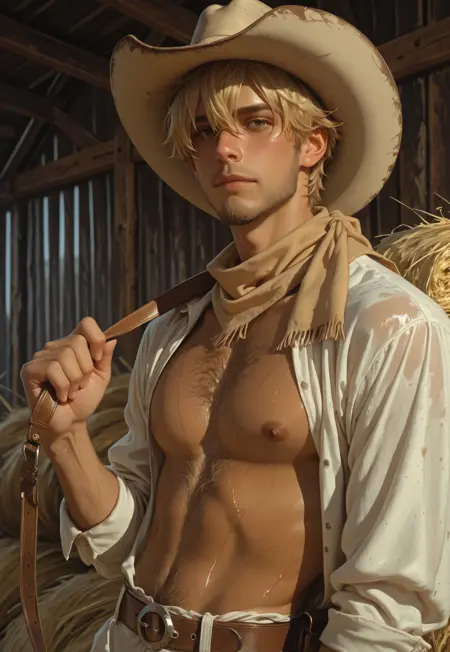

painterly (semi-realistic with character detail): use weight above 0.8.

📒 Training Notes for Version 2.1 (cost 3,633 buzz and took 8 hours for training)

1. Overfitting (V1) vs. Underfitting (V2):

V1 overfit because it saw a small dataset (54 images) too many times (26 repeats), causing it to memorize images instead of learning the style. This made it "opinionated" and required lower weights. V2 underfit because it saw a large, diverse dataset (215 images) too few times (5 repeats) with a very low networkAlpha, which was insufficient exposure and strength to learn complex details.

2. Balanced Learning Strength (networkAlpha):

To fix V2's weakness, networkAlpha will be increased from 4 to 12. This is a strategic compromise: it's strong enough to capture the difficult scifi_anime details (unlike V2's alpha of 4), but less aggressive than V1's alpha of 16. The goal is a powerful lora that remains flexible and is more compatible with other models near a weight of 1.0.

3. Disabling Flip Augmentation:

flipAugmentation is being turned off. V2 training proved it corrupts asymmetrical concepts (pianos, text, unique vehicles) by teaching the model incorrect "mirrored" versions, leading to flawed generations.

4. V2's Superior Generalization:

V2's use of three distinct trigger words (lofi_anime, scifi_anime, painterly) was a major success. The triggers acted as labels, allowing the model to cleanly separate and learn three styles. V1, with no triggers, merged everything into a single, less flexible aesthetic.

5. Strategy: High Repeats x Low Epochs:

To combat underfitting within Civitai's 10,000 step limit, V2.1 will use higher repeats (10) and fewer epochs (9). This forces the model to "cram" the details of each image within a single epoch, which is critical for learning three different styles at once.

6. Captioning Overhaul:

All captions have been rewritten with greater detail for V2.1. The simple, repetitive captions used in V2 on a large dataset caused "concept bleed" and made specific image details difficult to recall. Unique and descriptive captions create stronger, more precise text-to-image associations, enabling better control during generation.

📒 Version 2.1 — Caption Typos:

"light background. one knee up"

winy -> windy

indow -> window

+ stars

+ blue sky

📒 Version 2 — LoSci Anime

Trained with 4× the resource quantity compared to the initial lofi-anime version.

Training pushed to the upper limit — nearly 10,000 steps (cost 3,600 buzz on Civitai).

This version can now generate three distinct anime styles using three unique trigger words. They can be used individually or combined for hybrid results.

lofi_anime

scifi_anime

painterly

📒 Version 1 :

- Bring mixed vintage and sci-fi vibes to your works.

⚠️ Version 1 requests at least one of following trigger words to active this lora:

futuristic

indoors

cluttered room

cityscape

city lights

bay

electronic devices

window

potted plant

sunset

hillside

rooftop edge

📒 Cost Breakdown for V1 Lora:

2,300 Buzz – Training

1,500 Buzz – Testing

Total: 3,800 Buzz

📒 To be fixed in V2 update:

fix caption typos: blacck, gfuturistic, sunset / full moon / palm trees potted plants

fix sketchy background

🎉 This lora is part of my goal to create 100 lora.

📒 Mirroring

I’ve noticed a few sites, like Modelslab and yodayo, using bots to mirror my models. SeaArt in particular shows big numbers for downloads and generations.

I don’t mind if you mirror my models — sites like civitaiarchive have always done that.

I do mind if you block users from downloading the models and force them onto a paid online service.

I do mind if you claim copyright or give users ownership of the generations. These are open-source models, trained on open-source bases with limited-ownership data. No one — not even me — should claim copyright. Credit is all that’s needed.