Z-Image Turbo - Quantized for low VRAM

Details

Download Files

About this version

Model description

Z-Image Turbo is a distilled version of Z-Image, a 6B image model based on the Lumina architecture, developed by the Tongyi Lab team at Alibaba Group. Source: https://huggingface.co/Tongyi-MAI/Z-Image-Turbo

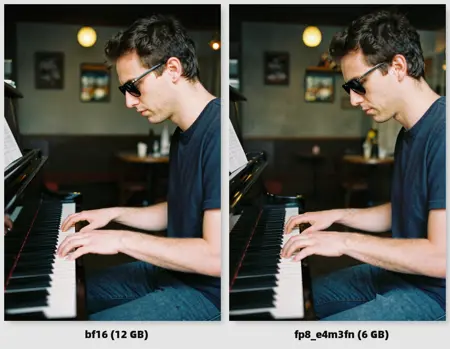

I've uploaded quantized versions from bf16 to fp8, meaning the weights had their precision - and consequently their size - halved for a substantial performance boost while keeping most of the quality. Inference time should be similar to regular "undistilled" SDXL, with better prompt adherence and resolution/details. Ideal for weak(er) PCs.

Features

Lightweight: the Turbo version was trained at low steps (5-15), and the fp8 quantization is roughly 6 GB in size, making it accessible even to low-end GPUs.

Uncensored: many concepts censored by other models (

Flux ) are doable out of the box. Good prompt adherence: comparable to Flux.1 Dev's, thanks to its powerful text encoder Qwen 3 4B.

Text rendering: comparable to Flux.1 Dev's, some say it's even better despite being 2x smaller (probably not as good as Qwen Image's though).

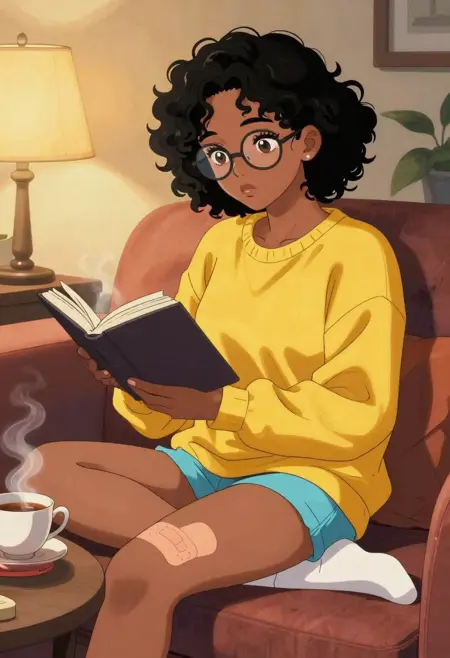

Style flexibility: capable of generating photorealistic images, as well as anime, painting, pixel art, low poly, comics, illustration, pop art, etc.

High resolution: capable of generating up to 2MP resolution natively (before upscaling!).

Dependencies

Download Qwen 3 4B to your

text_encodersdirectory: https://huggingface.co/Comfy-Org/z_image_turbo/blob/main/split_files/text_encoders/qwen_3_4b.safetensorsDownload Flux VAE to your

vaedirectory: https://huggingface.co/Comfy-Org/z_image_turbo/blob/main/split_files/vae/ae.safetensors

Instructions

Workflow and metadata are available in the showcase images.

Steps: 5 - 15.

CFG: 1.0. This will ignore negative prompts, so no need for them.

Sampler/scheduler: depends on the art style. Here are my findings so far:

Photorealistic:

Favourite combination for the base image:

euler+beta,simpleorbong_tangent(from RES4LYF) - fast and good even at low (5) steps.Most multistep samplers (e.g.:

res_2s,res_2m,dpmpp_2m_sdeetc) are great, but some will be 40% slower at same steps. They might require a scheduler likesgm_uniform.Almost any sampler will work fine -

sa_solver,seeds_2,er_sde,gradient_estimation.What you probably want to avoid (at least in the base image) due to bad results or poor performance:

dpm_adaptivesamplerkarrasscheduler

Some samplers and schedulers add too much texture, you can adjust it by increasing the shift (e.g.: set shift 7 in ComfyUI's

ModelSamplingAuraFlownode).

Illustrations (e.g.: anime):

res_2morrk_betaproduce sharper and more colourful results.

Others:

- I'm still testing. Use

euler+simplejust to be safe for now.

- I'm still testing. Use

Resolution: up to 2MP native. When in doubt, use same as SDXL, Flux.1, Qwen Image, etc (it works even as low as 512px like SD 1.5 times). Some examples:

896x1152

1024x1024

1216x832

1440x1440

1024x1536

Upscale and/or detailers are recommended to fix smaller details like eyes, teeth, hair. See my workflow embedded in the main cover image.

Instead of using a sampler with low step like we do with other models, Z-Image works best when you use something like

KSampler (Advanced)(in ComfyUI), or any node that allows you to set a starting step.set shift to 7 while using something like

euler+simple(some samplers/schedulers might have their own shift, which won't help), this will prevent exaggerated textures and noise.

Prompting: officially they say long and detailed prompts in natural language works best, but I tested with comma-separated keywords/tags, JSON, whatever... either should work fine. Keep it in English or Mandarin for more accurate results.

FAQ

Is the model uncensored?

- Yes, it might just not be well trained on the specific concept you're after. Try it yourself.

Why do I get too much texture after upscaling?

- See instructions about upscaling above.

Does it run on my PC?

If you can run SDXL, chances are you can run Z-Image Turbo fp8. If not, might be a good time to purchase more RAM or VRAM.

All my images were generated on a laptop with 32GB RAM, RTX3080 Mobile 8GB VRAM.

I'm getting an error on ComfyUI, how to fix it?

- Make sure your ComfyUI has been updated to the latest version. Otherwise, feel free to post a comment with the error message so the community can help.

Is the license permissive?

- It's Apache 2.0, so quite permissive.