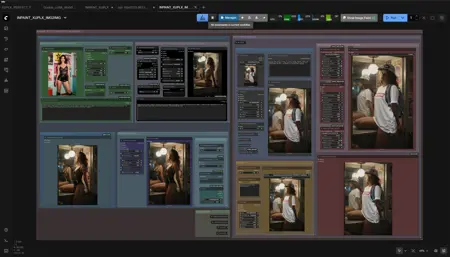

XUPLX_INPAINT_IMG2IMG-REFINEMENT_UPSCALE

Model description

README: XUPLX ZIMAGE MERGE + INPAINT + IMG2IMG + UPSCALE WORKFLOW

1) CORE MODEL STACK + TARGET RESOLUTION:

Base UNet checkpoint: z_image_turbo_bf16.safetensors

CLIP: qwen_3_4b.safetensors, loaded with the type set to lumina2

VAE: ae.safetensors

The main latent canvas is 1024×1536 (set in the SD3 latent node), so the workflow generates portrait-format images at that size by default.
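For reference, the canvas size maps onto a latent tensor shape roughly as sketched below. This is a minimal sketch, assuming an 8× spatial downscale and 16 latent channels, neither of which is stated in this description. `latent_shape` is a hypothetical helper for illustration, not a node in the workflow.

```python
def latent_shape(width, height, batch_size=1, channels=16, downscale=8):
    """Return (batch, channels, latent_height, latent_width) for a pixel canvas.

    channels=16 and downscale=8 are assumptions, not values taken from the workflow.
    """
    if width % downscale or height % downscale:
        raise ValueError("width and height should be multiples of the downscale factor")
    return (batch_size, channels, height // downscale, width // downscale)


# Default portrait canvas: 1024 wide, 1536 tall.
shape = latent_shape(1024, 1536)
print(shape)  # (1, 16, 192, 128)
```

If you change the canvas, keeping both sides divisible by the downscale factor avoids rounding at the latent stage.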

2) THE "TWO PROMPTS, ONE SEED" STRATEGY:

The workflow applies the MOOD prompt in the early steps and the NATURAL LANGUAGE prompt in the later steps, and you can tune the “blend” between them.

Mechanically, that’s implemented as:

A single fixed seed shared across the samplers (seed = 01234567890).

A single global STEPS integer (set to 20) feeding multiple samplers.

A shared END_AT/START_AT integer (set to 5), so stage 1 runs steps 0→5 and stage 2 runs steps 5→20.

The “blend knob” is ModelSamplingAuraFlow with its shift value set to 5 in the branch that continues the latent (the README refers to this as “somewhere-in-the-middle” tuning).

MOOD and NATURAL LANGUAGE prompts are centralized as Mixlab TextInput_ nodes (“MOOD” and “NATURAL LANGUAGE”), which drive the CLIPTextEncode nodes via links.
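The step split described above can be sketched as plain arithmetic. This is only an illustration of how the shared integers divide the schedule, not the workflow's actual node graph. `stage_plan` and the dictionary keys are hypothetical names, and the seed is kept as a string so it appears exactly as listed.

```python
STEPS = 20            # global STEPS integer
HANDOFF = 5           # shared END_AT/START_AT integer
SEED = "01234567890"  # kept as a string, exactly as listed above


def stage_plan(total_steps, handoff, seed):
    """Split one schedule into a MOOD stage and a NATURAL LANGUAGE stage.

    Both stages share the same seed and the same total step count;
    only the start/end step of each stage differs.
    """
    if not 0 < handoff < total_steps:
        raise ValueError("handoff must fall strictly between 0 and total_steps")
    return [
        {"prompt": "MOOD", "seed": seed, "start": 0, "end": handoff},
        {"prompt": "NATURAL LANGUAGE", "seed": seed, "start": handoff, "end": total_steps},
    ]


plan = stage_plan(STEPS, HANDOFF, SEED)
for stage in plan:
    count = stage["end"] - stage["start"]
    print(f'{stage["prompt"]}: steps {stage["start"]} to {stage["end"]} ({count} steps)')
```

With the defaults, the MOOD stage covers 5 steps and the NATURAL LANGUAGE stage covers the remaining 15. Raising the handoff value gives the MOOD prompt more of the schedule.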

3) THE LoRA'S ROLE (AND WHERE IT DOES/DOESN'T APPLY):

The workflow loads an optional LoRA (XUPLX_UglyPeopleLoRA.safetensors); strength is set to 0.4 / 0.4 (model / CLIP), and the switch is On by default.

The LoRA-modified model is used in the “MOOD” portion of the workflow, while other parts (notably the inpaint branch) use the base model path. The XUPLX LoRA was trained on real photographs.

Download XUPLX_UglyPeopleLoRA here:

/model/2220894/xuplxuglypeoplelora

4) INPAINTING STAGE (MASK FROM CLIPSPACE):

After generation, you do a classic inpaint pass:

Load your merged image (simply copy and paste it into the "Load Image" node).

Inpaint prompt example in the workflow: “The woman is wearing a black colored backpack.”

5) IMAGE TO IMAGE STAGE:

The img2img pass is meant to blend the lighting, ambiance, and subject of the merged image with the inpainted material. Denoise is set to 0.45 by default, but the best value varies from one image to another.
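To get a feel for what a denoise value of 0.45 means, here is a simplified sketch of one way a partial-denoise pass can be mapped onto a noise schedule. It assumes the sampler builds a longer schedule of `int(steps / denoise)` steps and keeps only the tail. The linear schedule is a stand-in for the real scheduler, the step count of 20 is assumed to carry over from the global STEPS value, and `truncated_schedule` is a hypothetical helper.

```python
def truncated_schedule(steps, denoise):
    """Return the noise levels a partial-denoise pass would walk through.

    Assumption: a longer schedule of int(steps / denoise) steps is built and
    only its last `steps` intervals are kept. The linear 1.0 -> 0.0 schedule
    is a placeholder, not the workflow's actual scheduler.
    """
    if not 0.0 < denoise <= 1.0:
        raise ValueError("denoise must be in the range (0, 1]")
    full_steps = int(steps / denoise)
    full = [1.0 - i / full_steps for i in range(full_steps + 1)]
    return full[-(steps + 1):]


sigmas = truncated_schedule(steps=20, denoise=0.45)
print(len(sigmas) - 1)      # 20 sampling steps are still run
print(round(sigmas[0], 3))  # 0.455, the starting noise level
```

Under these assumptions, a lower denoise starts the pass from a lower noise level, so more of the input image survives. A higher value gives the sampler more freedom to repaint.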

6) FINALLY, RUN A SEEDVR2 UPSCALER ON THE IMG2IMG OUTPUT:

It is configured to output 2048×3072 and to use the "lab" color mode by default.

The VAE for SeedVR2 is set to ema_vae_fp16.safetensors. Using GGUF models for upscaling is strongly recommended. You may see an out-of-memory (OOM) error if the VRAM purge fails; if so, simply run the upscale again.
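As a quick sanity check on the default output size, the sketch below compares it with the 1024×1536 canvas from section 1. It assumes the img2img output keeps the canvas size, which this description does not state explicitly. `scale_factors` is a hypothetical helper.

```python
from fractions import Fraction

BASE = (1024, 1536)      # main canvas (width, height); assumed unchanged by img2img
UPSCALED = (2048, 3072)  # default SeedVR2 output (width, height)


def scale_factors(src, dst):
    """Return the exact horizontal and vertical scale factors from src to dst."""
    return Fraction(dst[0], src[0]), Fraction(dst[1], src[1])


sx, sy = scale_factors(BASE, UPSCALED)
print(sx, sy)                                  # 2 2
print(Fraction(*BASE) == Fraction(*UPSCALED))  # True, aspect ratio is preserved
```

If you change the canvas size, choosing an upscale target with the same aspect ratio avoids stretching or cropping.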

7) TOOLING / CUSTOM NODE PACKS YOU DEPEND ON:

This workflow expects several custom node packs/plugins to be installed, including:

Mixlab nodes (TextInput_)

Comfyroll (CR Load LoRA)

RvTools v2 (Integer nodes for STEPS and END_AT/START_AT)

rgthree (Image Comparer)

WAS Node Suite (Seed node)

SeedVR2 upscaler pack

The workflow comes with a README, or you can check out the article I wrote:

How to Merge Image Styles Quickly with XUPLX and ZImage Turbo

https://civitai.com/articles/23657/how-to-merge-image-styles-quickly-with-xuplx-and-zimage-turbo

You can download a simplified version of my workflow here:

/model/2221102/xuplx-merge-workflow

IF YOU LOVED THIS... DON'T BUY ME A COFFEE!!

Download "ExportGPT - Media & Chat Export Toolkit" in the Chrome Web Store. If you live in ChatGPT and Sora, it's an indispensable tool to move your assets around quickly without external servers. IT HAS A FIVE STAR RATING AND IT'S FREE FOREVER!

See it in action: exportgpt.org