ComfyUI Local LLM Qwen Prompt Refiner (Offline LLM Node)

Local Qwen LLM loader & thinking prompt refiner for ComfyUI

Load any Qwen model (such as Qwen3-4B-Thinking-2507) completely offline from local safetensors files. It is well suited to refining Stable Diffusion prompts and gives you full control: customizable instructions, visible chain-of-thought output, a fixed seed for consistent results, and optional full memory unloading to free VRAM after use.

No API, no rate limits, and, with memory unloading enabled, far fewer out-of-memory (OOM) headaches when running alongside heavy generation workflows.
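To make the moving parts concrete, here is a minimal sketch of what an offline refine step could look like with Hugging Face `transformers`. It is not the node's actual code: the function names `refine_prompt` and `split_thinking`, their parameters, and the default token budget are all hypothetical, and it assumes `torch`, `transformers`, and `accelerate` are installed and that the model writes its reasoning before a closing `</think>` tag.

```python
import gc


def split_thinking(text: str, close_tag: str = "</think>") -> tuple[str, str]:
    """Split raw model output into (reasoning, final_prompt).

    Assumption: the reasoning ends with `close_tag`. If the tag is absent,
    the whole text is treated as the final prompt.
    """
    head, sep, tail = text.rpartition(close_tag)
    if not sep:
        return "", text.strip()
    reasoning = head.replace("<think>", "").strip()
    return reasoning, tail.strip()


def refine_prompt(model_dir, prompt, instructions, seed=0,
                  max_new_tokens=1024, unload=True):
    """Hypothetical refine step: load locally, generate, optionally unload."""
    # Heavy imports are deferred so split_thinking works without them.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # A fixed seed makes sampling repeatable on the same hardware and versions.
    torch.manual_seed(seed)

    # local_files_only=True prevents any attempt to reach the Hugging Face Hub.
    tokenizer = AutoTokenizer.from_pretrained(model_dir, local_files_only=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_dir,
        local_files_only=True,
        torch_dtype="auto",
        device_map="auto",  # requires the accelerate package
    )

    messages = [
        {"role": "system", "content": instructions},
        {"role": "user", "content": prompt},
    ]
    text = tokenizer.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)

    with torch.no_grad():
        output_ids = model.generate(
            **inputs, max_new_tokens=max_new_tokens, do_sample=True
        )

    # Keep only the newly generated tokens, not the echoed input.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    raw = tokenizer.decode(new_tokens, skip_special_tokens=True)

    if unload:
        # Drop every reference to GPU tensors, then release cached VRAM.
        del model, inputs, output_ids, new_tokens
        gc.collect()
        if torch.cuda.is_available():
            torch.cuda.empty_cache()

    return split_thinking(raw)
```

Returning the reasoning and the refined prompt separately is one way to keep the chain-of-thought visible without feeding it into the image prompt. Unloading trades reload time on the next run for free VRAM during image generation.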

Just drop the model files in models/qwen/model-main-folder-goes-here/repo-files-goes-here, restart ComfyUI, and start crafting better prompts locally.
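If the node does not find your model after a restart, a quick folder check can help. The sketch below is a hypothetical helper, not part of the custom node: it assumes each model folder under `models/qwen/` holds a Hugging Face style repo with a `config.json` and at least one `.safetensors` weight file. The exact set of files the node requires is an assumption here.

```python
from pathlib import Path


def find_qwen_models(models_root) -> dict[str, list[str]]:
    """Map each folder under <models_root>/qwen to the items it is missing.

    An empty list means the folder looks complete under the assumptions above.
    """
    report: dict[str, list[str]] = {}
    qwen_dir = Path(models_root) / "qwen"
    if not qwen_dir.is_dir():
        return report

    for folder in sorted(p for p in qwen_dir.iterdir() if p.is_dir()):
        missing = []
        # Assumed requirement: the model configuration file.
        if not (folder / "config.json").is_file():
            missing.append("config.json")
        # Assumed requirement: at least one safetensors weight shard.
        if not any(folder.glob("*.safetensors")):
            missing.append("*.safetensors")
        report[folder.name] = missing
    return report


if __name__ == "__main__":
    # Assumes the script is run from the ComfyUI root directory.
    for name, missing in find_qwen_models("models").items():
        status = "ok" if not missing else "missing: " + ", ".join(missing)
        print(f"{name}: {status}")
```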

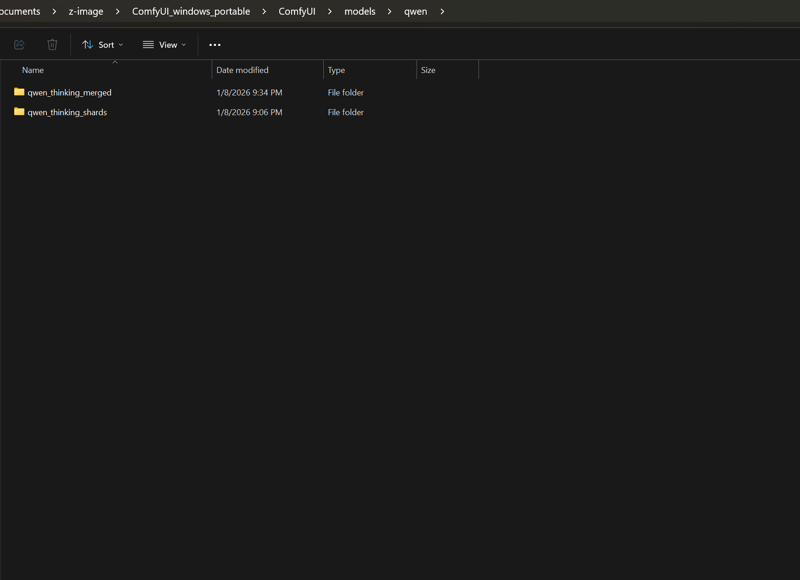

Where to place the model:

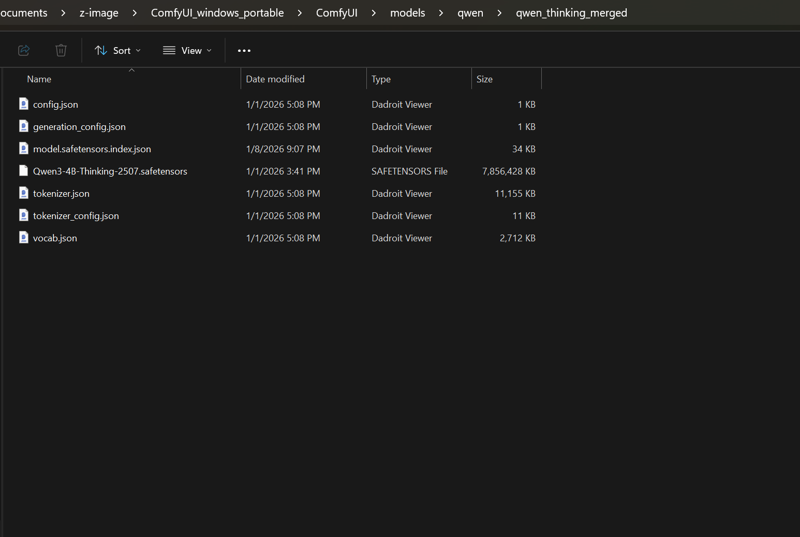

What goes inside the subfolders:

Custom-node repo is here