Not so simple (or is it?) Illustrious Workflow

DISCLAIMER: Please be aware my workflows are not a one-size-fits-all solution for all ComfyUI users. They were designed for my personal use (for me by me), but I was asked to share them.

Before you download: This workflow is not meant for fast generation. If you want speed, then this workflow is not what you want. If you're looking for a basic workflow, the ComfyUI devs provided a bunch here. There are also other (possibly better) workflows here on civitai.

v13a-Epsilon and newer require your ComfyUI to be on version v0.3.64 or newer. Upgrading your comfy install comes with its own risks, and I am not responsible if your comfy install breaks. Do so at your own peril.
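
As a rough sketch of what "v0.3.64 or newer" means when comparing version strings, the snippet below compares versions numerically rather than as text. The function names are hypothetical and not part of ComfyUI; the only assumption is a version string shaped like v0.3.64.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn 'v0.3.64' or '0.3.64' into (0, 3, 64). Hypothetical helper."""
    return tuple(int(part) for part in text.strip().lstrip("vV").split("."))


def meets_minimum(installed: str, minimum: str = "v0.3.64") -> bool:
    """Return True if the installed version is at least the minimum."""
    # Tuples compare element by element, so 0.3.9 is correctly older than 0.3.64.
    return parse_version(installed) >= parse_version(minimum)


print(meets_minimum("v0.3.64"))  # True
print(meets_minimum("v0.3.9"))   # False
```

Comparing the raw strings instead would wrongly treat 0.3.9 as newer than 0.3.64, which is why the parts are converted to integers first.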

I want to reiterate: the detailer nodes will throw an error if you do not also update Impact Pack after updating ComfyUI to v0.3.64.

Updating comfyui, installing custom nodes, etc., can break your comfy install. Do these at your own risk. I am not responsible for your install breaking.

If you have RES4LYF installed, almost all of my workflows will not work for you. (v14 does work with RES4LYF)

With every version, at least one person has run into the same errors on the Sampler Scheduler Settings (JPS) and BasicScheduler nodes. The common factor between them was having RES4LYF installed. I was able to recreate the issue myself by installing RES4LYF.

What does this workflow do?

The Simple workflows are very basic:

Supports up to 3 LoRAs

Normal image generation via KSampler

Performs upscaling via Ultimate SD Upscale

Saves image to your default output folder

The v

Allow you to use as many LoRAs as you want (theoretically)

Watermark detection and removal (not on v12)

Upscaling via USDU

Detailing for faces, etc.

Color/contrast adjustment

Other various settings that affect the output (not on v12)

Save image with full metadata that civitai can read

I feel like this goes without saying, but you will need to adjust the settings to fit your preferences.

Commonly asked questions/issues:

The workflow is stopping abruptly right before or after the USDU nodes.

If you are seeing errors where the scheduler inputs on the BasicScheduler and USDU nodes are red, then you probably have a custom sampler or scheduler installed that is not playing nice with them.

Solution/workaround:

Usually, the fix is deleting the Sampler Scheduler settings node.

OR uninstall the custom samplers/schedulers

Another possible reason would be that you don't have SAM and/or detection models selected/installed for the Detailer groups (this includes the Watermark removal group). The models displayed initially are going to be based off my install. You have to select the model installed on your machine.

Note: The SAM loader is right below the Watermark removal group or to the left of it depending on what version you are using.

Another issue involves the Impact Scheduler Adapter nodes. (These are not present on v12 or v11k.)

Solution/workaround:

Delete the Impact Scheduler Adapter node in the Watermark Removal group and in each Detailer group.

Disconnect the noodles going into the scheduler on the related detailer nodes and set the scheduler manually.

If none of those worked, then unfortunately you're on your own to do troubleshooting or choose to not use my workflows.

Why does the image become washed out after the initial image is generated?

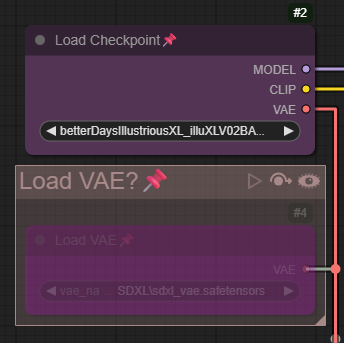

Your model/checkpoint probably does not have a baked-in VAE. Toggle on the Load VAE group and make sure to select your VAE model. Usually, this is sdxl_vae and should be in your models/vae folder.

The Load VAE is in its own group and is enabled by default. Bypassing will switch to the baked-in VAE. If your model does not have a baked-in VAE, the images will be washed out after the initial image generation.

You don't see the effect until after the initial image because the VAE is not used until after that point.

Why do I use Ultimate SD Upscale instead of a KSampler or Iterative Upscale?

- USDU is what works best for me. It's just my preference and (for me) performs better than KSampler and Iterative upscaling.

How do I use LoRAs with this workflow (before v11L)?

On the lower half of the Positive Prompt node, you will see an option that says "Select to add LoRA". Click on that, then find your LoRA and click on it. It will be added to the prompt the same way A1111/Forge does it.

Alternatively, you can start typing <lora: and then manually type out the lora name.

After that, add the strength and close the tag, e.g. <lora:supercoolamazinglora:0.7>

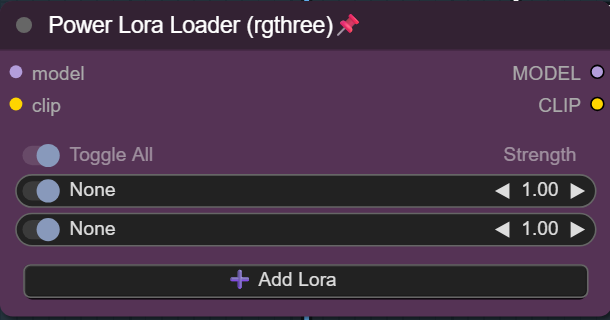

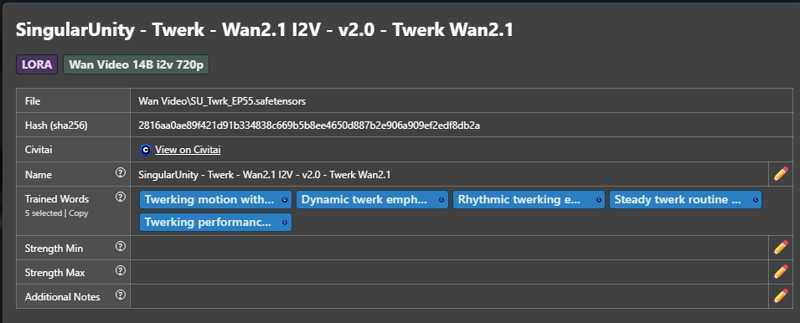
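
To illustrate the tag format described above, here is a minimal sketch that pulls A1111-style <lora:name:strength> tags out of a prompt string. This is not how the prompt node itself is implemented; the function name and the default strength of 1.0 when no strength is given are assumptions made for the example.

```python
import re

# Matches <lora:name> or <lora:name:strength>; strength may be negative or fractional.
LORA_TAG = re.compile(r"<lora:([^:>]+)(?::(-?[0-9]*\.?[0-9]+))?>")


def extract_loras(prompt: str) -> tuple[str, list[tuple[str, float]]]:
    """Return the prompt without LoRA tags, plus a list of (name, strength)."""
    loras = []
    for match in LORA_TAG.finditer(prompt):
        name = match.group(1)
        # Assumption for this sketch: a missing strength means 1.0.
        strength = float(match.group(2)) if match.group(2) else 1.0
        loras.append((name, strength))
    # Remove the tags and collapse any leftover whitespace.
    cleaned = " ".join(LORA_TAG.sub("", prompt).split())
    return cleaned, loras


text, found = extract_loras("1girl, smile <lora:supercoolamazinglora:0.7>")
print(text)   # 1girl, smile
print(found)  # [('supercoolamazinglora', 0.7)]
```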

Note: on newer versions of the workflow I am using Power Lora Loader from rgthree.

- Using loras is easy with this node. Just click on Add Lora, pick your lora, and set the strength.

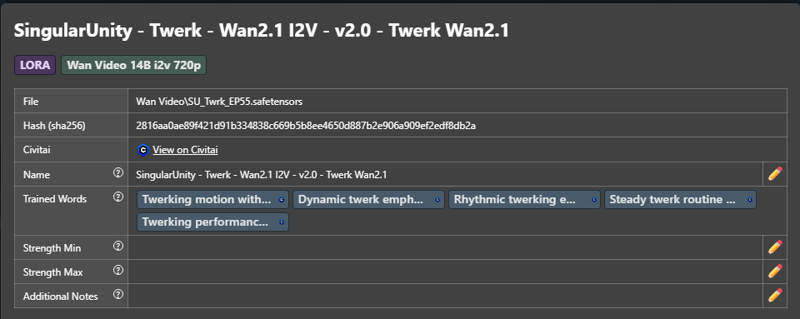

You can also pull up the info from civitai (as long as it exists) and get the trigger words (if any).

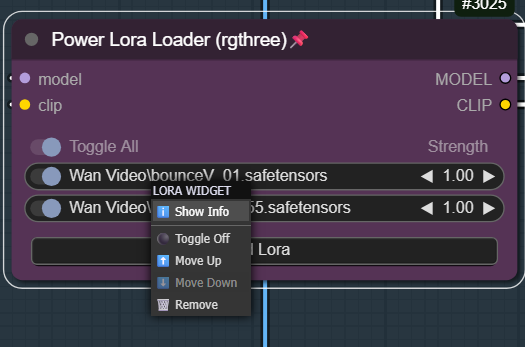

Right click on the lora name on the node and select "Show Info".

Then click on "Fetch info from civitai".

Where it shows "trained words", you can click on the words and it will give you an option to copy them.

Click where it says copy and then go to your positive prompt and paste them there.

The best part: this function is free.

I will never (intentionally) incorporate nodes that have features you have to pay for in my workflow.

Upscale Model:

You should be able to use whatever upscale model you like best, but I know some of them have issues with USDU. So if your images look normal initially but you start seeing some craziness like weird color changes after USDU, it might be your upscale model. Try a different one and compare.

FaceDetailer Models:

If I recall, Impact Pack includes the needed models to get you started, but if you want something else or it does not include them, you can find more by using ComfyUI Manager's "Model Manager" option. The two types of models needed will be "Ultralytics" and "sam".

The face model I use is no longer on civitai. Looks like the person got banned (last I checked). They have it on huggingface. I use the 1024 v2 y8n version. This specific face detection model still works the best IMO.

VAE Model:

I usually use the normal SDXL VAE or whatever is baked into the checkpoint models.

Asking for help:

I'm just someone who uses ComfyUI and I am not a developer. If you have technical questions, I probably can't help you. I make heavy use of Google when I don't know why something breaks or want to know what something does. However, I will try to help you to the best of my abilities with any non-technical questions.

In the cases where you need/want help: please do not be vague

Provide links to screenshots (if possible).

Don't be a jerk. (I am not obligated to help you. This workflow is intended for my personal use and I am sharing it freely).

Will I add to the workflow?

Probably not, because chances are I either won't ever use it OR I have tried it and hated it.

Chances are if you are asking me this, you have worked on your own workflows or have used other workflows.

If there's something you like from another workflow or there is something you like from mine, try copy/pasting whatever it is into the workflow you prefer and experiment. It's a great way to learn.

Custom Nodes used on v14:

If you disconnect any of the noodles or bypass something you should not have, then something will probably break and result in errors. Try loading the original workflow JSON or drop your last successfully generated image if you run into this and don't want to troubleshoot.

Feel free to remove and add to the workflow to fit what you want. If my workflow helped you in any way, then great.

Settings will need to be adjusted to fit your preferences unless you are trying to generate images like mine. The default settings are not meant for speed.

This has been tested on the models mentioned in my "Suggested Resources" below. YMMV. Try playing with the settings/prompts to find your happy place. Current settings are to my tastes. Adjust to your tastes/preferences accordingly!

CFGZeroStar

Without:

With CFGZeroStar:

IMO the image output while using CFGZeroStar is better.

It seems to be more coherent in where the corner is, how the window looks, and the furniture in the background, and there is no random light behind her butt.

Her right leg is smoother and there are more sparkles on the leggings.

From what I have read, it is not compatible with all models, so it will throw an error in those cases. I'll probably add this to the workflow and have it in a group to make it easy to toggle on/off.

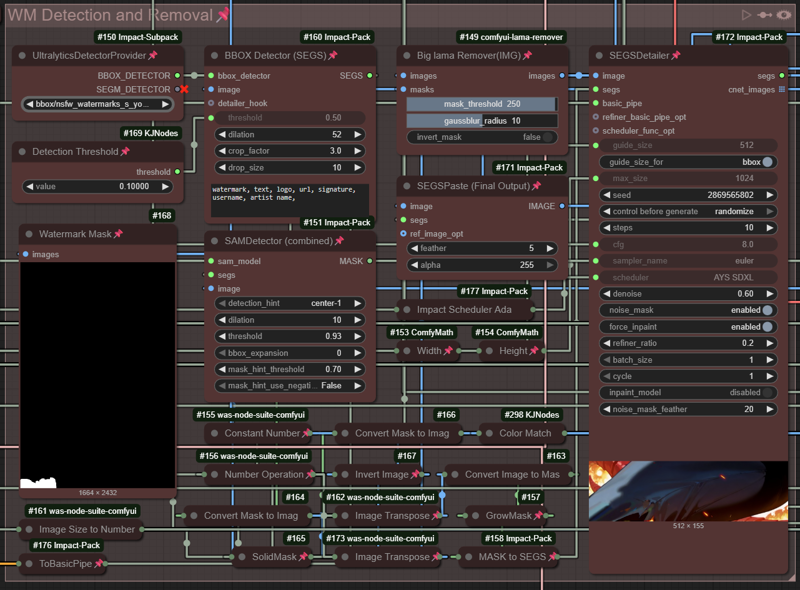

Watermark Removal

Why do I have this in the workflow?

- While rare, watermarks do still happen and I don't like having to give up on a good image because of a watermark ruining it for me.

Watermark Removal in action:

Altering any of the settings in the Watermark portion of the workflow will probably break the watermark removal. All that should be changed there is:

Detection Threshold (higher = less detection, lower = more aggressive detection)

Watermark Detection Model (use whichever one you prefer)

Text in the BBOX Detection node.

Steps, scheduler, denoise on the Watermark Remover node can be adjusted.

On v10a I have had to drop the denoise down to 0.01 in some cases.

The gaussblur radius can be adjusted up or down on the Big lama Remover(IMG) node.

Anything else, I do not recommend messing with in the watermark portion of the workflow. I didn’t come up with it and cannot advise you on what all the buttons, numbers, and settings will do. Change them at your own risk.
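
To illustrate the Detection Threshold setting mentioned above, here is a minimal sketch of how a confidence threshold filters detections. The Detection class, the labels, and the confidence values are made up for illustration and are not taken from the detector nodes.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str         # what the detector thinks it found (hypothetical)
    confidence: float  # detector score between 0.0 and 1.0


def filter_detections(detections: list[Detection], threshold: float) -> list[Detection]:
    """Keep only detections whose confidence is at or above the threshold."""
    return [d for d in detections if d.confidence >= threshold]


candidates = [
    Detection("watermark", 0.90),
    Detection("watermark", 0.45),
    Detection("watermark", 0.20),
]

# Higher threshold = less detection, lower threshold = more aggressive detection.
print(len(filter_detections(candidates, 0.5)))  # 1
print(len(filter_detections(candidates, 0.3)))  # 2
```

A threshold that is too low can flag parts of the image that are not watermarks, so it is worth changing it in small steps and comparing results.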