LORA - Consistency Simulacrum v2.3 - Flux1D [SFW/NSFW]

Model description

Version 3 was good, but I still need to figure out how to make a proper lora 10/25/2024 5:29 am gmt -7;

I'm likely going to need to refresh the simulacrum lora to an earlier state after the next stage of finetuning, which will destroy a lot of the base flux context. It's not something I want to do, but it's more or less required because the bodies have begun to stop complying with the core. There are a few options I'm going to run to see if they fix it, namely giving the bodies and poses themselves a finetune run at a lower learning rate to refresh their capability.

For temporary Version 3 enhancements, try running the simulacrum v2.1 epoch 1 lora with the current checkpoint v3 1d or 1d-dd. You'll get superior physical body quality, character context, and outfit quality and control, at the cost of a lot of FLUX's grand-scheme world context and styling.

I'm working out fixes until the full finetune training inevitably begins, which will then result in lora difference extraction.
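Lora difference extraction generally means approximating the weight change between a finetuned checkpoint and its base with a low-rank product. A minimal sketch of that idea for a single 2D weight matrix, assuming plain truncated SVD with NumPy; `extract_lora` is a hypothetical helper, not the code of any particular extraction tool, and real tools repeat something like this per layer:

```python
import numpy as np


def extract_lora(w_base: np.ndarray, w_tuned: np.ndarray, rank: int):
    """Approximate (w_tuned - w_base) with up @ down, where up is (out, rank)
    and down is (rank, in), using a truncated SVD of the weight difference."""
    delta = w_tuned - w_base
    u, s, vt = np.linalg.svd(delta, full_matrices=False)
    sqrt_s = np.sqrt(s[:rank])
    up = u[:, :rank] * sqrt_s            # scale each kept column of U
    down = sqrt_s[:, None] * vt[:rank]   # scale each kept row of V^T
    return up, down


# Synthetic "finetune": the true weight change is exactly rank 4.
rng = np.random.default_rng(0)
w_base = rng.standard_normal((64, 32))
w_tuned = w_base + rng.standard_normal((64, 4)) @ rng.standard_normal((4, 32))

up, down = extract_lora(w_base, w_tuned, rank=4)
max_error = np.abs(w_base + up @ down - w_tuned).max()
print(up.shape, down.shape, f"max error {max_error:.1e}")
```

Picking a rank below the true rank of the difference discards the smallest singular directions, which is where an extracted lora loses detail compared with the full finetune.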

Incoming: Version 3, The Raid Boss Model 10/21/2024 5:12 pm gmt -7;

I still can't believe it's this good at step 3 of 8. It's going to be something else when it's fully cooked.

I'm switching the core inference model from Flux1D to Flux DeDistilled because it responds so well to Simulacrum and to the core systems trained from my Flux1D2 lora-merged checkpoint, which I'm currently using to train loras.

I'm training using Flux1D2 as the base training model, and I've merged Simulacrum v23 into the UNET and into the base CLIP_L used by flux. The combination allows for rapid lora creation for Simulacrum, which means I can merge the loras together at any point and then merge the result into Flux1D DeDistilled.
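Merging a lora into a checkpoint adds its scaled low-rank delta to each targeted weight. A minimal sketch for one weight matrix, assuming the common LoRA convention where the delta is `up @ down` scaled by `alpha / rank` and by a user-chosen strength; `merge_lora` is a hypothetical helper:

```python
import numpy as np


def merge_lora(w, up, down, alpha, strength=1.0):
    """Fold a LoRA into a base weight: w + strength * (alpha / rank) * up @ down."""
    rank = down.shape[0]
    return w + strength * (alpha / rank) * (up @ down)


rng = np.random.default_rng(1)
w = rng.standard_normal((16, 16))
lora_a = (rng.standard_normal((16, 4)), rng.standard_normal((4, 16)))
lora_b = (rng.standard_normal((16, 8)), rng.standard_normal((8, 16)))

# Merging two loras one after the other gives the same result in either order,
# because each merge only adds its own low-rank delta to the weights.
ab = merge_lora(merge_lora(w, *lora_a, alpha=4), *lora_b, alpha=8)
ba = merge_lora(merge_lora(w, *lora_b, alpha=8), *lora_a, alpha=4)
print(np.allclose(ab, ba))  # True
```

The same addition applies to each targeted CLIP_L layer when a lora's text-encoder half is merged.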

Switching from Flux1D to Flux1D DeDistilled essentially allows me to skip the rest of Version 2.

I will release:

Flux1D2 Merged with the standalone CLIP_L for:

Simulacrum V23 epoch 10 -> the current working training version

Simulacrum V3 -> the next iteration after the mega merge.

Each of the loras will be released standalone, along with a training guide on how to make a lora that can be merged into Simulacrum v23 indefinitely, in fewer than 400 steps.

Each of these concepts was trained on 2000 images at UNLR 0.0001 and TELR 0.000001; the characters used fewer than 200 images each at UNLR 0.0003 and TELR 0.
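The two training profiles above can be kept as data and turned into trainer flags. A sketch only: the flag names `--unet_lr`, `--text_encoder_lr` and `--network_train_unet_only` are my understanding of kohya sd-scripts' network trainers and should be checked against your installed version; `TrainProfile` and `to_cli_args` are hypothetical helpers:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainProfile:
    name: str
    images: int              # approximate dataset size (characters: fewer than this)
    unet_lr: float
    text_encoder_lr: float   # 0 means the text encoder is not trained


CONCEPT = TrainProfile("concept", images=2000, unet_lr=1e-4, text_encoder_lr=1e-6)
CHARACTER = TrainProfile("character", images=200, unet_lr=3e-4, text_encoder_lr=0.0)


def to_cli_args(profile: TrainProfile) -> list[str]:
    """Translate a profile into kohya-style learning-rate flags (assumed flag names)."""
    args = ["--unet_lr", str(profile.unet_lr)]
    if profile.text_encoder_lr > 0:
        args += ["--text_encoder_lr", str(profile.text_encoder_lr)]
    else:
        # TELR 0: skip the text encoder entirely rather than training it at lr 0.
        args.append("--network_train_unet_only")
    return args


print(to_cli_args(CONCEPT))    # ['--unet_lr', '0.0001', '--text_encoder_lr', '1e-06']
print(to_cli_args(CHARACTER))  # ['--unet_lr', '0.0003', '--network_train_unet_only']
```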

Since I'm the one fixing it, it needs some properly trained fixes from me, the developer, so the characters work correctly. So far the characters have only become more useful as more concept information is introduced.

Each lora was trained using the base Simulacrum V23-merged Flux1D2 model, which means each one functions as a standalone lora alongside Simulacrum in perfect harmony.

Done:

Characters:

mizuki_shiranui_v1

android_18_v1

loona_(helluva_boss)_v1

reina_mishima_v2

delia_ketchum_v1

Concepts:

doggystyle_v1

female_fixes_v1

male_fixes_v1

tomboy_fixes_v1

tomgirl_fixes_v1

genital_fixes_v1

Todo:

mating press

missionary

futa fixes

age fixer and normalizer

clothing fixes and normalization

additional repair work

optional style loras:

cyberpunk futuristic style

movie director style

realism reduction and anime style

1990s anime style

sharp semirealistic anime style

soft semirealistic anime style

realistic anime style

bible black style

taimanin style

american cartoon style

japanese cartoon style

Experiments show that loras can be trained in as few as 100 steps and still produce usable results, which is roughly 5 minutes on a 4090 after full startup (Kohya currently takes a while to fully boot up and prepare the training).

About 10 pictures is enough, which is a standard lora image count, and more than enough to produce the character in highly complex placements and positions.
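The step counts above follow from simple arithmetic. A minimal sketch, assuming kohya-style repeats (each image seen `repeats` times per epoch) and that a final partial batch still counts as a step; how your trainer rounds is an assumption to verify:

```python
import math


def total_steps(images: int, repeats: int, epochs: int, batch_size: int = 1) -> int:
    """Optimizer steps for a run where each epoch sees every image `repeats` times."""
    steps_per_epoch = math.ceil(images * repeats / batch_size)
    return steps_per_epoch * epochs


# About 10 images at 10 repeats for 1 epoch lands on the 100-step mark.
steps = total_steps(images=10, repeats=10, epochs=1)
seconds_per_step = 5 * 60 / 100   # ~5 minutes per 100 steps on a 4090, per the notes
print(steps, steps * seconds_per_step / 60)  # 100 5.0
```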

I'm currently developing a series of finetunes to address the missing and defective traits. They will be released in combination packs of sex poses, base poses, and a handful of characters used to finetune body types in a more explicit and direct way.

1. Directly addressing the gender confusion problem.

2. Directly addressing the malfunctioning base pose and clothes problems burned into simulacrum that were damaged in the first line.

3. Introducing a series of new poses and concepts for things like;

doggystyle, mating press, vaginal, anal, etc., as single tags that should produce SOMETHING other than a question mark from a person.

2koma, before and after, cross-section, cum, and a multitude of other commonly generated tags.

female genitals producing incorrect shapes and sizes.

male genitals in the wrong directions with incorrect sizes or shapes.

anuses not existing or the bellybutton is confused for it

ignored tags to address:

bent over - use bending over, leaning forward, or something else for now.

all fours - too powerful and doesn't do the job because of the T5; use on all fours, crawling on all fours, or something of that nature.

from behind - way too powerful and doesn't work as intended.

from front - does not work correctly; will need more finetuning.
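The workarounds in the ignored-tags list can be applied automatically before generation. A minimal sketch, assuming a comma-separated tag prompt; the lookup tables and `apply_workarounds` are hypothetical, and tags are matched whole so "on all fours" is left alone:

```python
# Hypothetical lookup built from the "ignored tags" list above.
TAG_WORKAROUNDS = {
    "bent over": "bending over",
    "all fours": "on all fours",
}
# Tags with no reliable substitute yet; they are kept but flagged.
UNRELIABLE_TAGS = {"from behind", "from front"}


def apply_workarounds(prompt: str) -> tuple[str, list[str]]:
    """Swap problem tags in a comma-separated prompt; return (prompt, flagged tags)."""
    out, flagged = [], []
    for tag in (t.strip() for t in prompt.split(",")):
        if not tag:
            continue
        key = tag.lower()
        if key in TAG_WORKAROUNDS:
            out.append(TAG_WORKAROUNDS[key])
        else:
            if key in UNRELIABLE_TAGS:
                flagged.append(tag)
            out.append(tag)
    return ", ".join(out), flagged


print(apply_workarounds("1girl, bent over, from front, smile"))
# ('1girl, bending over, from front, smile', ['from front'])
```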

When the necessary markers are hit (including a second list of tests and markers after the first tests), I'll release Version 3.0 with a huge sigh of relief that it's actually working.

Version 2.3 Epoch Emergent Behaviors;

The list of strange things I've been able to do and have seen keeps growing. It ranges from merging limbs, to merging people into things, to merging walls into walls, on and on and on.

1DEV SETTINGS:

CLIP_L - 150 tokens

Steps 25-50

CFG 1

DCFG 3.2-5.5 (3.5 is my go-to)

Euler < Simple / Normal

The model seems to function with Schnell too, which is pretty awesome. I didn't expect that, but I'll take it. I've gotten fairly good results with 8 step Schnell generations using fp8.

SCHNELL SETTINGS:

CLIP_L - 150 tokens?

Steps 4-12

CFG 1

DCFG = 0

Euler < Simple / Normal work the best, same as 1D.

Works FANTASTICALLY with Flux DEDISTILLED but it's slow. I'll need to figure out how to speed this up.

DE-DISTILLED SETTINGS:

CLIP_L - 150 tokens???

- Negative prompting works well enough to use danbooru tags as negatives.

Steps 20-50

CFG 6-8

DCFG = 0

DPM++ 2M works, and many others probably do too, though I haven't tested them.
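The 1DEV, Schnell and DeDistilled settings above can be kept as presets and checked before a run. A sketch only: the sampler and scheduler strings use ComfyUI-style names (`euler`, `dpmpp_2m`, `simple`, `normal`) as an assumption, no scheduler is filled in for DeDistilled because the notes don't give one, and `SamplerPreset`/`out_of_range` are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SamplerPreset:
    steps: tuple[int, int]       # (min, max)
    cfg: tuple[float, float]
    dcfg: tuple[float, float]    # distilled CFG / Flux guidance
    sampler: str
    schedulers: tuple[str, ...]  # empty = not stated in the notes


PRESETS = {
    "1dev": SamplerPreset((25, 50), (1.0, 1.0), (3.2, 5.5), "euler", ("simple", "normal")),
    "schnell": SamplerPreset((4, 12), (1.0, 1.0), (0.0, 0.0), "euler", ("simple", "normal")),
    "dedistilled": SamplerPreset((20, 50), (6.0, 8.0), (0.0, 0.0), "dpmpp_2m", ()),
}


def out_of_range(model: str, steps: int, cfg: float, dcfg: float) -> list[str]:
    """List the settings that fall outside the recommended ranges for `model`."""
    preset = PRESETS[model]
    issues = []
    for label, value, (lo, hi) in (
        ("steps", steps, preset.steps),
        ("cfg", cfg, preset.cfg),
        ("dcfg", dcfg, preset.dcfg),
    ):
        if not lo <= value <= hi:
            issues.append(f"{label}={value} outside {lo}-{hi}")
    return issues


print(out_of_range("1dev", 25, 1.0, 3.5))      # []
print(out_of_range("schnell", 30, 1.0, 0.0))   # ['steps=30 outside 4-12']
```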

I'll need to make a series of merges that support Q_2 to Q_8 at some point, but not today.

Version 2.3 Epoch 10 Release 10/16/2024 5:24 pm gmt-7;

It's Halloween... I had to do at least a few Halloween pictures.

It's gone off the deep end: it requires the CLIP_L lora blocks to be loaded, or the output won't look anywhere close to correct. They load automatically in Forge, but in ComfyUI you need to use the base LORA loader with the CLIP properly routed through it.

It might be wise to combine the two models when it comes time for the next round of training. Each is showing strengths the other lacks, which means they can teach each other quite a bit.

This will be the last base model upgrade for a bit. I'm burned out. So I hope you guys n grills like it. The damn thing cost nearly $1700.

As stated before, epochs 5 and 10 are released, as well as one epoch trained on top of the epoch from the first v2.2 training debacle, whose caption bleed was negligible.

This version is stable.

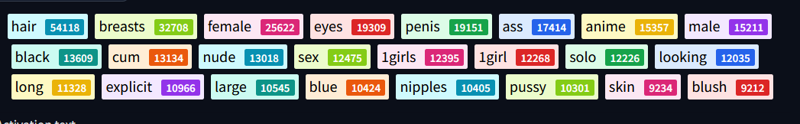

It handles danbooru tags, gelbooru tags, some rule34.us tags, some rule34.xxx tags, some Sankaku Complex tags, and an astronomical amount of flux captions. It rarely falls apart at the seams if you stick to things that can plausibly exist, or to combinations of up to about five things that simply cannot exist. Beyond a complexity of about 5 it starts to break down, much like most LLMs.

Hundreds of duplicate tags were normalized into either danbooru or gelbooru common tags, depending on which the dataset had more of.

The futa and femboy outbreak has been quelled for now, but they still most definitely exist. Character trait bleeding like that often seems to be a sign that a model is breaking down; new traits appearing on characters is the canary in the coal mine. It'll still produce them, and much more consistently now, so feel free to play with them if that's your thing.

I was careful to make sure futa and femboys were in there from the beginning, because I didn't want them to appear randomly later without being prompted, which was what I feared most while making this model. Base simulacrum 2.1 has futa and femboy burned in to account for this exact possibility, and it really scared me when it had the opposite effect... Dick trees, dick lamps, dick walls, dick posters. I believe I'm beyond the worst of it. This model is stable and I'm tired boss.
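Normalizing duplicates toward whichever spelling the dataset uses most can be done with a frequency count. A minimal sketch, assuming captions are already split into tag lists and that the alias groups (which spellings mean the same thing) are supplied by hand; `normalize_tags` and the example tags are hypothetical:

```python
from collections import Counter


def normalize_tags(captions: list[list[str]], alias_groups: list[set[str]]) -> list[list[str]]:
    """Map every tag in an alias group to the spelling the dataset uses most,
    then drop duplicates inside each caption while keeping tag order."""
    counts = Counter(tag for caption in captions for tag in caption)
    canonical = {}
    for group in alias_groups:
        # max() keeps the first maximum, so ties go to the alphabetically first tag.
        winner = max(sorted(group), key=lambda tag: counts[tag])
        for tag in group:
            canonical[tag] = winner
    normalized = []
    for caption in captions:
        seen, kept = set(), []
        for tag in caption:
            tag = canonical.get(tag, tag)
            if tag not in seen:
                seen.add(tag)
                kept.append(tag)
        normalized.append(kept)
    return normalized


captions = [["1girl", "blonde hair"], ["1girl", "blond hair", "blonde hair"], ["blonde hair"]]
print(normalize_tags(captions, [{"blonde hair", "blond hair"}]))
# [['1girl', 'blonde hair'], ['1girl', 'blonde hair'], ['blonde hair']]
```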

One simply CANNOT ignore what IS, and this entire model is founded on that principle. Everything goes in.

It introduces over 10,000 new tags in total, give or take. I basically fed it a quarter of danbooru's tags for a foundation. The outcome is what you would expect: semi-chaotic if you don't stick to the simulacrum subject controllers that I built into the core. It still needs a ton of training, and the token limit needs to be increased again, but for now it'll do.

Use PERIODS to hard-break. CLIP_L only handles 225 tokens, so anything past that point will probably make no difference. Use "." as the break.

T5 handles 525, I think, so you can still extend beyond the 225 tokens with T5, but it's going to be hit or miss if you don't use it properly.
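A rough way to see which period-separated sections land past the CLIP_L budget. This is an approximation: `approx_tokens` counts words and punctuation as a stand-in for CLIP's real BPE tokenizer (which usually produces as many or more tokens), and both helpers are hypothetical:

```python
import re

CLIP_L_LIMIT = 225  # token budget quoted in the notes


def approx_tokens(text: str) -> int:
    """Rough stand-in for CLIP's BPE tokenizer: count words and punctuation marks.
    Real CLIP token counts are usually equal to or higher than this."""
    return len(re.findall(r"\w+|[^\w\s]", text))


def segments_past_limit(prompt: str, limit: int = CLIP_L_LIMIT) -> list[str]:
    """Split a prompt at periods and return the segments that start after `limit` tokens."""
    used, late = 0, []
    for segment in (s.strip() for s in prompt.split(".")):
        if not segment:
            continue
        if used >= limit:
            late.append(segment)
        used += approx_tokens(segment) + 1  # +1 for the period that ends it
    return late
```

A segment that starts before the limit but runs past it is partly seen by CLIP_L, so it is not reported; anything returned is a candidate to rely on T5 for.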

realistic, anime, 3d

safe, questionable, explicit

from front, front view

from side, side view

from behind, rear view

from above, above view

from below, below view

Mix directionals, like: from side, from above, side view

Handles multiple species: animal, creature, humanoid, robotic, and more, not to mention human.

male, female, futanari, femboy, trap, otoko no ko

1boy, 1boys, 1girl, 1girls, 1futa, 1futas, 1trap, 1traps are the numbering units, as the majority of the tags from sites like rule34 had odd tagging paradigms. I simply left these in, instead of trying to make sense of the chaos at the time.

Think of these as an inaccurate human counter with some base traits.

For T5 inference, the T5 mostly responds to "1girl" / "one female" or "1boy" / "one male", so stick to those if you want the power of the T5 on your shoulders.

So combinations like "1boy otoko no ko" are valid in the T5's eyes, while CLIP_L will respond very differently.
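Keeping the booru count tags for CLIP_L while also giving T5 the phrasing it prefers can be automated. A minimal sketch; only the two pairings named in the notes are included, and `add_t5_phrases` is a hypothetical helper:

```python
# Pairings named in the notes; these are the only two given.
T5_PHRASES = {"1girl": "one female", "1boy": "one male"}


def add_t5_phrases(tags: list[str]) -> list[str]:
    """Keep the booru count tags for CLIP_L and append the phrasing T5 responds to."""
    out = list(tags)
    for tag in tags:
        phrase = T5_PHRASES.get(tag)
        if phrase and phrase not in out:
            out.append(phrase)
    return out


print(", ".join(add_t5_phrases(["1girl", "1boy", "smile"])))
# 1girl, 1boy, smile, one female, one male
```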

skinny, tall, narrow waist, thin, petite, fat, voluptuous, thick, small, large, big, giant

faces and facial expressions

hair colors, hairstyles, hair types, hair sizes

eye colors, eye types, eye styles, eye sizes

chest sizes, breast sizes, shoulder sizes, waist sizes, hip sizes

relative body sizes from one to another

arm angles, leg angles, head angles, feet angles, hand angles

full poses, half poses, upper body, lower body, upper arm, lower arm, fingers, neck, upper leg, lower leg, knees, left knee, right knee, left arm, right arm, and so on

legs together, spread legs, feet together legs apart, spread legs, legs spread, left leg, left leg bent, right leg, right leg bent, right leg up, right leg down, split, standing split, sitting split, wide spread legs, spread legs feet together, squatting, kneeling, all fours, and probably an additional 20 or so more core poses that I can't remember.

thousands of clothing types

thousands of shoe types

thousands of hair types

many many types of latex <- I like latex.

jumpsuits, bodysuits, leotards, thighhighs, leggings, pants, yoga outfits, dresses, bikinis, slingshot swimsuits, skirts, casualwear, shoes, many more

T5 can handle most abstract complexities that the CLIP_L can't, so don't be afraid to use words like "each", "multiple", "them", "together" and so on. Blend captions into the booru prompts for better results and don't be afraid to experiment.

Inference with 25 steps and Euler -> Normal works best; Euler -> Simple works too. Experiment.

I usually pick a base resolution, then upscale to 1.1x at about 0.72-0.80 denoise.

I've switched to Forge since ComfyUI basically patched itself to death, so I advise using Forge for now.

I set up some cobblestone steps on the hazardous road now. Hopefully those of you who followed this and learned from it will use some of the steps instead of stumbling like I did. Though, we all have to learn our own way, so you do you.
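Computing the 1.1x upscale target is simple, but Flux pipelines generally want dimensions on a fixed grid. A sketch assuming sides are snapped to multiples of 16 (a common choice for Flux, not something stated in these notes); the 0.72-0.80 denoise is a separate sampler setting and is only recorded here:

```python
DENOISE_RANGE = (0.72, 0.80)  # second-pass denoise from the notes


def upscale_size(width: int, height: int, factor: float = 1.1, multiple: int = 16) -> tuple[int, int]:
    """Scale a resolution and snap each side to the nearest multiple of `multiple`."""
    def snap(x: float) -> int:
        return max(multiple, round(x / multiple) * multiple)
    return snap(width * factor), snap(height * factor)


print(upscale_size(1024, 832))  # (1120, 912)
```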

Version 2.2 Epoch 10 The replacement 10/16/2024 10:34 am gmt-7;

- The retrain will be released soon. I'll release epochs 5 and 10 simultaneously. Epoch 5 is fantastic, epoch 8 is great. I just hope epoch 10 is up to epoch 8's standards.

Version 2.2 Epoch 8 The release 10/16/2024 6:36 am gmt-7;

I refuse to let this model end in tragedy.

I have decided that I cannot simply destroy this beautiful model. It cost too much and it does produce some very interesting stuff. I don't recommend training it further, as it has reached a point of instability that cannot be salvaged. I'm dubbing this the futa model and will release it on a completely different model page, since it's producing mostly futa, femboy, and ambiguously gendered subjects when using complex captioning, completely ignoring the NSFW markers.

It will produce tons of NSFW content and often ignore the prompt. It won't always produce NSFW elements, since the system was not meant to produce them by default; the complex captioning without T5 created this paradoxical issue. Tragic as it might be.

I cannot destroy my child. It's worked so hard and come so far, it deserves to be seen and played with at least a little before its death in obscurity, like all of us face no matter how famous or interesting we are as people.

Version 2.2 Epoch 8 The breakdown;

As of epoch 8 the model has begun its imminent breakdown due to overfitting. Disappointing, I must say, but I'm pretty sure I figured out the reason for it. The next test will determine whether it's the CLIP_L being trained exclusively without the T5, or the complex captioning. I'm fairly certain it was the complex captioning.

All of the standard captions started ignoring the flux base model, which means something was obviously very wrong with the training setup, given the dual captions chosen for some of the images.

I'm almost certain it failed because I did not train the T5, which caused the inevitable breakdown from complex caption training. I want to experiment with this, as it's definitely how they made flux to begin with, but I'm not willing to run that experiment right now due to the already high expenses.

Sigh... Expensive failure learning experience.

Epoch 5 is still good, but I won't be using it for a base due to having too much complex caption training already embedded into it. One epoch of that is more than enough to get the majority of the information needed to link the captions indefinitely for further training in the future.

The complex and detailed captions weren't taught to the t5, so the CLIP_L and the training started to slowly trickle them together. Before I noticed it, it was too late. The system had collapsed in on itself. Everything had dicks, tits, eyeballs, vaginas, and everything else that was meant to be separated.

It's honestly not as bad as it sounds, but it's a lot like watching a train wreck. You can see it happening in almost slow motion, but there's nothing on this earth that can stop it. Eventually it hits a sunk cost point and you have to cut it, which hurts but it is what it is.

I pruned all complex captions out of the data and began training another Pack25 version from the first completed version 2.2 epoch. The new line has completed epoch 2 and is brewing epoch 3.

This line has shown similar behaviors to the other, but the progression has been a little slower due to removing the complex captioning. I'm not going to train T5, or risk training it, right now; the costs are mounting and I'm not willing to wager on that gamble today.

Cross your fingers boys n grills. Epoch 2 is looking better than the other. I'll let it run to 10 and cut it from this dataset.

I'm nearly $1400 in so far just on training this thing, so I'm definitely going to need to reduce costs soon.

I'll be working out merges of epoch 5 from train 1 with epoch 5 from train 2, and then working my way upward until there's a reliable epoch 8. After that I'll be training substantially smaller batches of 1k for 10 epochs on much smaller, cheaper hardware than 6 A100s, so the updates will be smaller increments of introduced data and information.

I'll know soon enough whether it was a problem other than the untrained T5. In any case, I'll share my findings in a full assessment article soon.

Version 2.2 Epoch 5 pt2 - Sex emergence...??;

Apparently, at some point, sex started working. I'm certain it barely works in v2.1, but in v2.2 epoch 5 it most DEFINITELY works.

To pose sex, simply identify the basic traits of each character:

A woman on all fours with blonde hair, a man kneeling against her from behind, doggystyle sex, clothed female nude male. 1boy, 1girl, male, female.

Things like this should produce sex poses, which I did not expect to happen until much later. The subject fixation tags should probably work if the generic tags work.

You can solidify the tags with more sex tags such as vaginal penetration, vaginal, anal, or whatever. Careful though or you'll probably spawn futa. It's kinda futa happy in this epoch.

Also, when using doggystyle you'll probably get a dog-faced human once in a while, so solidify face traits to avoid cross-contamination until the training burns out the incorrect features on its own.

- Unless you're into that, in which case slap the humanoid tag on there.

I posted a few pictures. They really weren't hard to make. Just use solidifiers if the thing acts up or generates something incorrect for now.

The more tags you use the more likely it becomes anime, unless you solidify anime at the first tag, in which case it will default to anime as a near guarantee.

Since I basically fed it donuts like I was torturing Homer Simpson, it's probably going to produce every single pose with a bit of elbow grease. This isn't even the pose glue finetune data, just the linker data with the core of simulacrum 2.1, so this result wasn't expected yet. The training is ahead of schedule.

I might be inclined to pull the Epoch 5 model if the sex is too graphic in too many unexpected ways when used with realism. This model isn't supposed to be primarily realism, but it can be used to generate realism. The idea is to default to anime and generate more realistic anime, not to generate realistic characters and then solidify anime over top.

I'm flooding it with anime, and yet it's producing realism. I'm honestly a little confused as to what flux is doing.

Almost all sex poses trained into simulacrum 1.7 and solidified in 2.1 use large-breasted women, yet it seems to allow any female form, which makes me nervous. The outcome is unexpected and unwanted.

The breadcrumbs for sex poses left behind from simulacrum 1.7 to simulacrum 2.1 are definitely being latched onto and trained. The core nodes set up to link to certain traits are activating, like mastering a new skill, over and over. It's highly unexpected.

The block heatmaps and patterns are looking very similar to when I TRIED to proc it before but got very little response, except now the chains are exponentially longer and running much more cleanly from block to block.

All of the faces of the synthetic humans I generated seem to have blended into one face, which is different than the base flux woman's face. Each of these women should have between medium and huge breasts and are considered age 25+. Three of them could basically be considered over 50.

This outcome could very well be the breakdown of the model that I was expecting to happen eventually, or it could be comparable to a full emergence of new concepts converging into one coalesced potential. I kind of understand why people are afraid to release these things now.

So far the cohesion of the model hasn't been impacted by it, so it'll still produce the majority of default tags as anime, and they aren't just instantly doing the dirty nasty. So far so good. Most of the poses don't work immediately, which means most of it is still on track. I'll need to adjust based on new weights soon.

Version 2.2 Epoch 5;

Same data as yesterday, just more training on it. The complexity is enough to share it, so have fun.

Even without proper series and character finetuning, which is stage 7, it's already producing them.

The dual caption + booru tag trainings are beginning to cut through like a hot knife through butter. This one is definitely diverging in a big way and still in one piece.

Experiment with much longer captions and include multiple tag sequences.

Version 2.2 20k image pack epoch 3; the divergence emergence - 10/14/2024 - 7:21 pm;

Due to the slowly diverging nature of this model and base flux, I've decided to declare it a divergent base model. The frog has officially boiled in the pot. I'm officially designating Simulacrum v2.1 as a base training model until I can figure out how to merge v2.2 into flux1d2. Until then, 2.1 will function as the divergent training assistant, formatted in a small size and meant to streamline flux-based loras using booru tags.

The entire purpose of Simulacrum v2.1 is to function as a placeholder for continued training. Simply continue training that lora using the flux1d2 model as the foundation, with t5xxl_fp16 and a base clip_l for flux, and when it's done, run the result on Flux1D. Simulacrum v2.1 is very small (71 megs), so rapid-fire offloading of quickly trained flux loras should be easy enough. Train it at a UNet LR of 0.001, and don't train the TE unless you're training more than 1000 steps (or batch/images) or introducing a completely unique tag that the system doesn't recognize. In any case, always use the CLIP_L section of the lora (TE) at inference. Before you know it, your images will be ready. The 0.001 UNet LR should burn away most of Simulacrum.

If you train v2.2 you will produce a substantially larger lora with the same base core 2.1 model at its foundation. 2.2 most definitely can be trained, and likely much more effectively than 2.1, but its 600 meg size is going to be a wrench in any mass production line.

I cannot merge 2.2 into a base model for direct inference as of today. There are currently no methods to do this with my trained models through the easy-access routes, and I don't have time today to write the required Python code.
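The text-encoder rule of thumb above can be written as a small check. A sketch only: reading "more than 1000 steps or batch/images" as steps × batch size is my interpretation, and `should_train_text_encoder` is a hypothetical helper:

```python
UNET_LR = 1e-3  # "Train it at 0.001 Unet"


def should_train_text_encoder(steps: int, batch_size: int, new_tag: bool) -> bool:
    """Leave the TE alone for short runs unless the data introduces a tag the
    model does not recognize. steps * batch_size is an interpretation of the notes."""
    return steps * batch_size > 1000 or new_tag


print(should_train_text_encoder(400, 1, new_tag=False))   # False
print(should_train_text_encoder(600, 2, new_tag=False))   # True
print(should_train_text_encoder(100, 1, new_tag=True))    # True
```

Whichever branch you take, the CLIP_L part of the resulting lora still gets loaded at inference.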

When the core model is merged directly into flux1d2pro it'll be much more convenient to train loras with it, since it'll simply respond better to booru loras inherently, and the outcome will function on base flux1d. That's not today though.

As of v2.1 - The images have shown enough divergence to follow the required patterns meant to assist with most loras and learning, while still providing the base information necessary for easy character and data finetuning.

As shown in v2.2 - The outcome of 2.1's additional training has shown exponentially more effective utility in training sub-models and off-shoots, as well as quicker training when training 3d, anime, and video game characters for introduction and detailing.

The majority of tests have shown that outfits, context-based situations, and sex-based situations are considerably easier to train in, and more likely to appear with fewer tags.

After the core trainings, the 20k image pack has produced substantially stronger results than ever anticipated based on the diagrams and mathematical potentials, far beyond my forecasts.

Based on the math, I anticipate a greater than 80% absorption rate, and an even higher caption utility retention rate.

Version 2.2 epoch 1; 20k image pack first epoch - 10/14/2024 - 3:36 pm

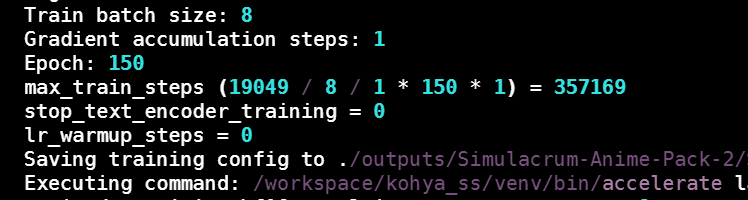

Drastic size increase - 64 dims = 600 meg download, quite large in comparison to before. Larger pot for the plant's roots to grow.

Token limit increased from 75 to 225.

Alpha 128

Dims 64

Trained on 6x a100s.
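What "Alpha 128, Dims 64" means numerically, and why dims drive file size. A sketch assuming the common alpha / rank scaling convention and bf16 storage (2 bytes per parameter); the 3072-wide layer is only an example width, and the helpers are hypothetical:

```python
def lora_scale(alpha: float, dims: int) -> float:
    """Multiplier applied to up @ down at inference (alpha / rank convention)."""
    return alpha / dims


def lora_layer_bytes(in_features: int, out_features: int, dims: int, bytes_per_param: int = 2) -> int:
    """Size of one linear layer's LoRA pair: down is (dims, in), up is (out, dims)."""
    return dims * (in_features + out_features) * bytes_per_param


print(lora_scale(alpha=128, dims=64))  # 2.0
# Size grows linearly with dims: doubling the rank doubles every layer's pair.
print(lora_layer_bytes(3072, 3072, dims=64), lora_layer_bytes(3072, 3072, dims=32))
```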

Most are tagged with NSFW ratings: explicit, questionable, safe.

Most are labeled anime, 3d, or realistic.

Most have 1girls /AND/ 1girl to solidify, as well as 1boys and 1boy, but it seems some have wormed away from the tagging. You should be able to get different results from both due to the mixed training now, proccing the newer ones using 1girls and 1boys.

Play with it. It's been infused with an absolute ton of new data.

Mostly anime (3/4 or more), but there's quite a bit of 3d and realistic in there.

Trained with an additional 19k images, the outcome seems to be a bit unstable. I will likely need to reduce the learn rate and begin dropping random blocks, but I'm uncertain as of now.

The resize and continued train might have destroyed too much data, might need to retrain it from the resize point. Give it a shot.

I legitimately laughed when I saw this. There is an absolute ton of sex in there, apparently. Even with that much cum learning, it's not just spattering it on everything either; it's doing a pretty good job differentiating where it should and shouldn't go.

Trained with the FLUX SHIFT timestep sampling, which should give more unique results than the last batch.
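For reference, shift timestep sampling as I understand it (for example, the "shift" option in kohya's Flux trainer) draws a logit-normal timestep and then applies the SD3/Flux-style time shift. A sketch under that assumption; the default shift of 3.0 and sigmoid scale of 1.0 are assumptions, not the settings used for this model:

```python
import numpy as np


def shift_timesteps(n: int, shift: float = 3.0, sigmoid_scale: float = 1.0, seed: int = 0) -> np.ndarray:
    """Logit-normal timesteps in (0, 1), then the time shift
    t' = shift * t / (1 + (shift - 1) * t), which moves samples toward t = 1."""
    rng = np.random.default_rng(seed)
    t = 1.0 / (1.0 + np.exp(-sigmoid_scale * rng.standard_normal(n)))
    return shift * t / (1.0 + (shift - 1.0) * t)


base = shift_timesteps(10_000, shift=1.0)     # shift = 1 leaves t unchanged
shifted = shift_timesteps(10_000, shift=3.0)
print(round(base.mean(), 3), round(shifted.mean(), 3))
```

With shift above 1, every sample moves toward t = 1, the high-noise end in the usual Flux convention, so training spends more time on coarse structure.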

The normalization heuristic said these are the images, so these images were used. Simple math really.

You can definitely make some pretty twisted stuff with this model. Epoch 3 is shaping up to be even more twisted. It'll be fun.

The first 2500-image training concludes - 10/13/2024 - 11:10 am - $500 total so far:

For the next iteration I'm increasing the dims, and I don't know how it'll respond.

The outcome is absolutely fantastic. Most markers hit, most of the desired new information introduced. There are still some problem combinations, like lying on back, but they can be bypassed using a bit of wordplay, such as: lying, facing the viewer, and so on. They will be ironed out automatically as the training matures. The process includes solidifications of common tag usage like that.

Stage 2 of 8 is now under way. High quality outcomes expected. Prompt the same as before, just better outcome. Millions of new tag combinations, hundreds of millions of new potentials. Includes better everything. A direct continued training from the original merged Simulacrum v1.7 trained directly on the Flux.1D2pro core model in continuation.

All of the currently trained data was sourced from a large pool based on the heuristic chance of introducing the data needed for the other images in the least weighted amount of time. This next batch was sourced from the results of the first batch, using comparative latents.

The additional data has been shown to only introduce more data and create footholds for the next set of weighted tags, so the next 15000 image training has begun.

We should be hitting markers of upwards of 50,000 trained and finetuned tokens. Flux is still holding it together, which means I'm creating a fully divergent finetune over time.

Next up, pack 2 - 19k.

TLDR; Stage 1 of 8 https://civitai.com/articles/7196/training-flux-to-behave-like-pony

Flux Instructions:

Generate images with Flux.1 dev

Load the model AND clip of this lora. Clip is not REQUIRED, but definitely very recommended as that's a large portion of the experiment.

I noticed it won't load on some lora stacks, so you'll likely run into problems trying to load its clips with some ComfyUI extensions. If it gives you problems, load it directly using the built-in ComfyUI lora loader.
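What "load the model AND clip" means in a ComfyUI graph: the prompt must be encoded with the CLIP output of the lora loader, not the raw CLIP. A partial API-format fragment built as a Python dict, assuming the built-in `UNETLoader`, `DualCLIPLoader`, `LoraLoader` and `CLIPTextEncode` nodes; the file names are placeholders and the sampler, VAE and latent nodes are omitted:

```python
import json


def flux_lora_fragment(lora_name: str, strength_model: float = 1.0, strength_clip: float = 1.0) -> dict:
    """Partial ComfyUI API-format graph: loaders -> LoraLoader -> CLIPTextEncode."""
    return {
        "1": {"class_type": "UNETLoader",
              "inputs": {"unet_name": "flux1-dev.safetensors", "weight_dtype": "default"}},
        "2": {"class_type": "DualCLIPLoader",
              "inputs": {"clip_name1": "t5xxl_fp16.safetensors",
                         "clip_name2": "clip_l.safetensors", "type": "flux"}},
        # The lora patches BOTH the model and the CLIP it receives.
        "3": {"class_type": "LoraLoader",
              "inputs": {"model": ["1", 0], "clip": ["2", 0], "lora_name": lora_name,
                         "strength_model": strength_model, "strength_clip": strength_clip}},
        # Encode with the lora-patched CLIP (output 1 of node 3), not node 2's raw CLIP,
        # otherwise the text-encoder half of the lora is silently skipped.
        "4": {"class_type": "CLIPTextEncode",
              "inputs": {"clip": ["3", 1], "text": "1girl, red eyes, black dress"}},
    }


print(json.dumps(flux_lora_fragment("simulacrum.safetensors", strength_model=0.8), indent=2))
```

Lowering `strength_model` while leaving `strength_clip` alone matches the advice below about letting other loras cut through.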

Will most DEFINITELY work with other LORAs, 100%. I've tested multiple LORAs that alter pose and situation to mixed effect, so be wary of poses. Loras based on style, theme, character, detail, effects, colorations, and so on work very well. Not every time, but lots of the time.

Reduce the UNET (model) strength to let other loras cut through if Simulacrum is stubborn; it's meant to be a slow-cooked finetune (UN-0.0001, TE-0.000005), not a powerhouse. I wouldn't reduce the clip strength too much without testing.

Prompting:

Example 1 (comparison images: v1.7, v2.1, v2.1):

a woman sitting at a table in a coffee shop.

1girl, long black hair with braided tips, red eyes, holding coffee cup, thick makeup, gothic, crossed legs, black dress.

the early morning light cuts through the window leaving cascading shadows over the figure of the dimly lit coffee shop.

The city outside has barely begun to bustle but there is still multiple cars and people wandering by heading to work.

Example 2 (comparison images: v1.7, v2.1, v2.1):

an anime woman sitting at a table in a coffee shop.

1girl, long black hair with braided tips, red eyes, holding coffee cup, thick makeup, gothic, crossed legs, black dress.

the early morning light cuts through the window leaving cascading shadows over the figure of the dimly lit coffee shop.

The city outside has barely begun to bustle but there is still multiple cars and people wandering by heading to work.

Simple guide:

Booru tags can go anywhere, but I recommend placing them in the identified subject sections.

I recommend 50 steps of Euler with Simple or Normal, but it's not necessary. You can get quality output with substantially fewer steps on other schedulers.
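The layout of the example prompts (scene caption, then the subject's booru tags, then extra caption sentences, each ending in a period) can be assembled programmatically. A minimal sketch; `build_prompt` is a hypothetical helper, and the periods act as the hard breaks described earlier:

```python
from typing import Sequence


def build_prompt(scene: str, subject_tags: Sequence[Sequence[str]], details: Sequence[str] = ()) -> str:
    """Scene caption, then one tag sentence per subject, then extra caption sentences.
    Each section ends with a period so it acts as a hard break."""
    sections = [scene, *(", ".join(tags) for tags in subject_tags), *details]
    return " ".join(s.strip().rstrip(".") + "." for s in sections if s.strip())


print(build_prompt(
    "an anime woman sitting at a table in a coffee shop",
    [["1girl", "long black hair with braided tips", "red eyes", "holding coffee cup"]],
    ["the early morning light cuts through the window"],
))
```

Keeping each subject's tags in its own sentence is one way to follow the advice to place booru tags in the identified subject sections.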

The first 2500 training begins - 10/9/2024 - 7:50 pm:

The first pack of 2500 is in training with 4 a100s.

1D original released. Feel free to play with it and be sure to play with merges using it and the D2 version. It's pretty fun.

I'd say the D2 version is considerably stronger, but they seem to do well when paired together. Like twin models in a way.

Both models are trained on identical images, identical parameters, identical seeds, and identical hardware. Trained on 4x 4090s using runpod.

Only core difference is the base model trained. The outcome shows divergence in interesting ways.

Stage 2 Preliminary Begins:

ETA: 60 hours.

Simulacrum in this sense is the Latin term for a simulation, image, portrait, or statue. In this case, Flux simulating that which we desire to see. https://en.wikipedia.org/wiki/Simulacrum

These are trained model weights for Flux1D2-pro, based on the article I posted here.

Flux Simulacrum v1.7 Flux1D-2Pro Tuned -SFW/NSFW - 10/9/2024 - 7:42 am gmt-7:

A bit of revitalization and now I return with some interesting findings. Until the D1 training hits epoch 35 I can't run the full layout test, so I'm releasing this one to play with.

It WILL handle booru tags mixed with flux tags. COMBINE them all you want.

I'd like to say I did it right, but the credit can't be all on my dataset or training choices yet. I need to get the fully matched comparison Flux1D base version to be sure if Flux D2 was the conclusive outcome, or if it was in fact the training options and images.

At epoch 35 the network volume ran out of space, and my assumption that I could just load the training state was invalid, so I'm not going to continue that training from epoch 35. It turned out pretty good though, so I'm leaving it like this for now.

The 1D training failed at epoch 25 for a similar reason, so I'll be retraining that one on a larger network volume to epoch 35.

This lora runs with standard Flux1D; it was trained in fp8 mode with the bf16 models.

My initial trainings with Flux1D2 show that the overall outcome fits the intended lora more consistently. Despite my constant naysaying and nagging (sorry to everyone who had to put up with that), I can show that this training does have a causal effect on the outcome.

THESE ARE Text Encoder tuned, so be sure you ACTUALLY RUN THE CLIPS THROUGH IT when using ComfyUI.

UNet LR - 0.0001

TE LR - 0.000005
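For reference, these two rates put the text encoder at one twentieth of the UNet rate. The sketch below only restates them as command-line tokens. The `--unet_lr` / `--text_encoder_lr` flag names assume a kohya sd-scripts style trainer, which this post does not actually name, and `lr_args` is a hypothetical helper.

```python
# Learning rates from the post. The flag names follow kohya sd-scripts'
# train_network conventions, which is an ASSUMPTION: the post does not name
# the trainer used. lr_args is a hypothetical helper.
UNET_LR = 1e-4          # UNet learning rate from the post
TEXT_ENCODER_LR = 5e-6  # text encoder learning rate from the post


def lr_args(unet_lr: float = UNET_LR, te_lr: float = TEXT_ENCODER_LR) -> list:
    """Return the learning-rate part of a training command line as argv tokens."""
    return ["--unet_lr", f"{unet_lr:g}", "--text_encoder_lr", f"{te_lr:g}"]


print(" ".join(lr_args()))
# -> --unet_lr 0.0001 --text_encoder_lr 5e-06
print(f"TE / UNet ratio: {TEXT_ENCODER_LR / UNET_LR:g}")
# -> TE / UNet ratio: 0.05
```

Keeping the text encoder rate this far below the UNet rate is a common way to nudge the CLIP side without overwhelming it, which fits the note above about running the CLIPs through the lora.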

For now I'll show off some images from this one, which are quite good.

I'm also going to need to work out a better naming scheme. These things are starting to get annoyingly long with multiple versions.

Illustrious Simulacrum v1.2 SFW/NSFW - 9/28/2024:

PDXL version also released. The PDXL version should be compatible with most Pony models, while the illustrious version probably only works with Illustrious.

The tagging and training data should produce roughly similar images, so the same thing applies.

It has a better understanding of safe/questionable/explicit, but it still has trouble with safe, and will until I add all the captioning information I was going to teach FLUX-1D. I pruned the prompts out because they were bleeding everything together instead of helping.

A full retrain using the correct dataset gives us a substantially better model. It's flexible with a multitude of art styles, correctly differentiates the built-in anime, realistic, and 3d styles, and has far more images to solidify poses from many angles, all of which are great at superimposing subjects into Illustrious-XL characters.

All 7 realistic synthetic humans have been included. None of them are real and they cannot be prompted by their code-names in this version, so they are essentially just detailed tagged parts of a whole.

Latex and latex bodysuits are highly potent. They often add very interesting effects to the image due to the NAI recoloring.

The output and quality have increased substantially.

I have been testing with a ComfyUI workflow, which is attached to each of the images here.

Have fun everyone, this one is very good.

The Illustrious-XL model produces hundreds of characters natively, so experiment. Your favorite is probably in there.

Tag List:

from front, front view

from side, side view

from behind, rear view

from above, above view

from below, below view

from front, side view, from above <<< combine the tags

- if you don't get the correct depth or angle, try adding something for reference like a floor, a wall, or a ceiling.

color spectrum; almost any tag for something that can be colored works with the full color spectrum.

blue, red, green, white, black, blonde, brown, gold, silver, purple, pink, gyaru

1girl, 1boy, 2girls, 2boys,

short hair, medium hair, long hair with full color spectrum

full color spectrum of eyes

toenails, fingernails, makeup colors,

flat chest, small breasts, medium breasts, large breasts, huge breasts

thin thighs, thighs, thick thighs

skinny, muscular, voluptuous, fit, toned

dress, side-slit dress, latex dress; full color spectrum

bikini; slingshot swimsuit, swimsuit, one-piece swimsuit

yoga pants, sports bra

feet, bare feet

lots, and lots, and lots more.
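A minimal sketch of how the tags above combine into a prompt: angle tags stacked together, an optional floor/wall/ceiling reference for depth, and colorable tags crossed with the color spectrum. The tag strings are copied from the list; the helper names `build_prompt` and `color_variants` are hypothetical.

```python
from itertools import product
from typing import Optional

# Angle tags copied from the list above.
ANGLE_TAGS = {
    "from front", "front view", "from side", "side view", "from behind",
    "rear view", "from above", "above view", "from below", "below view",
}
# Objects suggested as references when depth or angle comes out wrong.
REFERENCE_OBJECTS = {"floor", "wall", "ceiling"}
# The color spectrum exactly as listed in the post.
COLOR_SPECTRUM = ("blue", "red", "green", "white", "black", "blonde", "brown",
                  "gold", "silver", "purple", "pink", "gyaru")


def color_variants(tags: list, colors: tuple = COLOR_SPECTRUM) -> list:
    """Pair every colorable tag with every color, e.g. 'red dress'."""
    return [f"{color} {tag}" for tag, color in product(tags, colors)]


def build_prompt(subject: str, angles: list, extras: tuple = (),
                 reference: Optional[str] = None) -> str:
    """Join a subject, angle tags, extra tags and an optional reference object."""
    unknown = [tag for tag in angles if tag not in ANGLE_TAGS]
    if unknown:
        raise ValueError(f"not an angle tag from the list: {unknown}")
    if reference is not None and reference not in REFERENCE_OBJECTS:
        raise ValueError(f"reference should be one of {sorted(REFERENCE_OBJECTS)}")
    parts = [subject, *angles, *extras]
    if reference is not None:
        parts.append(reference)
    return ", ".join(parts)


print(build_prompt("1girl", ["from front", "side view", "from above"],
                   extras=("red dress",), reference="floor"))
# -> 1girl, from front, side view, from above, red dress, floor
print(len(color_variants(["dress", "bikini"])))
# -> 24
```

Validating angle tags against the list catches typos early, since a misspelled angle tag would otherwise just be ignored or misread by the model.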

Illustrious Simulacrum v1 SFW/NSFW - 9/27/2024:

Seems like I used the incorrect training data. This is only about 60% of the data. I'll be retraining and testing tonight.

Illustrious is NOT my model; THIS Simulacrum is, not to be confused with the SD1.5 Simulacrum listed elsewhere on Civitai. Please support the official authors of Illustrious. Illustrious is not a PDXL offshoot; it's an offshoot of Kohaku-XL beta 5 with a focus on animation and illustration.

The showcase images are only a sample. Illustrious is one of the most entertaining models I've played with in a long time. It's responsive, accurate, and builds what you want. Negative prompts are generally unnecessary, but you may need them once in a while. Simulacrum's dataset further reinforces the stronger, quality-focused behavior, which improves overall quality, but you will still need a negative prompt or two every so often.

This LOHA model is entirely based on subject fixation and control. Everything from color to clothing, size, shape, realism, and quality has a slider that can be controlled using the various tags.
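One way to picture these "sliders" is as an ordered scale of tags where you pick a position. The sketch below is illustrative only: the two scales are copied from the v1.2 tag list in this post, while `slider_tag` and the 0.0 to 1.0 position mapping are hypothetical and not part of the LoHA itself.

```python
# Illustrative only: "sliders" modelled as ordered tag scales copied from the
# tag list in this post. slider_tag and the 0.0-1.0 mapping are hypothetical.
HAIR_LENGTH = ["short hair", "medium hair", "long hair"]
THIGHS = ["thin thighs", "thighs", "thick thighs"]


def slider_tag(scale: list, position: float) -> str:
    """Pick the tag at a relative position (0.0 = first, 1.0 = last) on a scale."""
    if not 0.0 <= position <= 1.0:
        raise ValueError("position must be between 0.0 and 1.0")
    # round() picks the nearest tag; exact ties round to the even index.
    index = round(position * (len(scale) - 1))
    return scale[index]


print(slider_tag(HAIR_LENGTH, 0.0))  # -> short hair
print(slider_tag(HAIR_LENGTH, 1.0))  # -> long hair
print(slider_tag(THIGHS, 0.5))       # -> thighs
```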

I'll prepare a full tag list soon. Suffice it to say, Illustrious will do pretty much anything Pony does, and a great deal more that you wouldn't expect, since Illustrious was trained on the Danbooru 2023 dataset. Illustrious is more similar to NaiV3 than anything I've sampled so far.

This LOHA was trained on the dataset formerly known as Consistency. The revamped versioning, captioning, and more detailed dataset make for a much more powerful iteration, which I've discussed in the past as Simulacrum.

The data was revamped a little and organized specifically to train both PDXL and Illustrious.

The augmentation and utility tags are similar to before;

from front

front view

from side

side view

from behind

rear view

from above

above view

from below

below view

Just like the earlier PDXL iterations, we have a solid interpretation of the female form and a solidified structure. Unlike the earlier versions, however, there is no artifacting. This version is clean, and even raises the baseline from cartoon to semi-real anime in Illustrious, though you can pretty much do that with only a couple of tags anyway.

This one introduces the male form, plus realistic, 3d, and anime variants as accessor tags.

The current version should be able to handle tons of poses, actions, character colors, gradients, angles, offsets, viewpoints, depths, rotations, superimposed positions, and a handful of specific outfits that were stubborn in PDXL and seem to work well in Illustrious too.

Should support;

skinny

tall

narrow waist

thin

petite

fat

voluptuous

thick

small

giant

Directly supports:

a multitude of offsets and angles

safe, questionable, explicit

realistic, 3d, anime

7 realistic synthetic models

faces

hair colors

eye colors

chest sizes

relative body sizes

arm angles

leg angles

many full poses

many arm positions

many leg positions

many types of clothing

many types of shoes

many types of hair

many types of latex <- I like latex.

jumpsuits, bodysuits, leotards, thighhighs, leggings, pants, yoga outfits, dresses, bikinis, slingshot swimsuits, skirts, casualwear, shoes, many more