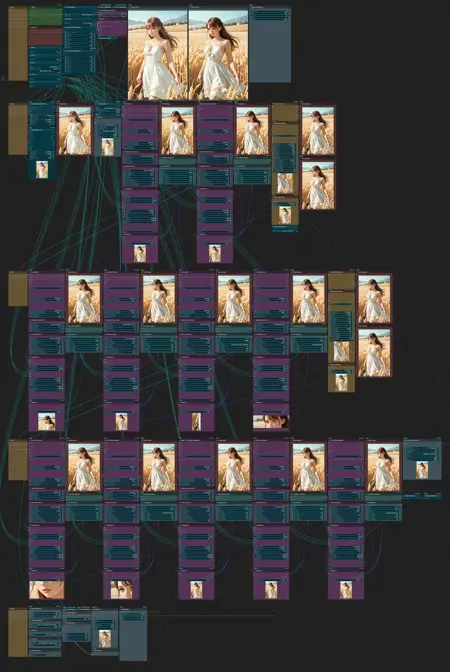

ComfyUI Pony/SDXL/Illustrious Easy-Use Detailer Workflow (now with video generation!)

If you are getting errors about the weights_only setting, add your detailer .pt files to the following location, one per line:

ComfyUI\user\default\ComfyUI-Impact-Subpack\model-whitelist.txt
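If you have several detector models, editing the whitelist by hand gets tedious. Below is a minimal sketch of a helper that appends entries to that file, one per line, skipping any that are already listed. The function name `add_to_whitelist` and the example file names are hypothetical, and whether the subpack wants bare file names or full paths isn't stated here, so use whichever form your error message shows.

```python
from pathlib import Path


def add_to_whitelist(whitelist_path, model_names):
    """Append model entries to the whitelist file, one per line.

    Entries already present in the file are skipped.
    Returns the list of entries that were actually added.
    """
    path = Path(whitelist_path)
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    seen = {line.strip() for line in text.splitlines() if line.strip()}

    added = []
    for name in model_names:
        name = name.strip()
        if name and name not in seen:
            seen.add(name)
            added.append(name)

    if added:
        # Create the folder if it is missing (assumption: safe to do so).
        path.parent.mkdir(parents=True, exist_ok=True)
        # Start on a fresh line if the file does not end with a newline.
        separator = "" if text == "" or text.endswith("\n") else "\n"
        with path.open("a", encoding="utf-8") as handle:
            handle.write(separator + "\n".join(added) + "\n")
    return added


# Example call (hypothetical file names, adjust the path to your install):
# add_to_whitelist(
#     r"ComfyUI\user\default\ComfyUI-Impact-Subpack\model-whitelist.txt",
#     ["my_face_detector.pt", "my_hand_detector.pt"],
# )
```

Running it a second time with the same names does nothing, so it's safe to keep in a setup script.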

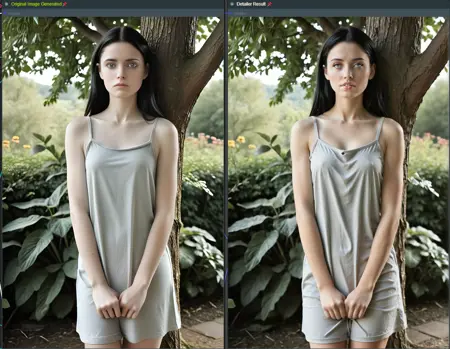

This is a workflow designed to generate portrait style images using the standard Stable Diffusion tools (ControlNet and Lora/LYCORIS) and add detail to them while using only a minimal number of different custom nodes. The workflow is kept simple and straightforward enough that it's easy to add and remove nodes based on your needs.

The idea is to generate a base image you like, and then let it run to improve it. That way you can abort images at the first step until you get one you want to move forward with, then walk away, confident that the end result will be a better version of that first step every time.

Welcome to v4 (PLEASE READ THE VERSION NOTES)

Video generation stage resources can be found here:

https://blog.comfy.org/p/wan21-video-model-native-support

-

Version 4 adds video generation based off the final output and the initial prompt using the Wan2.1 advanced video generation project.

The hardware resources required for this are very high, the process is slow, and getting good output requires a different thought process for your initial prompt than when just generating images.

I wouldn't recommend trying to do video generation on anything less than 16GB of VRAM and 48GB of system RAM. Even with those, it can take a lot longer to generate a 5 second video than it does to do the rest of the workflow, which is a pretty long process as it is.

Make sure your first prompt has a clear action involved, or Wan will randomly make the output do things that no human can, would or should do.

See the text block next to the video portion of the workflow for more information.

Also added are new global configuration value cards for seed, steps and cfg. This will allow you to change one value for them and have it be the one used throughout the image generation portion of the workflow. This means you can now easily use a static seed to fine tune a single image through the detailer steps without having to change the seed in each step. While I still don't recommend setting the cfg or steps any lower than they are set at, if you want to (say, because your hardware is taking too long at 30 steps), the option is there.

-

V2+

This workflow can be manipulated at each step, adding more details that are relevant at that level. This includes being able to add text prompts, adjust clip, and even add Loras at each detailer stage.

Adding a Lora to a detailer step does require special consideration, however. Due to the way the Ultralytics detection works, you are limited in what Loras you can use at each stage - in short, any modifications made by the Lora must fit within the detection window. In general, this means that any large modifications you want to make (such as increasing/decreasing the size of an element of your image) must be done one level above that element's detailer or at the top level. For example, if you wanted to apply a slider Lora that increased the size of the eyes of the person in your image, you would need to apply it to the face detailer, not the eye detailer (the eye detailer would instead be a better place to use a lora that adds details or realism to eyes). Similarly, a Lora that changes the size of breasts would need to go into the body detailer, or at the top level. I've left a few of my favorite Loras populated to give examples of what works well in each stage.

I have also added a LUT node to the workflow at the very top of the detailer stack. While this is completely optional, adding a LUT can be truly transformative to your work, especially when it comes to outdoor images where they can add a cinematic "pop" to skies and water. LUTs can be downloaded for free from https://freshluts.com/ - I recommend looking at Rec 709 gamma models, as they are intended for general image tweaking rather than output from specific cameras.

Lastly, I added a final upscaler pass using the excellent 1x_antialiasing upscale model to create a slightly cleaner final look. This doesn't make the final image much larger, it just functions as a clean-up pass.

Keep in mind that the more Loras you add, the larger your memory footprint is. If you are VRAM constrained, be careful how much you add.

Thanks, and I hope you enjoy the upgrades!

-

V1+

I designed this workflow to not hide anything in the pathing - the connections between nodes are straight with no extra blocks, and I used as few connections as I could get away with while keeping them as consistent as possible, with only 2-3 node links needed between steps.

I have focused on removing variables that make large changes to the original generated image, so you don't end up generating a base image then coming back several minutes later to find a result that doesn't look anything like it. There are also "save points" (image previews) along each step that allow you to pull a workable result from your work if one of the detailer steps goes awry.

The workflow as delivered is aimed at NSFW Pony/Pony Realism models, but will work fairly well with SDXL models as well without changes.

If you want to use it for SFW images or one of the detailers is consistently failing to give you the results you want, you can change the bbox detector to a different one, or simply bypass the detailers you don't need by re-routing the 2-3 link lines that come from the previous step past it.

Additional detailers can be added to the workflow by simply unpinning and cloning one of the existing detailer node trees and connecting it the same way the original was, taking care to place it in the appropriate section of the workflow. This means that full body items like clothing detailers should be placed before the first upscale, things the size of a head or face should be added before the second upscale, and small details like individual nipples should be done after the second upscale. Separating your ultralytics in this way gives you the most data to work with for each detailer pass while keeping unwanted results to a minimum.
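The placement rule above can be sketched as a small ordering function. This is only an illustration of the ordering, not anything that talks to ComfyUI: the scale labels (`full_body`, `head_or_face`, `small_detail`), the function name and the detailer names are all made up for the example.

```python
# Hypothetical scale labels, ordered from largest subject to smallest.
STAGE_ORDER = ["full_body", "head_or_face", "small_detail"]

# Which upscale pass follows each scale group (none after the last group).
UPSCALE_AFTER = {"full_body": "first upscale", "head_or_face": "second upscale"}


def order_detailers(detailers):
    """Return the run order for (name, scale) pairs, with upscales inserted.

    Full body detailers go before the first upscale, head/face sized ones
    before the second upscale, and small details after the second upscale.
    """
    for name, scale in detailers:
        if scale not in STAGE_ORDER:
            raise ValueError(f"unknown scale {scale!r} for detailer {name!r}")

    pipeline = []
    for scale in STAGE_ORDER:
        pipeline.extend(name for name, s in detailers if s == scale)
        if scale in UPSCALE_AFTER:
            pipeline.append(UPSCALE_AFTER[scale])
    return pipeline


if __name__ == "__main__":
    print(order_detailers([
        ("small detail detailer", "small_detail"),
        ("clothing detailer", "full_body"),
        ("face detailer", "head_or_face"),
    ]))
```

If you add a new detailer, decide which of the three size groups its detection target falls into first, then clone a detailer tree from that section.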

Note that this workflow is not a fast one; average complete generation time for a single image on my system (R7950X/64GB DDR5/RTX4090) is between 6 and 10 minutes. It's designed for high-VRAM systems (16GB or more, but it may work with 12GB GPUs), so if you have less than that your system will page into system RAM and run considerably slower or may not work at all. You can reduce the VRAM needed by changing the base image size, but if you do, make sure you also change the upscale tile sizes (the first upscale pass tile sizes are equal to your base image size, the second is 1.5x the base image size) and the resize width field in the Upscaled Original portion of the workflow to equal the width of your final output image. You may also wish to consider setting the guide_size and max_size of each detailer to something smaller, like 1024.
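As a quick worked example of the tile size rule above (first pass tiles equal the base image size, second pass tiles are 1.5x the base image size), here is a small sketch. The function name and the 832x1216 example size are my own choices for illustration; it doesn't compute the resize width, since that depends on your final output size.

```python
def upscale_tile_sizes(base_width, base_height):
    """Return the tile sizes for both upscale passes as (width, height).

    First pass: same as the base image size.
    Second pass: 1.5x the base image size (integer math, rounded down).
    """
    first_pass = (base_width, base_height)
    second_pass = ((base_width * 3) // 2, (base_height * 3) // 2)
    return {"first_pass": first_pass, "second_pass": second_pass}


if __name__ == "__main__":
    # Example base size only; use whatever your loader is set to.
    sizes = upscale_tile_sizes(832, 1216)
    print(sizes["first_pass"])   # (832, 1216)
    print(sizes["second_pass"])  # (1248, 1824)
```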

I haven't tested it, but it should also be able to be tweaked to work with SD1.5 by adjusting the parameters in the loader to be appropriate for SD1.5, changing the upscale tile sizes and resize width as above and setting the guide_size and max_size of each detailer to 512.

Changing the sampler you use with this workflow from DDIM to something else will work, but results will vary based on the one you choose - some blend better with the detailer than others, and others will cause changes to develop as the image passes through the workflow. The choice to use DDIM was deliberate: it causes the least variation of all the samplers I have tested and generates good output with realistic, semi-realistic, cartoon and other subject matter. If you decide to use something else, I recommend avoiding ancestral samplers, as when run through a multi-generation workflow like this one they tend to give results that can vary strongly over each pass. Whatever sampler you choose, keep it the same across the entire workflow to make sure that the results remain consistent. The same goes for the number of steps and the seed: if you change one, change it everywhere. For the CFG, keep it at or near 10 - lowering it will cause inconsistencies to develop through the workflow.
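If you'd rather check this than eyeball it, the consistency rules above can be sketched as a small checker. It assumes you have copied each stage's settings into a plain dict by hand (it does not read a ComfyUI workflow file), and it assumes ancestral samplers can be spotted by the word "ancestral" in their name, as in `euler_ancestral`. The stage names and the function name are hypothetical.

```python
def check_sampler_settings(stages):
    """Return a list of warnings for settings that differ between stages.

    `stages` maps a stage name to a dict with the keys
    "sampler_name", "steps" and "seed".
    """
    warnings = []

    # Sampler, steps and seed should be identical in every stage.
    for key in ("sampler_name", "steps", "seed"):
        values = {settings[key] for settings in stages.values()}
        if len(values) > 1:
            listed = ", ".join(sorted(str(v) for v in values))
            warnings.append(f"{key} differs between stages: {listed}")

    # Assumption: ancestral samplers have "ancestral" in their name.
    for stage, settings in stages.items():
        if "ancestral" in settings["sampler_name"]:
            warnings.append(f"{stage} uses an ancestral sampler")

    return warnings


if __name__ == "__main__":
    example = {
        "base image": {"sampler_name": "ddim", "steps": 30, "seed": 1234},
        "face detailer": {"sampler_name": "euler_ancestral", "steps": 20, "seed": 1234},
    }
    for line in check_sampler_settings(example):
        print(line)
```

An empty list means every stage agrees on sampler, steps and seed.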

Massive props to yolain for the incredible work being done on their easy-use nodes (https://github.com/yolain/ComfyUI-Easy-Use) that are the basis of this workflow. I've consistently been able to solve various difficulties in other ultralytics detailer nodes and level out any unwanted tone changes to the image using just these nodes - it's truly amazing stuff and more is being added every day at this point.

Other resources used:

ssitu's ComfyUI_UltimateSDUpscale (https://github.com/ssitu/ComfyUI_UltimateSDUpscale)

uwg's SwinIR upscale models (https://huggingface.co/uwg/upscaler/tree/main/SwinIR) - seriously, if you aren't using these, you should be.

Ultralytics detector models for use with this workflow can be found here on Civitai, on huggingface.co and other places. Since most of them are .pt files, they fall under the "use at your own risk" category and I won't directly link to them.