UndoMask_lora

Details

Download Files

About this version

Model description

※This LoRA is an early experimental work, so it is not necessarily superior to other methods.

reconstruction quality is not very high. Please consider this as an experiment to demonstrate what is possible.Inpainting with other models will likely achieve higher accuracy.

■If you're new to Kontext, try installing it using the instructions at the URL below.

It's well-documented and easy to set up.

https://docs.comfy.org/tutorials/flux/flux-1-kontext-dev

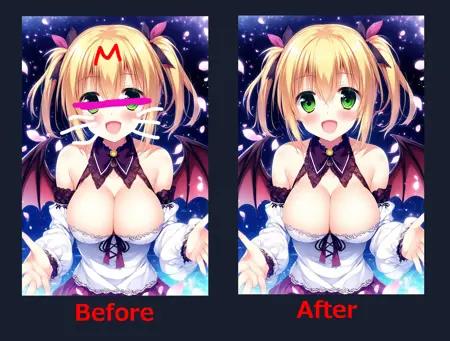

■It reconstructs masked areas artificially.

Kontext already has features to detect and restore such masks, but since it may not always work perfectly, this LoRA was made to support that.

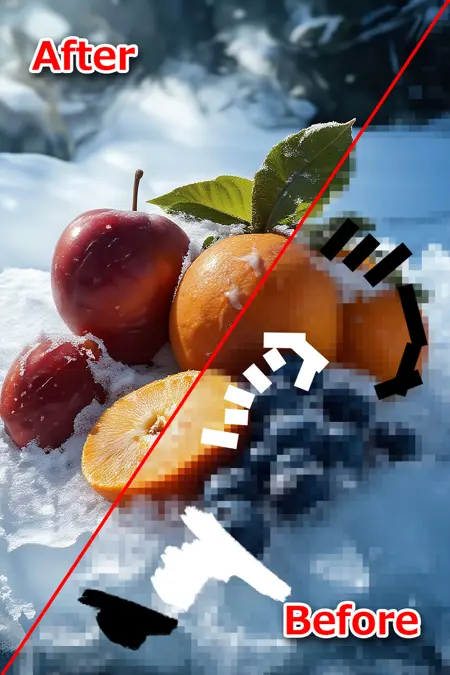

It improves detection of mosaics, fill-ins, and lines like those in the sample images.

Since copyrighted material can't be used, I used AI-generated fruit images as samples.

The sample image is easy to detect and reconstruct due to clear masks and surrounding context, but real masked images are often more complex and harder to handle.

■The prompt below has high mask detection and can be a good starting point.

It was also used as a caption during LoRA training.

Detect all forms of censorship in the image — including mosaic, blur, black or white bar censor, pixelation, filled areas, and identity censor — and accurately restore the censored regions. Prioritize faithful reconstruction of the original uncensored content based on logical structure and visual context. Ensure that the restored areas retain the intended texture, shape, and detail of the uncensored version.

■It works well as is, but try adjusting the prompt to better match your desired result.

However, prompt tuning alone has limits for improving detection and reconstruction quality.

■The reconstruction quality is not guaranteed to be high.

Thin masks like lines are easier to infer using surrounding context, but fully covered areas may fail to reconstruct correctly due to lack of detail or texture cues.

■If there are too many masked areas, the model may fail to recognize what should be restored.

In such cases, splitting the image and simplifying the masked area can help improve results.

Try cropping only the area around the part you want to remove.

Color correction can also help improve detection beforehand.

For example, scanned images may be faded or low-contrast, so adjusting contrast is effective.

Masks filled with dark gray or unclear tones can also be easier to detect if contrast is adjusted to make them pure black.

If print dots are visible, they may interfere with detection. In some cases, it may be better to redraw clean black mask lines yourself for better clarity.

■Also, since this LoRA is an early experiment, it may recover general shapes but struggles with realistic textures or fine details.

Apologies for the low-effort result—please understand its limitations.

For better results, using inpainting or 0.25-0.5 denoise i2i with another model might be more effective.

SD1.5 is lightweight and performs well even at high resolutions, making it a great choice for i2i tasks.

It works as an excellent refiner and can sometimes produce surprisingly better results than expected.

If you have enough VRAM, SDXL could also be a good option to consider.

■I used AI Toolkit to train this LoRA.

If you're interested in training, the developer has provided a tutorial at the URL below — give it a try!

I think you'll find it's easier than you might expect.

Dataset Details:

Following the tutorial, I trained the LoRA using 50 images.

■ These 50 unmasked images represent the final edited results and were used as the target side.

■ I manually added various mask types to likely masked areas using an image editor and used those as the control side.

Most are anime-style, but some real photos are included.

To increase diversity, I prepared a variety of scenarios.