Sexiam - Img2img with Upscale

Img2img Workflow (ComfyUI)

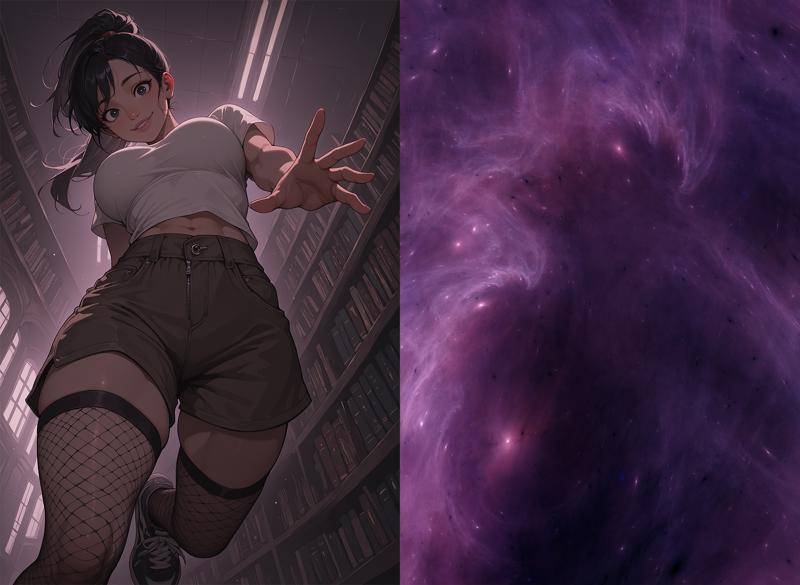

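This workflow lets you combine a base image with a second image (such as a nebula, a fractal, or another abstract texture) to produce new compositions that are hard to get from text-to-image alone. Instead of relying only on the prompt, the setup uses a "driver image" to steer the model toward unexpected poses, angles, and layouts, while still respecting your text prompt and style settings.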

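This approach is especially useful for:

More dynamic poses (without manual inpainting or pose-adjustment tools).

Unique compositions and camera angles.

Pushing your characters or scenes into new territory while keeping them consistent.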

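Use Cases

Generating new compositions from an existing base image.

Keeping characters consistent while experimenting with different layouts.

Using abstract images (nebulae, textures, fractals, clouds, etc.) as latent creative drivers.

Built-in hires fix and upscaling, so output images come out sharp, detailed, and production-ready.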

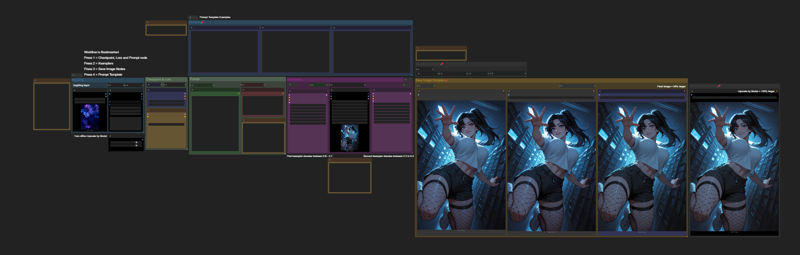

Step-by-Step Breakdown

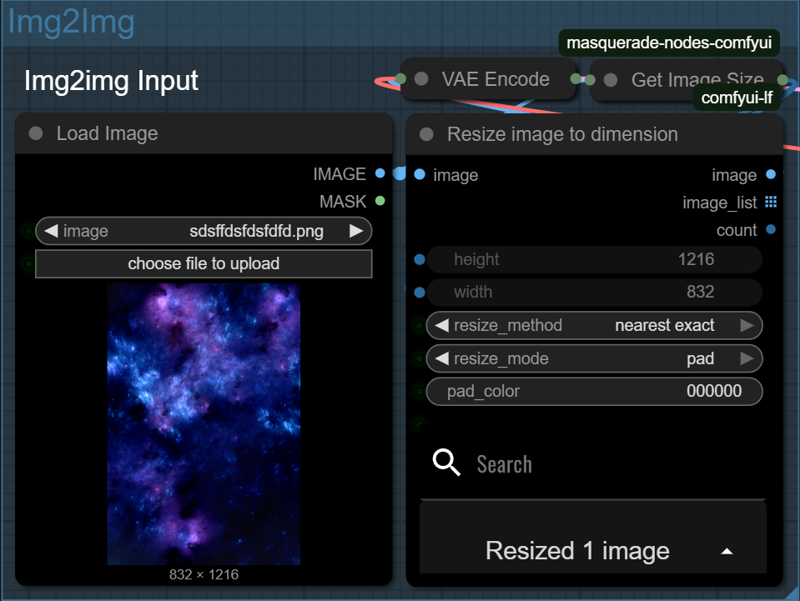

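1. Load the Driver Image

Start by loading the image you want to use as a guide. This can be a nebula, a fractal, a painting, or any image with interesting shapes and color flow. It determines how your final result is composed.

The node reads the input image's width and height automatically and applies them to the generation.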
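To make that size hand-off concrete, here is a minimal Python sketch of how an image's width and height translate into the latent the samplers actually work on. It assumes the standard SDXL VAE (8× spatial downsampling, 4 latent channels). `latent_shape` is a hypothetical helper, not part of ComfyUI, and it simply rejects sizes that are not multiples of 8 rather than modeling how any particular node handles them.

```python
# Hypothetical helper: map an input image size to the SDXL latent shape.
# Assumes the standard SDXL VAE: 8x spatial downsampling, 4 latent channels.

VAE_SCALE = 8        # SDXL VAE spatial downsampling factor
LATENT_CHANNELS = 4  # SDXL latent channels


def latent_shape(width: int, height: int, batch: int = 1) -> tuple[int, int, int, int]:
    """Return the (batch, channels, height, width) latent shape for an image."""
    if width % VAE_SCALE or height % VAE_SCALE:
        # Kept strict for simplicity; real nodes may crop or pad instead.
        raise ValueError(f"{width}x{height} is not a multiple of {VAE_SCALE}")
    return (batch, LATENT_CHANNELS, height // VAE_SCALE, width // VAE_SCALE)


if __name__ == "__main__":
    print(latent_shape(1216, 832))  # (1, 4, 104, 152)
```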

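For best results with img2img, use an input image at a standard SDXL resolution, cropped to roughly 1 megapixel. The sizes below can serve as a reference (the ratio labels are approximate):

1:1 - 1024×1024 - Square

3:2 - 1216×832 - Landscape

4:3 - 1152×896 - Landscape

16:9 - 1344×768 - Widescreen

9:16 - 768×1344 - Portrait

2:3 - 832×1216 - Portrait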
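If your source image does not match one of these sizes, the choice and the crop can be worked out as follows. This is a minimal sketch: `nearest_sdxl_size` and `center_crop_box` are hypothetical helpers that pick the listed size with the closest aspect ratio and return the largest centered crop at that ratio. The workflow itself does not include them.

```python
# Hypothetical helpers: choose the closest SDXL size from the reference list and
# compute a centered crop with that aspect ratio (integer pixel coordinates).

SDXL_SIZES = [
    (1024, 1024),  # 1:1 square
    (1216, 832),   # ~3:2 landscape
    (1152, 896),   # ~4:3 landscape
    (1344, 768),   # ~16:9 widescreen
    (768, 1344),   # ~9:16 portrait
    (832, 1216),   # ~2:3 portrait
]


def nearest_sdxl_size(width: int, height: int) -> tuple[int, int]:
    """Return the listed size whose aspect ratio is closest to width / height."""
    aspect = width / height
    return min(SDXL_SIZES, key=lambda size: abs(size[0] / size[1] - aspect))


def center_crop_box(width: int, height: int,
                    target: tuple[int, int]) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest centered crop at the target ratio."""
    tw, th = target
    if width * th > height * tw:
        # Source is wider than the target ratio: keep full height, trim the sides.
        crop_w, crop_h = height * tw // th, height
    else:
        # Source is taller (or equal): keep full width, trim top and bottom.
        crop_w, crop_h = width, width * th // tw
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return (left, top, left + crop_w, top + crop_h)


if __name__ == "__main__":
    size = nearest_sdxl_size(4000, 3000)            # a 4:3 photo
    print(size, center_crop_box(4000, 3000, size))  # (1152, 896) (71, 0, 3928, 3000)
```

With Pillow, for instance, `img.crop(box).resize(size)` would then give you an image at the chosen SDXL size.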

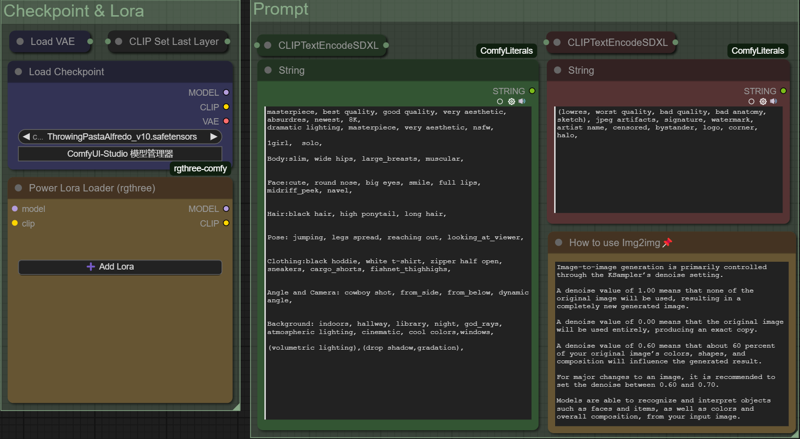

3. Add Prompts

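Now describe what you want to see: your subject, style, lighting, and so on. You can also write a negative prompt to avoid problems such as blurry faces, extra limbs, or text.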
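One way to keep prompts consistent is to build them from named parts: subject, mood, lighting, and style, mirroring the areas the workflow's prompt template covers. The sketch below is hypothetical. The `PromptParts` class, its field names, and the comma-separated layout are illustrative assumptions (the default negative terms come from the examples above), not the workflow's own prompt format.

```python
# Hypothetical prompt builder: joins subject, mood, lighting, and style into a
# positive prompt and keeps negative terms in a separate list.
from dataclasses import dataclass, field


@dataclass
class PromptParts:
    subject: str
    mood: str = ""
    lighting: str = ""
    style: str = ""
    negative: list[str] = field(
        default_factory=lambda: ["blurry face", "extra limbs", "text"])

    def positive_prompt(self) -> str:
        # Skip empty fields so the prompt has no dangling commas.
        parts = [self.subject, self.mood, self.lighting, self.style]
        return ", ".join(p for p in parts if p)

    def negative_prompt(self) -> str:
        return ", ".join(self.negative)


if __name__ == "__main__":
    parts = PromptParts("a knight in ornate armor", mood="serene",
                        lighting="soft rim light", style="digital painting")
    print(parts.positive_prompt())  # a knight in ornate armor, serene, soft rim light, digital painting
    print(parts.negative_prompt())  # blurry face, extra limbs, text
```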

The SDXL base model and VAE are already loaded. Add a LoRA if you want more control over style.
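For context on what adding a LoRA does: in the standard LoRA formulation, each patched weight matrix $W \in \mathbb{R}^{d \times k}$ receives a low-rank update built from two small matrices of rank $r$. That update is scaled by the LoRA's $\alpha / r$ factor and by the strength you set on the loader, written $s$ below. In ComfyUI's LoraLoader node, this corresponds to `strength_model` for the diffusion model and `strength_clip` for the text encoder. A strength of 0 leaves the base model unchanged.

$$
W' = W + s \cdot \frac{\alpha}{r} \, B A, \qquad B \in \mathbb{R}^{d \times r}, \quad A \in \mathbb{R}^{r \times k}, \quad r \ll \min(d, k)
$$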

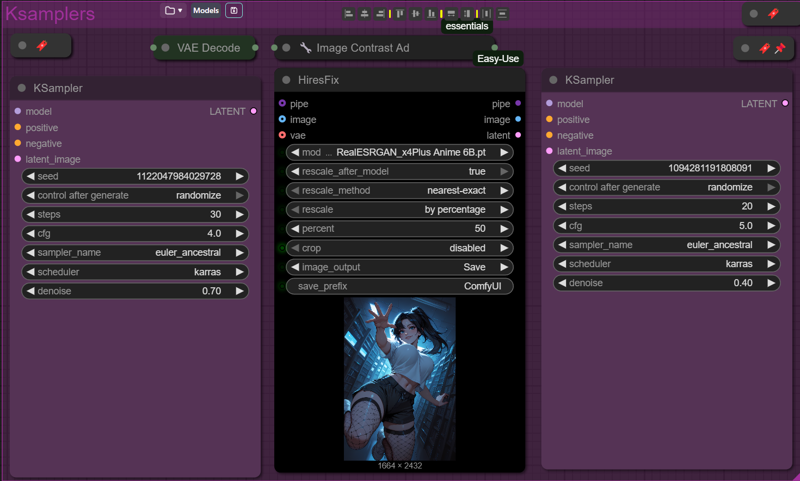

4. Sampling and Upscaling: Building and Refining the Image

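This step handles both the base composition and the detail refinement, using two sampling passes with a built-in upscale between them.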

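In the first pass, the KSampler uses your latent input and prompt to produce a strong composition. A denoise value of about 0.6–0.7 is used here. That is enough to change the image substantially while keeping its flow and layout.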
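As a rough mental model of the denoise setting, the simplified Python sketch below shows one common way img2img samplers apply it. The sampler builds a longer noise schedule and runs only its last `steps` steps, so sampling starts from a partially noised copy of the input latent instead of pure noise. To our understanding, ComfyUI's KSampler works along these lines, but treat `denoise_plan` as a hypothetical illustration. Exact rounding and schedules may differ.

```python
# Hypothetical illustration of how a denoise value < 1.0 shortens sampling:
# a schedule of int(steps / denoise) steps is built, and only its final `steps`
# (least noisy) steps are run, starting from the partially noised input latent.


def denoise_plan(steps: int, denoise: float) -> tuple[int, int]:
    """Return (full_schedule_steps, skipped_noisiest_steps) for one sampler pass."""
    if not 0.0 < denoise <= 1.0:
        raise ValueError("denoise must be in (0, 1]")
    full = int(steps / denoise)
    return full, full - steps


if __name__ == "__main__":
    print(denoise_plan(20, 0.5))   # (40, 20): sampling starts halfway down the schedule
    print(denoise_plan(30, 0.65))  # (46, 16): a first pass in the suggested 0.6-0.7 range
```

A higher denoise skips fewer of the noisy early steps, which is why it changes the driver image more.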

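Next, the image is upscaled by 50% (to 1.5× its size). This is more than a simple resize. Through the hires-fix process, the model adds new detail at the higher resolution, sharpening the image and giving it more pixels to work with.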
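The arithmetic of the 50% upscale is easy to check. Scaling each side by 1.5 multiplies the pixel count by 2.25, so a roughly 1-megapixel input becomes roughly 2.3 megapixels. The sketch below computes the new size for some of the SDXL sizes listed earlier. `upscaled_size` is a hypothetical helper. Snapping to a multiple of 8 is an assumption for illustration: it keeps the result mapping cleanly onto an SDXL latent, and every listed size already lands on a multiple of 8 at 1.5×.

```python
# Hypothetical helper: size after a 1.5x (50%) upscale, snapped to a multiple
# of 8 so it divides evenly by the SDXL VAE's downsampling factor.


def upscaled_size(width: int, height: int, scale: float = 1.5,
                  multiple: int = 8) -> tuple[int, int]:
    def snap(value: float) -> int:
        # Round to the nearest multiple, but never below one multiple.
        return max(multiple, round(value / multiple) * multiple)

    return snap(width * scale), snap(height * scale)


if __name__ == "__main__":
    for w, h in [(1024, 1024), (1216, 832), (1344, 768)]:
        nw, nh = upscaled_size(w, h)
        print(f"{w}x{h} -> {nw}x{nh} ({nw * nh / 1e6:.2f} MP)")
```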

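Finally, a second KSampler pass at a lower denoise value (about 0.4) refines the image further, adding texture and fine adjustments while preserving its structure.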
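To see how the two passes and the upscale connect, here is a hypothetical Python sketch that writes the layout as a ComfyUI API-format ("prompt") dictionary. It uses core node types (`CheckpointLoaderSimple`, `LoadImage`, `CLIPTextEncode`, `VAEEncode`, `KSampler`, `LatentUpscaleBy`, `VAEDecode`, `SaveImage`). It is not the published workflow. Node IDs, the checkpoint and image file names, seeds, steps, CFG, sampler settings, and the choice of a latent upscale for the 1.5× step are all assumptions.

```python
# Hypothetical two-pass img2img + 1.5x upscale graph in ComfyUI API format.
# Each link is [source_node_id, output_index]; CheckpointLoaderSimple outputs
# MODEL (0), CLIP (1), and VAE (2). All concrete values are assumptions.
import json


def build_two_pass_graph(image_name: str, positive: str, negative: str,
                         first_denoise: float = 0.65,
                         second_denoise: float = 0.4) -> dict:
    def ksampler(latent: list, denoise: float, seed: int) -> dict:
        return {
            "class_type": "KSampler",
            "inputs": {
                "model": ["1", 0], "positive": ["3", 0], "negative": ["4", 0],
                "latent_image": latent, "seed": seed, "steps": 30, "cfg": 6.0,
                "sampler_name": "euler", "scheduler": "normal", "denoise": denoise,
            },
        }

    return {
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": "sd_xl_base_1.0.safetensors"}},  # assumed file
        "2": {"class_type": "LoadImage", "inputs": {"image": image_name}},
        "3": {"class_type": "CLIPTextEncode", "inputs": {"text": positive, "clip": ["1", 1]}},
        "4": {"class_type": "CLIPTextEncode", "inputs": {"text": negative, "clip": ["1", 1]}},
        # Encode the driver image into a latent; its size sets the output size.
        "5": {"class_type": "VAEEncode", "inputs": {"pixels": ["2", 0], "vae": ["1", 2]}},
        # Pass 1: build the composition from the driver image.
        "6": ksampler(["5", 0], first_denoise, seed=1),
        # 1.5x upscale between passes (a latent upscale is assumed here).
        "7": {"class_type": "LatentUpscaleBy",
              "inputs": {"samples": ["6", 0], "upscale_method": "bilinear", "scale_by": 1.5}},
        # Pass 2: lower denoise refines detail while keeping the structure.
        "8": ksampler(["7", 0], second_denoise, seed=2),
        "9": {"class_type": "VAEDecode", "inputs": {"samples": ["8", 0], "vae": ["1", 2]}},
        "10": {"class_type": "SaveImage",
               "inputs": {"images": ["9", 0], "filename_prefix": "img2img_upscale"}},
    }


if __name__ == "__main__":
    graph = build_two_pass_graph("driver.png", "a lone astronaut, cinematic lighting",
                                 "blurry face, extra limbs, text")
    print(json.dumps({"prompt": graph}, indent=2))
```

Wrapping the dictionary as `{"prompt": graph}` gives a payload of the shape a running ComfyUI server accepts on its `/prompt` endpoint. The file names must match files that actually exist in your ComfyUI folders.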

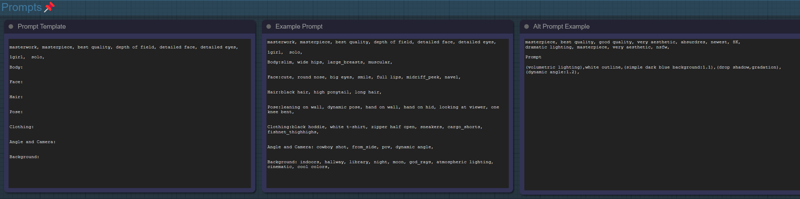

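Labels and Prompt Template

The workflow is fully annotated for ease of use. Each section includes notes explaining what its nodes do, how they work, or which settings to adjust, so you can troubleshoot or customize the workflow without reverse-engineering it.

The workflow also includes a prompt template that guides your text input across subject, mood, lighting, and style, giving you a clear, consistent, well-structured starting point for your prompts.

Below are example images you can try with this workflow, at 832×1216 or 1216×832: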