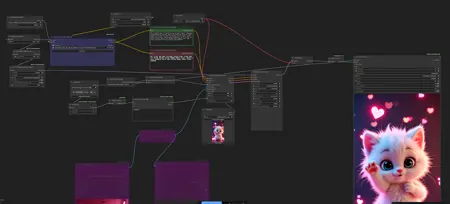

WAN 2.2 S2V Lipsync Workflow with SageAttention + BlockSwap + GGUF (includes Upscale and Interpolation)

Model description

My TG Channel - https://t.me/StefanFalkokAI

My TG Chat - https://t.me/+y4R5JybDZcFjMjFi

Hi! Here is my working ComfyUI workflow for Wan 2.2 S2V (audio-driven) video generation.

You need the Wan 2.2 S2V model (https://huggingface.co/Kijai/WanVideo_comfy_fp8_scaled/tree/main/S2V), the text encoder/clip (https://huggingface.co/Kijai/WanVideo_comfy/blob/main/umt5-xxl-enc-bf16.safetensors), the audio encoder (https://huggingface.co/Comfy-Org/Wan_2.2_ComfyUI_Repackaged/tree/main/split_files/audio_encoders) and the VAE (Wan 2.1 VAE).

GGUF versions of the model - https://huggingface.co/QuantStack/Wan2.2-S2V-14B-GGUF/tree/main

The workflow also includes the Lightx2v LoRA (https://huggingface.co/Kijai/WanVideo_comfy/tree/main/Lightx2v) for better quality and faster generation.

I recommend setting the LoRA strength to 1.5 for the best results.
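For context, in the standard LoRA formulation the strength value scales the low-rank update added to each patched weight matrix. The notation below is the usual LoRA notation, not something taken from this workflow: $W$ is the original weight, $B A$ is the learned rank-$r$ update, $\alpha$ is the LoRA's alpha, and $s$ is the strength set in the loader.

$$
W' = W + s \cdot \frac{\alpha}{r}\, B A
$$

With $s = 1.5$ the LoRA's contribution is 1.5 times what it is at the default strength of 1.0, while the base weights $W$ are unchanged.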

For faster generation you also need SageAttention 2.2.0 with Triton (https://huggingface.co/Kijai/PrecompiledWheels/tree/main) and Torch 2.7.0 or newer (https://github.com/pytorch/pytorch/releases). You also need to download and install CUDA 12.8 (https://developer.nvidia.com/cuda-12-8-0-download-archive) and Visual Studio with the C++ build tools (https://visualstudio.microsoft.com/downloads/).
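A minimal Python sketch for checking these requirements, assuming you run it with ComfyUI's embedded interpreter (for example `python_embeded\python.exe check_env.py`, where `check_env.py` is a hypothetical file name). The 2.7.0 minimum comes from the text above. Note that `torch.version.cuda` reports the CUDA version PyTorch was built against (e.g. a cu128 build), which is not necessarily the toolkit you installed separately.

```python
import importlib.util
import sys


def parse_version(version: str) -> tuple[int, ...]:
    """Turn a version like '2.7.1+cu128' into (2, 7, 1), ignoring the '+' local part."""
    core = version.split("+")[0]
    parts = []
    for piece in core.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:  # stop at pieces like 'dev2025...'
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(version: str, minimum: tuple[int, int, int]) -> bool:
    """Compare the first three numeric components, padding short versions with zeros."""
    parsed = parse_version(version)
    parsed = parsed + (0,) * (3 - len(parsed))
    return parsed[:3] >= minimum


def check_environment() -> dict[str, str]:
    report = {"python": sys.version.split()[0]}
    try:
        import torch

        report["torch"] = torch.__version__
        report["torch_ok"] = str(meets_minimum(torch.__version__, (2, 7, 0)))
        report["torch_cuda_build"] = str(torch.version.cuda)
        report["cuda_available"] = str(torch.cuda.is_available())
    except ImportError:
        report["torch"] = "not installed"
    # Only checks that the packages are importable, not their versions
    for name in ("triton", "sageattention"):
        report[name] = "installed" if importlib.util.find_spec(name) else "missing"
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

If `torch_ok` prints `False` or `sageattention` prints `missing`, the accelerated path in the workflow will not be available.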

Instructions for installing SageAttention (for version 1.0.6). To install SageAttention 2.2.0, rename the .whl file to .zip and copy the folders from inside the .zip into ComfyUI\python_embeded\Lib\site-packages.
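A .whl file is a zip archive, which is why the rename-and-copy step works. Here is a minimal Python sketch of the same step using the standard `zipfile` module. The script name `extract_wheel.py` and the paths are hypothetical, and like the manual method it does not check dependencies or Python/CUDA compatibility tags the way pip does. Running `python_embeded\python.exe -m pip install <file>.whl` normally does the same job and also records the install for pip.

```python
import sys
import zipfile
from pathlib import Path


def extract_wheel(wheel_path: str, site_packages: str) -> list[str]:
    """Copy the contents of a .whl (a zip archive) into a site-packages folder.

    Mirrors the manual 'rename to .zip and copy the folders' step.
    Returns the top-level folders/files that were written.
    """
    wheel = Path(wheel_path)
    target = Path(site_packages)
    if wheel.suffix != ".whl":
        raise ValueError(f"expected a .whl file, got {wheel.name}")
    if not target.is_dir():
        raise FileNotFoundError(f"site-packages folder not found: {target}")
    with zipfile.ZipFile(wheel) as zf:
        names = zf.namelist()
        zf.extractall(target)  # no rename needed: zipfile reads .whl directly
    return sorted({name.split("/")[0] for name in names})


if __name__ == "__main__":
    # Usage: python extract_wheel.py <path-to-wheel> <path-to-site-packages>
    if len(sys.argv) == 3:
        for item in extract_wheel(sys.argv[1], sys.argv[2]):
            print("installed:", item)
```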

Then just load your audio and image, write a prompt, and enjoy!

Leave a comment if you run into trouble or find a problem with the workflow.