Video Media Toolkit: Streamline Downloads, Frame Extraction, Audio Separation & AI Upscaling for Stable Diffusion Workflows | Utility Tool v6.0


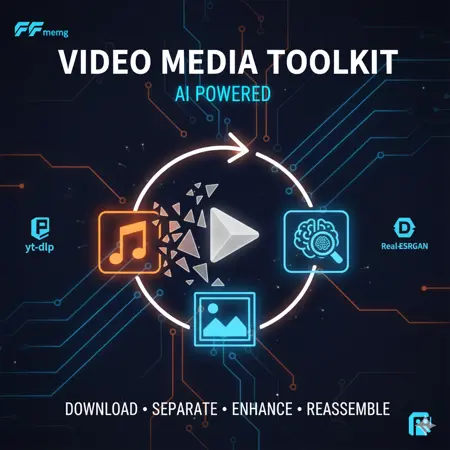


Overview

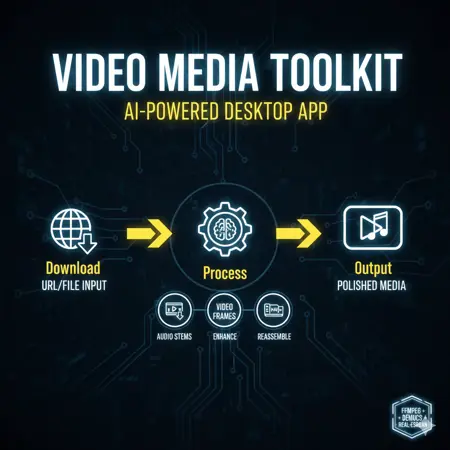

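Video Media Toolkit v6 is a free, open-source desktop utility for Stable Diffusion creators, trainers, and video-to-image enthusiasts that streamlines AI art pipelines. This all-in-one Windows app handles media ingestion, decomposition, enhancement, and reconstruction. It is suited to extracting high-quality frames from YouTube/Reddit videos for LoRA training, separating vocals and instruments for audio-reactive generation, and upscaling low-resolution assets for ComfyUI or Automatic1111 workflows.

Whether you are preparing datasets for Flux/Stable Diffusion fine-tuning or producing dynamic video inputs for AnimateDiff extensions, the tool automates the tedious work using yt-dlp, FFmpeg, Demucs, and Real-ESRGAN under the hood, saving hours of manual effort. On NVIDIA hardware, GPU acceleration speeds up processing.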

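Key benefits:

Batch downloads and queueing: Pull video/audio from URLs or local files and output MP4/MP3 or frame sequences (JPG/PNG), ready for dataset preparation.

AI-powered decomposition: Separate clean audio stems (vocals, drums, and so on) or extract frames for training. Well suited to curating NSFW/SFW content.

Enhancement and reconstruction: Apply denoising, sharpening, upscaling (2x-4x), and stabilization to produce high-quality video output.

Workflow integration: Export in forms compatible with A1111, ComfyUI, Kohya_ss, or Hugging Face datasets. No more hand-written FFmpeg scripts!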

Tested on Windows 10/11. Requires Python 3.8+. Install size is about 500MB (including torch with CUDA support).

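Features

Download tab: media sourcing and extraction

Input: URLs (YouTube, Reddit media, direct links) or local files.

Output: MP4 (enhanced video), MP3 (audio), or a frame folder (for example, frame_0001.png for SD training).

Enhancement options: resolution (360p-8K), CRF quality, FPS control, sharpening, color correction, deinterlacing, and denoising.

Audio options: noise reduction and volume normalization, useful for producing clean stems.

Queue system: add multiple jobs, process them sequentially, auto-delete sources, and pass custom yt-dlp/FFmpeg arguments.

Pro tip: extract 1000+ frames from a 5-minute video within seconds; Reddit wrappers are handled automatically.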
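For readers who want to see roughly what the Download tab automates, here is a minimal sketch that builds a yt-dlp command and an FFmpeg frame-extraction command. The toolkit's actual arguments are not shown in this description, so the function names, the format selector, the default sampling rate, and the folder layout are all assumptions made for illustration.

```python
from pathlib import Path
from typing import List


def build_download_command(url: str, out_dir: str) -> List[str]:
    """Build a yt-dlp command that saves the best available streams as MP4.

    Hypothetical helper: the toolkit's real arguments may differ.
    """
    return [
        "yt-dlp",
        "-f", "bv*+ba/b",                # best video + best audio, else best single file
        "--merge-output-format", "mp4",  # merge the selected streams into an MP4 container
        "-o", str(Path(out_dir) / "%(title)s.%(ext)s"),  # yt-dlp output template
        url,
    ]


def build_frame_command(video: str, frames_dir: str, fps: float = 4) -> List[str]:
    """Build an FFmpeg command that writes numbered PNG frames at a fixed rate."""
    pattern = Path(frames_dir) / "frame_%04d.png"  # frame_0001.png, frame_0002.png, ...
    return [
        "ffmpeg",
        "-i", video,
        "-vf", f"fps={fps}",  # sample this many frames per second of video
        str(pattern),
    ]


if __name__ == "__main__":
    # Print the commands instead of running them, so nothing is downloaded here.
    print(" ".join(build_download_command("https://example.com/clip", "downloads")))
    print(" ".join(build_frame_command("downloads/clip.mp4", "frames")))
```

At the assumed rate of 4 frames per second, a 5-minute (300-second) clip yields about 1200 frames, which fits within the four-digit numbering. Each list can be passed to `subprocess.run` once yt-dlp and FFmpeg are on PATH.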

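Reconstruct tab: rebuild video from frames

Input: a frame folder (for example, output from the Download tab or from external editing).

Options: set FPS, merge audio, and apply minterpolate (motion smoothing), tmix (frame blending), deshake, and deflicker.

Output: MP4 with custom FFmpeg filters applied. Export stabilized clips for AnimateDiff or video LoRAs.

Example: upscale frames → reconstruct them into a 4K training video.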
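The reconstruction step can be sketched as an FFmpeg call that reads a numbered image sequence, optionally adds an audio track, and chains video filters. This is an illustrative sketch only: the helper name, the libx264/CRF 18 encoding defaults, and the AAC audio codec are assumptions, not the toolkit's documented settings.

```python
from typing import List, Optional


def build_reconstruct_command(
    frames_pattern: str,
    output: str,
    fps: int = 24,
    audio: Optional[str] = None,
    filters: Optional[List[str]] = None,
) -> List[str]:
    """Build an FFmpeg command that turns numbered frames into an MP4.

    Hypothetical helper; encoder settings are assumed defaults.
    """
    # -framerate sets the rate at which the image sequence is read.
    cmd = ["ffmpeg", "-framerate", str(fps), "-i", frames_pattern]
    if audio:
        cmd += ["-i", audio]  # second input: the audio track to merge
    if filters:
        # FFmpeg chains filters with commas, e.g. "deshake,deflicker".
        cmd += ["-vf", ",".join(filters)]
    # yuv420p keeps the file playable in most players.
    cmd += ["-c:v", "libx264", "-crf", "18", "-pix_fmt", "yuv420p"]
    if audio:
        # Stop at the shorter of the video and audio streams.
        cmd += ["-c:a", "aac", "-shortest"]
    cmd.append(output)
    return cmd


if __name__ == "__main__":
    command = build_reconstruct_command(
        "frames/frame_%04d.png",
        "rebuilt.mp4",
        fps=24,
        audio="audio.mp3",
        filters=["deshake", "deflicker", "minterpolate=fps=60"],
    )
    print(" ".join(command))
```

Filter order matters: stabilizing and deflickering before motion interpolation means the interpolated frames are built from already-cleaned input.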

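Audio tab: Demucs-based stem separation

Input: downloaded MP3/WAV/FLAC.

Models: htdemucs, mdx_extra, and others (GPU/CPU modes).

Output: separated tracks (vocals, bass, drums, and so on) saved to subfolders, usable for audio-driven SD prompts.

Modes: full 6-stem or 2-stem (vocals + instrumental) for quick remixing.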
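As a rough illustration of the GPU/CPU modes and the 2-stem option, the sketch below picks a device and assembles a Demucs command line. The helper names are hypothetical, and how the toolkit itself invokes Demucs is not described here; only the `-n`, `-d`, `-o`, and `--two-stems` options of the Demucs CLI are relied on.

```python
from typing import List, Optional


def pick_device() -> str:
    """Return "cuda" when PyTorch reports a usable GPU, otherwise "cpu"."""
    try:
        import torch  # optional: fall back to CPU when torch is not installed
    except ImportError:
        return "cpu"
    return "cuda" if torch.cuda.is_available() else "cpu"


def build_demucs_command(
    track: str,
    out_dir: str,
    model: str = "htdemucs",
    two_stems: bool = False,
    device: Optional[str] = None,
) -> List[str]:
    """Build a Demucs CLI call (hypothetical helper, not the toolkit's code)."""
    cmd = ["demucs", "-n", model, "-d", device or pick_device(), "-o", out_dir]
    if two_stems:
        # Split into vocals and everything else instead of all stems.
        cmd += ["--two-stems", "vocals"]
    cmd.append(track)
    return cmd


if __name__ == "__main__":
    print(" ".join(build_demucs_command("song.mp3", "stems", two_stems=True)))
```

Passing `device="cpu"` explicitly is a simple way to rule out GPU detection problems when a separation job fails.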

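Upscale tab: Real-ESRGAN frame enhancement

Input: an image folder (for example, extracted frames).

Scale factor: 2x/3x/4x, for producing high-resolution assets for SD.

Output: a batch-upscaled folder. Enhancing low-resolution video to 4K improves model training quality.

GPU acceleration: Torch-based, with CPU fallback.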
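Whether a given source actually reaches 4K depends on its starting resolution and the chosen factor. The short sketch below works through that arithmetic; it assumes "4K" means 3840x2160 (UHD) and uses hypothetical helper names, and it does not call Real-ESRGAN itself.

```python
from typing import Tuple

UHD_4K = (3840, 2160)  # assumption: "4K" here means UHD


def upscaled_size(width: int, height: int, scale: int) -> Tuple[int, int]:
    """Return the output size for one of the 2x/3x/4x factors."""
    if scale not in (2, 3, 4):
        raise ValueError("scale must be 2, 3, or 4")
    return width * scale, height * scale


def reaches_4k(width: int, height: int, scale: int) -> bool:
    """True when the upscaled frame is at least 3840x2160."""
    out_w, out_h = upscaled_size(width, height, scale)
    return out_w >= UHD_4K[0] and out_h >= UHD_4K[1]


if __name__ == "__main__":
    for source, scale in [((960, 540), 4), ((1280, 720), 3), ((1280, 720), 2)]:
        print(source, f"x{scale}", upscaled_size(*source, scale), reaches_4k(*source, scale))
```

For example, 960x540 frames need the 4x factor to reach 3840x2160, while 1280x720 frames get there at 3x and fall short at 2x (2560x1440).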

Additional utilities:

Persistent output root folder selection.

Real-time logs plus export to file (logs/ directory).

Dependency tester (FFmpeg, yt-dlp, Demucs).

High-contrast dark UI for long sessions.

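Installation and setup

Download: get the ZIP file from the GitHub repository (or from the attachment here).

Run the installer: double-click video_media_installer.bat. It automatically installs PySide6, torch (with CUDA when detected), Demucs, Real-ESRGAN, and other dependencies, and also upgrades pip.

- Manual fix: if a [WARNING] appears for FFmpeg/yt-dlp, download them from ffmpeg.org / the yt-dlp GitHub and add them to PATH, or use hard-coded paths.

Download the model: place RealESRGAN_x4plus.pth in /models/ for upscaling (link in the README).

Launch: double-click launch_video_toolkit_v6.bat. Set the output folder on first run.

Test: click the "Test Dependencies" button and confirm everything reports [OK].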
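If the app will not start, the same kind of check can be run by hand. The sketch below looks for each command-line tool on PATH with the standard library; it is a stand-in for the built-in tester, not its actual code, and the status labels simply mirror the ones mentioned above.

```python
import shutil
from typing import Dict, Iterable


def check_dependencies(
    tools: Iterable[str] = ("ffmpeg", "yt-dlp", "demucs"),
) -> Dict[str, str]:
    """Report whether each executable can be found on PATH."""
    # shutil.which returns the full path to the executable, or None if missing.
    return {name: "[OK]" if shutil.which(name) else "[WARNING]" for name in tools}


if __name__ == "__main__":
    for tool, status in check_dependencies().items():
        print(f"{status} {tool}")
```

A [WARNING] for a tool that is installed usually means its folder is missing from PATH in the shell that launched Python.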

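Compatibility notes:

Windows-focused: .bat launchers for easy installation; on Linux/macOS, run the Python script manually.

SD integration: frames are exported in numbered order (for example, %04d.png) and can be imported directly into Kohya or DreamBooth.

Not an A1111 extension: it is a standalone app. Use it alongside ControlNet for video-to-image pipelines.

Warnings: large files need 8GB+ of RAM; a GPU is recommended for Demucs (CPU is slow). Handle NSFW content according to the source's policies.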
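Frames that have been culled or edited outside the toolkit often end up with gaps in their numbering. As a hedged example of restoring a %04d.png sequence before import, the sketch below renames every PNG in a folder in sorted order. The function name is hypothetical, and it assumes Python 3.8+ (where `Path.rename` returns the new path) and file names that already sort correctly, such as zero-padded ones.

```python
from pathlib import Path
from typing import List


def renumber_frames(folder: str, pattern: str = "%04d.png", start: int = 1) -> List[Path]:
    """Rename the PNG files in `folder` to a gap-free numbered sequence."""
    root = Path(folder)
    # Sorting is lexicographic, so zero-padded source names keep their order.
    frames = sorted(p for p in root.iterdir() if p.suffix.lower() == ".png")
    # Pass 1: move everything to temporary names so that no final name
    # can overwrite a frame that has not been renamed yet.
    staged = [p.rename(p.with_name(f"__tmp_{i}{p.suffix}")) for i, p in enumerate(frames)]
    # Pass 2: assign the final sequential names.
    return [p.rename(root / (pattern % (start + i))) for i, p in enumerate(staged)]


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        for name in ("frame_0003.png", "frame_0007.png", "frame_0010.png"):
            (Path(tmp) / name).write_bytes(b"")
        print([p.name for p in renumber_frames(tmp)])
```

Files with other extensions, such as caption .txt files, are left untouched, so captions keyed to the old frame names would need the same renaming.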

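Usage examples

LoRA training prep: download an anime clip → extract PNG frames → upscale 4x → use in a Kohya_ss dataset.

Audio-reactive art: isolate a song's vocals → generate SD images with a "vocal waveform" prompt.

Video dataset: batch-download 50 YouTube videos → generate frames + stems → train Flux on the motion data.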
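Each of the chains above is an ordered list of steps in which every step consumes the previous step's output. One way to picture sequential processing is the small runner below; it is a conceptual sketch with hypothetical names, not the toolkit's queue implementation, and the stop-on-first-failure behavior is an assumption chosen because later steps depend on earlier ones.

```python
from typing import Callable, List, Tuple

Job = Tuple[str, Callable[[], None]]


def run_queue(jobs: List[Job]) -> List[Tuple[str, str]]:
    """Run jobs one at a time, in order, and record an outcome for each."""
    results: List[Tuple[str, str]] = []
    for name, action in jobs:
        try:
            action()
            results.append((name, "done"))
        except Exception as exc:
            results.append((name, f"failed: {exc}"))
            break  # later steps need this step's output, so stop here
    return results


if __name__ == "__main__":
    # Placeholder actions; real steps would call yt-dlp, FFmpeg, and so on.
    pipeline: List[Job] = [
        ("download", lambda: None),
        ("extract frames", lambda: None),
        ("upscale 4x", lambda: None),
    ]
    for step, status in run_queue(pipeline):
        print(f"{step}: {status}")
```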

Changes (v6 key updates)

Improved Reddit URL parsing.

Queue improvements plus custom arguments.

Dark theme with improved readability.

Fixed Demucs GPU detection bug.