QWEN-Anime Official Workflow

下载文件

关于此版本

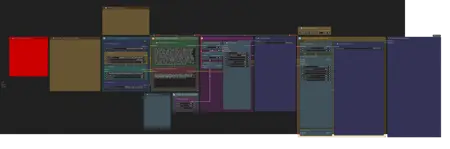

# 🎨 Official ComfyUI Workflow for QWEN-Anime-Beta2 (GGUF)

This is the GGUF-optimized workflow designed specifically for QWEN-Anime-Beta2 GGUF versions (Q4/Q6/Q8/F16). This workflow is tailored for users who want to use the GGUF quantized models for better memory efficiency or CPU inference.

## ⚠️ CRITICAL REQUIREMENT

YOU MUST INSTALL ComfyUI-GGUF FIRST!

This workflow will NOT work without the ComfyUI-GGUF custom node:

- GitHub: https://github.com/city96/ComfyUI-GGUF

- Installation: Via ComfyUI Manager or manual git clone

Without this custom node, GGUF models cannot be loaded!

---

## ✨ Features

### GGUF-Specific:

- ✅ Uses UnetLoaderGGUF node for GGUF model loading

- ✅ Correct folder structure unet/ instead of diffusion_models/)

- ✅ Optimized for GGUF quantized models (Q4/Q6/Q8/F16)

- ✅ Great for limited VRAM or CPU inference setups

### General Features:

- 📋 Step-by-step workflow with clear color-coded groups

- 📦 Integrated GGUF model download links and folder structure

- ⚡ Support for Lightning LoRAs (4-step and 8-step) for fast generation

- 🔼 Optional Hires Fix upscaler with side-by-side comparison

- 📐 Preset resolutions from 512×512 to 2K (2048×1152)

- 💾 Works with limited VRAM (GGUF Q4 = 10GB file!)

---

## 📦 What's Included

- Complete GGUF model loading setup with UnetLoaderGGUF node

- Prompt templates with tips for better results

- Resolution presets (SDXLResolutionPresets) for quick size selection

- Base generation + optional upscaling workflow

- Image comparison tool (rgthree) to compare before/after upscaling

- All necessary nodes with helpful titles and descriptions

- GGUF-specific instructions built directly into the workflow

---

## ⚙️ Requirements

### Essential:

1. ComfyUI (latest version recommended)

2. ComfyUI-GGUF custom node ⚠️ MANDATORY

- https://github.com/city96/ComfyUI-GGUF

### Additional Custom Nodes:

3. rgthree-comfy (for image comparison and group bypasser)

- https://github.com/rgthree/rgthree-comfy

4. comfyui-copilot (for resolution presets)

- https://github.com/hylarucoder/comfyui-copilot

### Files to Download:

- QWEN-Anime-Beta2 GGUF (Q4/Q6/Q8/F16) - Choose one

- Text Encoder (qwen_2.5_vl_7b_fp8_scaled.safetensors)

- VAE (qwen_image_vae.safetensors)

- Optional: Lightning LoRAs for speed boost

All download links are included directly in the workflow!

---

## 🚀 Quick Start

### Step 1: Install ComfyUI-GGUF

```

CRITICAL: This MUST be done first!

https://github.com/city96/ComfyUI-GGUF

```

Install via ComfyUI Manager or:

```bash

cd ComfyUI/custom_nodes/

git clone https://github.com/city96/ComfyUI-GGUF

```

Restart ComfyUI after installation.

### Step 2: Download Files

1. Load the workflow in ComfyUI

2. Read the Model Links note in the workflow

3. Download your chosen GGUF version (Q8 recommended for quality)

4. Download Text Encoder and VAE from provided links

5. Optional: Download Lightning LoRA

### Step 3: Place Files Correctly

⚠️ IMPORTANT: GGUF files go in a DIFFERENT folder!

```

📂 ComfyUI/models/

├── 📂 unet/ ⚠️ GGUF goes HERE!

│ └── qwen-anime-beta2-Q8.gguf

├── 📂 loras/Qwen/

│ └── Qwen-Image-Lightning-8steps-V1.0.safetensors

├── 📂 vae/QWEN/

│ └── qwen_image_vae.safetensors

└── 📂 text_encoders/QWEN/

└── qwen_2.5_vl_7b_fp8_scaled.safetensors

```

Common Mistake: Don't put GGUF in diffusion_models/ folder - it won't work!

### Step 4: Generate

1. Write your positive prompt

2. Write your negative prompt

3. Select resolution preset

4. Click Queue Prompt

5. Enjoy your anime art!

---

## 🎯 Which GGUF Version to Choose?

### Q8 (20.23 GB) - RECOMMENDED ⭐

- Best quality among quantized versions

- Minimal quality loss vs full precision

- Good balance of size and quality

- Recommended for most users

### Q6 (15.63 GB) - Balanced

- Good quality with smaller file size

- Slight quality reduction vs Q8

- Good for storage-limited systems

### Q4 (10.72 GB) - Smallest

- Smallest file size

- Fastest loading

- Noticeable but acceptable quality loss

- Great for very limited storage/VRAM

### F16 (38.07 GB) - Full Precision

- Maximum quality

- Same as original full precision

- Very large file size

- Only if you need absolute best quality

---

## 💡 GGUF Benefits

### Why Use GGUF Instead of Regular Safetensors?

✅ Smaller File Sizes - Q4 is only 10GB vs 38GB!

✅ Lower VRAM Usage - Works on lower-end GPUs

✅ CPU Inference - Can run on CPU if needed

✅ Faster Loading - Smaller files load quicker

✅ Good Quality - Q8 has minimal quality loss

### When to Use GGUF:

- Limited disk space

- Limited VRAM (below 8GB)

- Want faster model loading

- Need CPU inference support

- Storage/bandwidth limited

### When to Use Regular Safetensors Instead:

- Have plenty of VRAM (12GB+)

- Want absolute maximum quality

- Storage space not a concern

- Don't want to install extra custom nodes

---

## 📖 Workflow Guide

The workflow is organized into 5 color-coded groups:

### 📦 Step 1: Load Models (Blue)

- Uses UnetLoaderGGUF node (GGUF-specific!)

- Load Text Encoder with CLIPLoader

- Load VAE with VAELoader

- Optional: Add Lightning LoRA for speed

### ✍️ Step 2: Write Prompts (Green)

- Positive Prompt: Describe what you want

- Negative Prompt: Describe what to avoid

- Tips and templates included in workflow

### 📐 Step 3: Choose Size (Cyan)

- Use Resolution Presets for quick selection

- Tested resolutions: 512×512 to 2K

- All major aspect ratios supported

### 🎨 Step 4: Generate (Purple)

- Base KSampler creates initial image

- Automatic VAE decoding

- Preview and save base version

### 🔼 Step 5: Upscale (Orange - Optional)

- Toggle ON/OFF with Fast Groups Bypasser

- Upscales by 1.5x for better detail

- Second pass refines upscaled image

- Side-by-side comparison with original

---

## ⚙️ Recommended Settings

### Fast Mode (with 8-step LoRA):

- Steps: 8

- CFG: 1.0

- Resolution: 512×512 or 1024×1024

- Upscaler: OFF

- Time: ~30-80 seconds

### Quality Mode (no LoRA):

- Steps: 24-32

- CFG: 3.6-4.0

- Resolution: 1024×1024 or higher

- Upscaler: ON

- Time: ~180-400 seconds

### Ultra Quality (with upscaler):

- Steps: 32 base + 16 upscale

- CFG: 3.6-4.2

- Resolution: Start at 1024×1024, upscale to 1536×1536

- Upscaler: ON with denoise 0.55-0.65

- Time: ~300-600 seconds

---

## 🎨 Prompting Tips

### Good Prompt Structure:

```

anime, [quality tags], [subject], [details], [style], [lighting]

```

### Example Positive Prompt:

```

anime, masterpiece, best quality, 1girl, nekomimi, fluffy cat ears,

long silver hair, golden eyes, playful smirk, witch hat,

casting magic, cozy library, warm lighting, detailed, vibrant colors

```

### Example Negative Prompt:

```

low quality, blurry, distorted, bad anatomy, bad hands,

text, watermark, signature, ugly, mutation, extra limbs

```

---

## 🐛 Troubleshooting

### "UnetLoaderGGUF node not found"

Solution: Install ComfyUI-GGUF custom node!

https://github.com/city96/ComfyUI-GGUF

### "Cannot find GGUF model"

Solution: Make sure file is in models/unet/ folder, NOT diffusion_models/

### "Out of memory" error

Solution:

- Use smaller GGUF version (Q6 or Q4)

- Lower resolution (try 512×512)

- Disable upscaler

- Close other applications

### Images look worse than expected

Solution:

- Try higher GGUF version (Q8 instead of Q4)

- Increase steps (20-32)

- Adjust CFG scale (try 3.0-4.0)

- Add quality tags to prompt

### Workflow loads but generates errors

Solution:

- Verify all custom nodes are installed

- Check all files are in correct folders

- Restart ComfyUI after installing nodes

- Check ComfyUI console for specific errors

---

## 💬 What Makes This Different?

### vs Regular Safetensors Workflow:

- ✅ Uses UnetLoaderGGUF instead of UNETLoader

- ✅ Files go in unet/ folder instead of diffusion_models/

- ✅ Requires ComfyUI-GGUF custom node

- ✅ Smaller file sizes (10-20GB vs 38GB)

- ✅ Better for limited VRAM setups

### vs Basic GGUF Setup:

- ✅ Complete workflow with all steps

- ✅ Built-in upscaler and comparison

- ✅ Resolution presets included

- ✅ All download links provided

- ✅ Instructions built into workflow

---

## 📋 Checklist Before Starting

Before you start generating, make sure:

- [ ] ComfyUI-GGUF custom node is installed

- [ ] rgthree-comfy custom node is installed

- [ ] comfyui-copilot custom node is installed

- [ ] GGUF model file is in models/unet/ folder

- [ ] Text Encoder is in models/text_encoders/QWEN/

- [ ] VAE is in models/vae/QWEN/

- [ ] (Optional) Lightning LoRA in models/loras/Qwen/

- [ ] ComfyUI has been restarted after installing nodes

- [ ] Workflow loads without errors

If all boxes are checked, you're ready to generate! 🎨

---

## 🎯 Perfect For

- Beginners - Step-by-step color-coded workflow

- Limited Storage - Smaller file sizes (Q4 = 10GB!)

- Limited VRAM - Works on lower-end GPUs

- CPU Users - GGUF supports CPU inference

- Bandwidth Limited - Smaller downloads

- Advanced Users - Full control over all settings

---

## 📞 Support

### If you need help:

1. Check the troubleshooting section above

2. Verify all custom nodes are installed

3. Check ComfyUI console for error messages

4. Make sure files are in correct folders

5. Visit the CivitAI model page for updates

### Common Questions:

Q: Do I need the regular safetensors version too?

A: No! This workflow is complete and self-contained for GGUF.

Q: Can I use regular safetensors with this workflow?

A: No, this workflow is GGUF-specific. Use the regular workflow for safetensors.

Q: Which GGUF version is best?

A: Q8 for best quality, Q4 for smallest size. See "Which GGUF Version" section above.

Q: Why can't I find my GGUF file in ComfyUI?

A: Make sure it's in models/unet/ folder, NOT diffusion_models/!

Q: Do I still need VAE and Text Encoder?

A: Yes! GGUF is just the main model. You still need VAE and Text Encoder separately.

---

## 🙏 Credits

Workflow created for: QWEN-Anime-Beta2 GGUF versions

Base Model: qwen-image-edit 2509

Custom Dataset: Hand-created by model author

ComfyUI-GGUF: city96 (https://github.com/city96/ComfyUI-GGUF)

rgthree-comfy: rgthree

comfyui-copilot: hylarucoder

---

## 📝 Version Info

Workflow Version: 1.0

Compatible With: QWEN-Anime-Beta2 GGUF (all versions)

Created: November 2025

Last Updated: November 2025

---

Download the workflow, install ComfyUI-GGUF, and start creating amazing anime art! 🎨

Perfect for users who want smaller file sizes and better memory efficiency while maintaining great quality!

---

## 🔗 Useful Links

- QWEN-Anime-Beta2 Model: (CivitAI page)

- ComfyUI-GGUF: https://github.com/city96/ComfyUI-GGUF

- rgthree-comfy: https://github.com/rgthree/rgthree-comfy

- comfyui-copilot: https://github.com/hylarucoder/comfyui-copilot

- Text Encoder: https://huggingface.co/Comfy-Org/Qwen-Image_ComfyUI/resolve/main/split_files/text_encoders/qwen_2.5_vl_7b_fp8_scaled.safetensors

- Lightning LoRAs: /model/2048940 & /model/2046883

模型描述

🎨 QWEN-Anime-Beta1 官方 ComfyUI 工作流

这是专为 QWEN-Anime-Beta1 检查点优化的工作流,包含快速上手并生成高质量动漫图像所需的一切。

✨ 功能:

- 带清晰彩色分组的逐步工作流

- 集成模型下载链接与文件夹结构指南

- 支持 Lightning LoRAs(4步与8步)实现快速生成

- 可选的高分辨率修复上采样器,支持并排对比

- 预设分辨率:从 512×512 到 2K(2048×1152)

- 适用于 8GB 显存

📦 包含内容:

- 完整的模型加载设置

- 带提示的提示词模板

- 分辨率预设(SDXLResolutionPresets)

- 基础生成 + 可选上采样

- 图像对比工具(rgthree)

- 所有必要节点,附带清晰标题与说明

⚙️ 要求:

- ComfyUI

- 自定义节点:rgthree-comfy、comfyui-copilot(工作流中包含安装链接)

- QWEN-Anime-Beta1 检查点 + VAE + 文本编码器(工作流中提供下载链接)

- 可选: Lightning LoRAs 用于加速

🚀 快速开始:

1. 在 ComfyUI 中加载工作流

2. 按照“模型链接”说明下载所需文件

3. 将文件放入正确文件夹(工作流中展示结构)

4. 编写你的提示词

5. 选择分辨率

6. 生成!

适合新手与高级用户。所有模型链接、安装说明和技巧均已内置在工作流中。