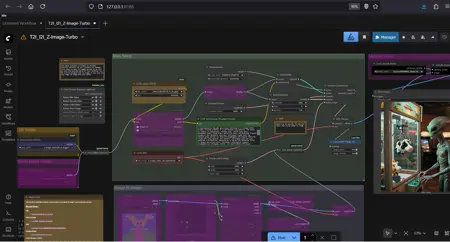

Simple Z-Image-Turbo Diffusion/GGUF Workflow (T2I, I2I & Upscaling)

Model description

This is a comprehensive workflow designed for the Z-Image-Turbo model. With GGUF support, it is set up to handle both standard use and low-VRAM environments.

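Workflow features:

Dual model support: Use the provided switch to toggle between the standard .safetensors model and the quantized GGUF version.

Mode switching: The "Fast Groups Bypasser" setup lets you switch quickly between Text-to-Image and Image-to-Image modes without rewiring.

Built-in upscaling: Includes an upscaling stage that uses LexicaRRDBNet to sharpen the final output.

Resolution management: Uses ResolutionMaster to keep the aspect ratio correct.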
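To illustrate what keeping the aspect ratio involves, here is a minimal Python sketch that derives a width and height from an aspect ratio. It is not ResolutionMaster's actual algorithm. The function name, the roughly one-megapixel pixel budget, and snapping to multiples of 16 are all assumptions chosen for illustration.

```python
import math


def dimensions_for_aspect(aspect_w, aspect_h, target_pixels=1024 * 1024, multiple=16):
    """Return (width, height) close to target_pixels for the given aspect ratio.

    Hypothetical helper: the pixel budget and the snapping multiple are
    illustrative assumptions, not values taken from the workflow.
    """
    ratio = aspect_w / aspect_h
    # Solve width * height = target_pixels with width = ratio * height.
    height = math.sqrt(target_pixels / ratio)
    width = height * ratio

    def snap(value):
        # Round to the nearest multiple, never going below one multiple.
        return max(multiple, int(round(value / multiple)) * multiple)

    return snap(width), snap(height)


if __name__ == "__main__":
    for w, h in [(1, 1), (16, 9), (3, 4)]:
        print(f"{w}:{h} ->", dimensions_for_aspect(w, h))
```

Snapping changes the ratio slightly, so the result is an approximation of the requested aspect ratio rather than an exact match.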

Required models:

To use this workflow fully, you need the following files (links are provided in the notes inside the workflow):

UNET: z_image_turbo_bf16.safetensors or z_image_turbo-Q5_K_M.gguf

CLIP: Qwen3-4B-UD-Q5_K_XL.gguf (this model uses a Qwen text encoder).

VAE: z_image_turbo_vae.safetensors

Upscaler: 2xLexicaRRDBNet_Sharp.pth (or your preferred upscaler).
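Before loading the workflow, it can help to confirm that the files listed above are in place. The following is a minimal sketch, not part of the workflow itself. The subfolder names (`unet`, `clip`, `vae`, `upscale_models`) follow common ComfyUI conventions and are an assumption here. Some installs use `diffusion_models` and `text_encoders` instead, so adjust the mapping to match yours.

```python
from pathlib import Path

# Assumed layout: subfolder names are common ComfyUI conventions and may
# differ on your install. Each group is satisfied by ANY one of its files.
REQUIRED_FILES = {
    "unet": ["z_image_turbo_bf16.safetensors", "z_image_turbo-Q5_K_M.gguf"],
    "clip": ["Qwen3-4B-UD-Q5_K_XL.gguf"],
    "vae": ["z_image_turbo_vae.safetensors"],
    "upscale_models": ["2xLexicaRRDBNet_Sharp.pth"],
}


def find_missing(models_dir, required=REQUIRED_FILES):
    """Return {subfolder: candidates} for each group with no file present."""
    root = Path(models_dir)
    missing = {}
    for subfolder, candidates in required.items():
        if not any((root / subfolder / name).is_file() for name in candidates):
            missing[subfolder] = candidates
    return missing


if __name__ == "__main__":
    # "ComfyUI/models" is a placeholder path; point it at your own install.
    for folder, names in find_missing("ComfyUI/models").items():
        print(f"Missing in {folder}/: one of {names}")
```

The UNET group lists both files because only one of the two is needed, depending on which loader you select.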

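How to use:

Select a model: Use the switch at the far left to choose either the GGUF loader or the standard UNET loader.

Select a mode: Use the "Fast Groups Bypasser" (the list of multicolored buttons) to enable either "Text-to-Image" or "Image-to-Image" mode.

- Important: When using Image-to-Image mode, adjust the denoise strength on the sampler (node 61) to suit your needs.

Prompt and queue: Enter your text in the CLIP Text Encode node and run the workflow.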

Requirements:

- ComfyUI Manager (for installing missing custom nodes such as rgthree, KJNodes, and GGUF)