Illustrious Txt2Img with ControlNet, Detailer Daemon, Detailers and IPAdapters

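🗒️ My brand-new ComfyUI Illustrious workflow

Hi, I'd like to share the Illustrious workflow I've been using lately.

It is an upgrade of my previous workflow, which you can find here: /model/1830913/illustrious-txt2img-with-multiple-detailers-workflow.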

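Key features

Multiple detailers: person, face, eyes, hands, chest and nipples. Each can be enabled or disabled independently with a bypass switch, so you control exactly which parts get refined.

Optional ControlNet system: switch the whole ControlNet group on or off, or activate specific networks when you need precise structure or pose control.

IPAdapter integration: use several reference images at once, each with an adjustable weight, for consistent style, lighting and character identity.

LoRA group loaders: preload whole sets of LoRAs with their weights and toggle them with one click.

Detailer Daemon: sharpens textures, enhances fine features or smooths detail without touching the sampler or the prompt.

Two-KSampler workflow: a flexible dual-sampler setup that gives extra control over form without making the workflow more complex.

Full metadata saving: automatically saves the model, prompts, settings and LoRAs used, which is ideal for sharing and uploading to CivitAI.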

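Auto-save: add %date to the save path and ComfyUI automatically sorts outputs into date-named folders.

Beginner-friendly bypass architecture: start in simple mode and switch on advanced features one at a time. This is ideal for learning step by step without information overload.

SAM + Ultralytics segmentation support: high-quality segmentation models make sure the detailers accurately find target regions such as faces, hands and bodies.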

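Disclaimer

I am not an expert; in fact, I'm a beginner. This workflow evolved from my previous one, which in turn was inspired by the excellent work of DigitalPastel and Mittoshuras. I stitched their ideas together until everything ran.

The workflows I drew on include:

⚠️ So if you run into problems with this workflow, I may not be able to support you as well as I'd like, and I may not be able to help at all.

I provide a short step-by-step guide below, and within the next week or two I plan to write a full article that breaks the workflow down in detail. Until then, if you hit a problem, ChatGPT is your friend (that's what I did): paste in your error message and keep trying.

Alternatively, if you look at this workflow and think "what on earth is this", I strongly recommend starting with DigitalPastel's Smooth-workflow. It was an excellent starting point when I was getting into this.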

⚡ Quick start

The friendliest way to start generating images:

1. Load the workflow into ComfyUI.

Hide the links; this workflow is a bird's nest of wires.

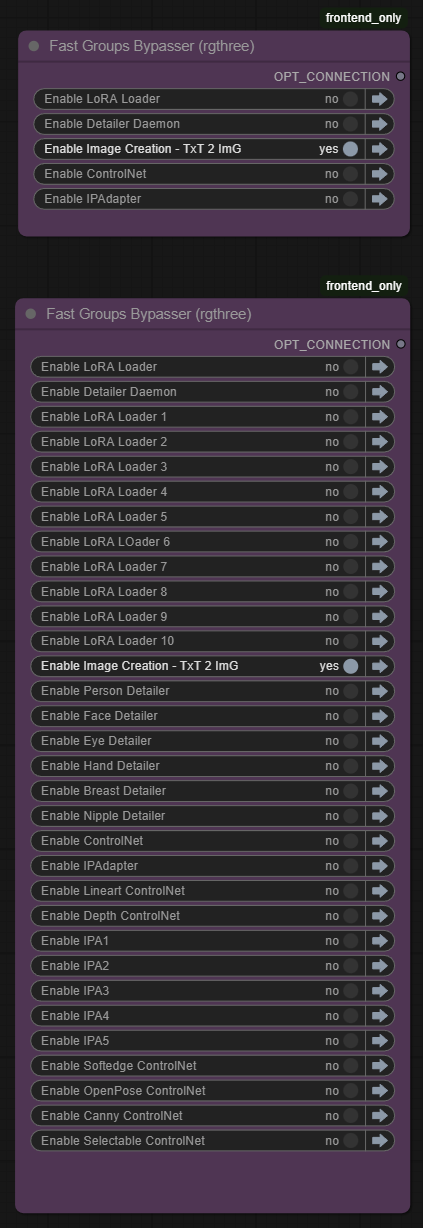

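2. Turn on only the image-generation section and switch everything else off.

This keeps the interface clean and beginner-friendly, and it is also a good way to test your hardware. Use the main bypass switch to make sure everything except Txt2Img is off.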

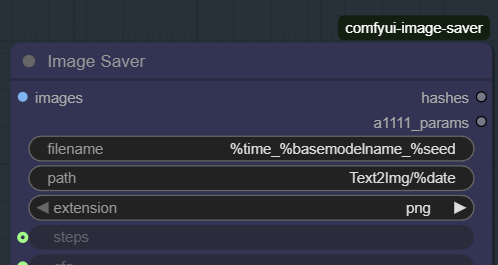

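3. Set the image save path.

In the image saver node, set the image name, path and file format.

Currently, images are saved in a folder named Text2Img, with a subfolder per generation date, for example: Text2Img/2025-12-01/filename.png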

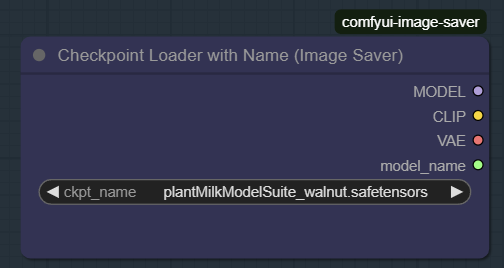
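To make the date-folder behavior from step 3 concrete, here is a minimal Python sketch of how a `%date` token in a save path could expand into a dated subfolder. It is only an illustration of the idea: `expand_save_path` is a hypothetical helper, not code from the saver node, and the real node may format the date differently.

```python
from datetime import date
from pathlib import PurePosixPath


def expand_save_path(template: str, filename: str, today: date) -> str:
    """Replace the %date token with an ISO date (YYYY-MM-DD) and append the file name.

    Hypothetical helper for illustration; the saver node does this internally.
    """
    folder = template.replace("%date", today.isoformat())
    return str(PurePosixPath(folder) / filename)


# Reproduces the example path from step 3.
print(expand_save_path("Text2Img/%date", "filename.png", date(2025, 12, 1)))
# prints: Text2Img/2025-12-01/filename.png
```

Because the date is part of the folder name, each day's outputs land in their own subfolder without any manual sorting.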

4. Select the model checkpoint.

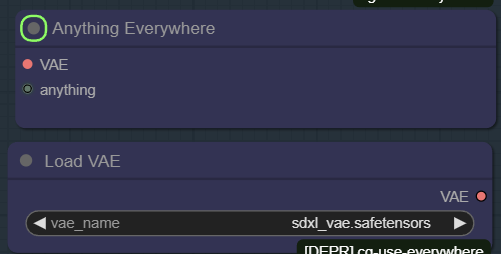

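5. Load a VAE (only if needed).

Load a separate VAE only when you really need one. To do so, connect the "Load VAE" node to the "VAE Anywhere" node. (By default, that node is connected to the checkpoint loader.)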

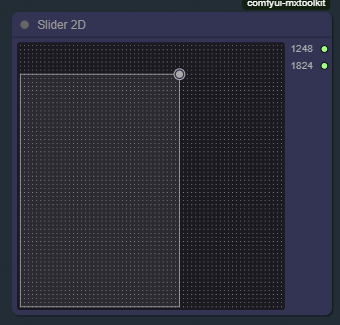

6. Set the image size with the 2D slider.

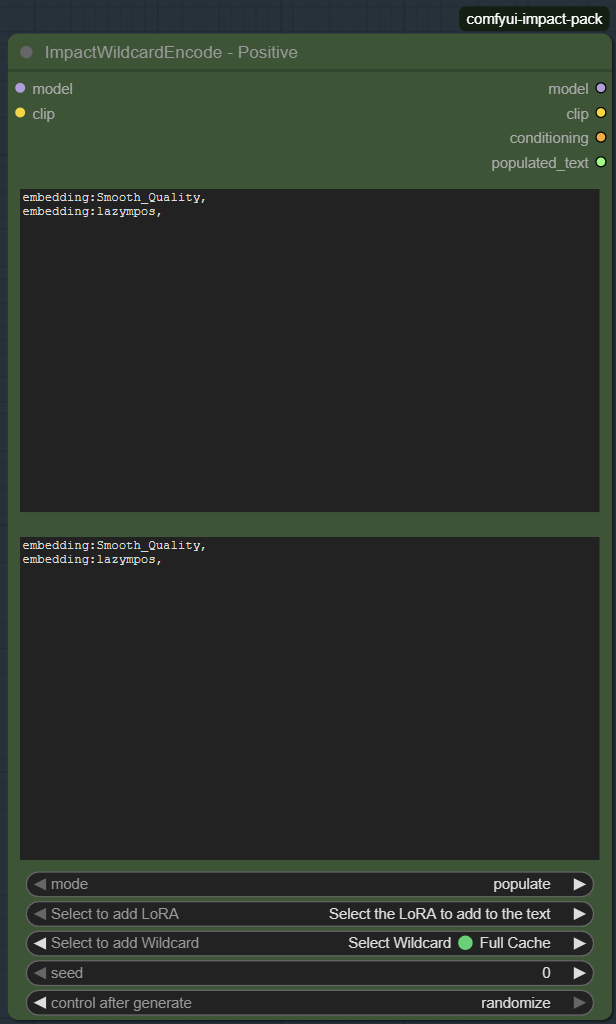

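7. Write the positive prompt.

Use the green positive prompt node. Keep it simple for a first test, for example:

“detailed portrait of a woman, soft lighting, realistic style”

Or, if you want to go a step further:

The positive node has two areas.

Upper box: enter your positive prompt.

Use "Select to add LoRA" to insert a LoRA.

Format example: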

embedding:SmoothNoob_Quality<lora:Smooth_Booster:0.5>

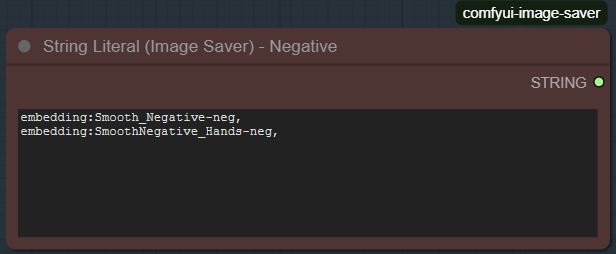
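The format example in step 7 mixes two kinds of tokens: `embedding:<name>` and `<lora:<name>:<weight>>`. The Python sketch below shows one way to pull both out of a prompt string, which can help when checking that a prompt is well formed. The regular expressions and the `split_prompt` helper are assumptions made for illustration; they are not how ComfyUI or the prompt node parses text.

```python
import re

# Assumed token shapes, based only on the format example in step 7.
LORA_TAG = re.compile(r"<lora:([^:>]+):([0-9]*\.?[0-9]+)>")
EMBEDDING = re.compile(r"embedding:([\w\-.]+)")


def split_prompt(prompt: str):
    """Return (embedding names, [(lora name, weight), ...]) found in the prompt."""
    loras = [(name, float(weight)) for name, weight in LORA_TAG.findall(prompt)]
    # Remove the LoRA tags first so they cannot be mistaken for embedding text.
    without_loras = LORA_TAG.sub("", prompt)
    embeddings = EMBEDDING.findall(without_loras)
    return embeddings, loras


embeddings, loras = split_prompt("embedding:SmoothNoob_Quality<lora:Smooth_Booster:0.5>")
print(embeddings)  # ['SmoothNoob_Quality']
print(loras)       # [('Smooth_Booster', 0.5)]
```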

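8. Add a basic negative prompt.

Use the red negative prompt node. It works the same way as the positive prompt and can contain embeddings, or you can keep it simple to begin with, for example:

“blurry, distorted anatomy, extra limbs, bad hands”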

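9. Click Queue Prompt.

Congratulations, your first output is done.

10. Want more control?

Switch on new modules one at a time:

Detailers

ControlNets

IPAdapter

LoRA loader groups

The workflow is designed so that you can level up gradually without breaking anything, or, more accurately, so that when something does go wrong it is easy to roll back.

Below is what each module does and, more importantly, when you should consider using it.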

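⭐ Detailers: what they do and when to use them

This workflow includes six dedicated detailers that clean up and refine specific regions of the image. Each one can be enabled or disabled independently with a bypass switch, so you activate only what you need.

Think of the detailers as your "post-production artists", each handling a region the model often gets wrong.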

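👤 Person detailer

The person detailer refines the whole character in one pass. It cleans up the overall silhouette, clothing edges, hair outline and major anatomy, laying the groundwork for the more specialized detailers.

When to use it?

Use the person detailer when:

• The character as a whole looks blurry or loose

• Clothing or body edges need sharpening

• Hair looks messy or lacks structure

• The base render is well composed but short on detail

• You want a clean base before refining the face, hands or eyes

It is an excellent "first pass" detailer, especially for portraits and full-body shots.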

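🙂 Face detailer

This detailer focuses on the whole face: outline, nose, lips, jawline, expression, cheek detail and so on.

When to use it?

Use the face detailer when:

• The face is blurry or lacks definition

• A clear expression is essential

• You want better skin detail without harsh artifacts

• You are making portrait-style images

• The base model often produces inconsistent faces

If the character is the focus of the image, it is almost always worth turning on.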

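👁️ Eye detailer

Eyes are the focal point of most images, and models often blur or distort them. This detailer isolates and refines only the eyes and eyelids for maximum clarity.

When to use it?

Use the eye detailer when:

• The eyes are blurry, asymmetric or mismatched

• Iris detail does not stand out enough

• Eyelashes turn into smudged shadows

• You want more expressive or intense eyes

• You are generating close-ups or portraits

This detailer gives a noticeable boost in visual quality at very low compute cost.

This section also contains its own checkpoint loader. I have found that using a different checkpoint for the eyes often produces stunning results.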

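✋ Hand detailer

Hands are probably the "final boss" of AI image generation. This detailer keeps them under control by refining the mask and sharpening finger shapes.

When to use it?

Use the hand detailer when:

• Fingers are blurry, fused or unclear

• Hands are prominent in the frame or in a complex pose

• Jewelry, tattoos or props are involved

• You want more realistic shading and structure

• Hands are close to the face in a close-up

Whenever hands appear in the image, this detailer is your best friend.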

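⭐ Chest detailer

Refines the shape, shading and outline of the chest area and corrects deformations or modeling flaws. It is especially effective at high resolution or in stylized character designs.

When to use it?

Use the chest detailer when:

• You are making NSFW, glamour or revealing-outfit content

• Clothing fabric shows odd folds

• The chest area shows stretching, distortion or strange shadows

• You want smoother curves and more natural highlights

Not essential, but very helpful for character-focused compositions.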

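🔸 Nipple detailer

This detailer depends heavily on the segmentation model and may need some experimentation. It refines only the nipple area and can fix common texture or placement problems, but results vary from model to model.

When to use it?

Use the nipple detailer when:

• You are generating NSFW content

• The model renders nipples inconsistently by default

• You need sharper detail or more realistic texture

• The checkpoint you are using performs poorly in this area

If your model already renders nipples well, leave this off; otherwise it is worth a try.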

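🎛️ General advice for detailers

• You don't need every detailer on at once; activate only those relevant to the current image

• Portraits: face + eyes gives the biggest improvement

• Full-body shots: person + hands is usually enough

• NSFW: chest + nipples + person is the strongest combination

• If the image looks over-processed, turn off one or two detailers

The power of the detailers comes from using them strategically, not from leaving them on all the time.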
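The combinations above can be written down as a small lookup table. The Python sketch below is only a way to visualize which bypass switches each use case leaves on; the preset names and the `bypass_states` helper are hypothetical and are not part of the workflow file.

```python
# The six detailers in this workflow.
DETAILERS = ("person", "face", "eyes", "hands", "chest", "nipples")

# Recommended combinations from the advice above (preset names are made up).
PRESETS = {
    "portrait": {"face", "eyes"},
    "full_body": {"person", "hands"},
    "nsfw": {"chest", "nipples", "person"},
}


def bypass_states(preset: str) -> dict:
    """Map each detailer to True if it should be bypassed (switched off)."""
    active = PRESETS[preset]
    return {name: name not in active for name in DETAILERS}


for name, bypassed in bypass_states("portrait").items():
    print(f"{name:8s} {'bypassed' if bypassed else 'ACTIVE'}")
```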

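⭐ Detailer Daemon: the secret to smart detail

The Detailer Daemon (from the Impact Pack) improves refinement by adjusting the sigma curve, that is, the noise schedule the sampler follows while denoising. By shaping this curve, the Daemon gives you precise control over when detail appears.

This lets you:

• Add structural detail early in denoising

• Add fine, sharp detail late for a cleaner final result

• Reduce over-sharpening or muddy textures

• Improve overall texture handling on models such as SDXL, SD1.5 and FLUX

Think of it as a timing controller for detail: instead of relying on sampler settings alone, the Daemon fine-tunes the flow of detail so the image looks smoother, clearer and more intentional.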
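To illustrate what "shaping the sigma curve" can mean, here is a minimal Python sketch that scales down the sigmas in a chosen window of the schedule and leaves the first and last steps alone. It is a conceptual toy under stated assumptions, not the Daemon's actual algorithm: the `adjust_sigmas` function, its parameters (`amount`, `start`, `end`) and the triangular weighting are all invented for this example.

```python
def adjust_sigmas(sigmas, amount=0.1, start=0.2, end=0.8):
    """Return a copy of `sigmas` reduced by up to `amount` inside [start, end].

    `start` and `end` are fractions of the schedule (0 = first step, 1 = last)
    and must satisfy start < end. The reduction follows a triangular bump:
    zero at the window edges, strongest at the window midpoint.
    """
    n = len(sigmas)
    mid = (start + end) / 2
    half = (end - start) / 2
    adjusted = []
    for i, sigma in enumerate(sigmas):
        t = i / (n - 1) if n > 1 else 0.0
        if start <= t <= end:
            weight = max(0.0, 1.0 - abs(t - mid) / half)
        else:
            weight = 0.0
        adjusted.append(sigma * (1.0 - amount * weight))
    return adjusted


# A made-up six-step schedule, used only to show the effect.
schedule = [14.0, 8.0, 4.0, 2.0, 1.0, 0.0]
for before, after in zip(schedule, adjust_sigmas(schedule)):
    print(f"{before:6.2f} -> {after:6.3f}")
```

Moving `start` and `end` changes when in the denoising process the adjustment applies, which is the "timing" aspect described above.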

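When to use it?

Turn on the Detailer Daemon when:

• The image feels too soft or lacks definition

• The detailers produce results that are too harsh or over-sharpened

• You want smoother, more consistent refinement without tuning complex sampler settings

• You are working at high resolution and want better control over fine textures

• You want a more "polished" result without adding steps or using an external upscaler

Not essential, but once you get used to this cleaner flow of detail, it is hard to go back.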

How to install:

Download the Impact Pack:

https://github.com/ltdrdata/ComfyUI-Impact-Pack

Place it in:

ComfyUI/custom_nodes/

Restart ComfyUI and the Detailer Daemon is ready to refine your renders.

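⭐ ControlNets: installation and how the optional system works

ControlNets are the "structure assistants" of your workflow. They let you guide the model with external information such as pose, edges, depth, line art or scribbles. Instead of hoping the model understands your prompt exactly, let ControlNets give it a gentle (or firm) push.

This workflow includes an optional ControlNet system that keeps everything tidy and manageable:

• Switch the whole ControlNet group on or off with one click

• Enable only the individual networks you need

• Stop your graph from turning into a ControlNet "spaghetti monster"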

Where to download SDXL ControlNets:

• https://huggingface.co/lllyasviel

• https://huggingface.co/InstantX/

• https://huggingface.co/thibaud

• https://civitai.com (search for "SDXL ControlNet")

Where to put them:

ComfyUI/models/controlnet/

Once installed, they appear automatically in the drop-down menu of every ControlNet Loader.

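How the optional system works

- Main blue box → enables or disables the whole ControlNet module

- Yellow boxes → switch individual ControlNets on or off

- Everything stays organized instead of sprawling across half the screen

Start simple, and switch on additional networks only when the project needs them.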
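The two-level switching described above (one master switch plus one switch per network) boils down to a simple rule: a ControlNet runs only if the master switch and its own switch are both on. The Python sketch below states that rule explicitly. The `active_controlnets` helper and the network names are hypothetical and serve only as an illustration.

```python
def active_controlnets(master_enabled: bool, toggles: dict) -> list:
    """Return the names of the ControlNets that would actually run.

    `master_enabled` plays the role of the main blue box; `toggles` maps each
    network name to the state of its yellow box.
    """
    if not master_enabled:
        return []  # master off: nothing runs, whatever the individual switches say
    return [name for name, enabled in toggles.items() if enabled]


# Hypothetical network names, for illustration only.
toggles = {"openpose": True, "canny": False, "depth": True}
print(active_controlnets(True, toggles))   # ['openpose', 'depth']
print(active_controlnets(False, toggles))  # []
```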

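⭐ When to use ControlNets?

Enable a ControlNet when:

• You want a _specific pose_ and don't want to fight randomness

• You have a reference sketch or scribble and want the model to follow it

• You need cleaner structure: straight lines, well-defined shapes and consistent outlines

• Your images keep drifting away from the layout you had in mind

• You want to use depth or edge information to guide lighting

• You are working on character consistency and want the character to stay on-model

• You want more control without writing long prompts

They are optional, but once you are familiar with them they become extremely powerful.

With the optional system, you can easily switch them on or off as needed.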

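⭐ IPAdapter: what it is and how to install it

IPAdapter is the visual-reference interpreter of your workflow. It lets you feed an image into the pipeline and guide the model to match its style, composition, lighting or character identity without overpowering your text prompt.

Unlike ControlNets, which enforce structure, IPAdapter is more of a gentle influence:

“Make it look a bit _more like this_.”

This workflow includes several IPAdapter slots, so you can blend multiple reference images at once, each with its own adjustable weight for fine control.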

Where to download the SDXL version of IPAdapter:

• https://huggingface.co/h94/IP-Adapter/tree/main/sdxl

Download the .safetensors files for SDXL.

Where to download the required CLIP Vision model:

• https://huggingface.co/laion/CLIP-ViT-H-14-laion2B-s32B-b79K

Where to put them:

ComfyUI/models/ipadapter/

ComfyUI/models/clip_vision/

Once they are in the right place, they appear automatically in the IPAdapter nodes.
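If a model does not show up in a drop-down, the usual cause is a file in the wrong folder. The Python sketch below lists the model files found in the three folders named in this guide (ControlNet, IPAdapter and CLIP Vision). It assumes ComfyUI lives in a folder called `ComfyUI` relative to where you run the script, and the set of file extensions is a guess; adjust both for your setup. `find_models` is a hypothetical helper, not part of ComfyUI.

```python
from pathlib import Path

# Folder locations taken from this guide, relative to the ComfyUI root.
EXPECTED = {
    "ControlNet": "models/controlnet",
    "IPAdapter": "models/ipadapter",
    "CLIP Vision": "models/clip_vision",
}

# Assumed model file extensions; extend this set if yours differ.
MODEL_SUFFIXES = {".safetensors", ".pth", ".bin"}


def find_models(comfy_root: str) -> dict:
    """Return {label: sorted model file names}; empty list if a folder is missing."""
    report = {}
    for label, relative in EXPECTED.items():
        folder = Path(comfy_root) / relative
        if folder.is_dir():
            names = sorted(p.name for p in folder.iterdir() if p.suffix in MODEL_SUFFIXES)
        else:
            names = []
        report[label] = names
    return report


for label, names in find_models("ComfyUI").items():
    print(f"{label}: {names if names else 'no model files found'}")
```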

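⭐ When to use IPAdapter?

Enable IPAdapter when you want the image to follow visual cues from a reference image instead of relying on the text prompt alone.

It is especially useful when:

• You want to match a character's face, hairstyle or silhouette

• You want to keep a character's identity consistent across multiple images

• You prefer a particular lighting style or color palette

• Prompts alone can't get you the result you want

• You want to blend several influences (for example, the style of one image + the lighting of another)

• You are doing cosplay, portraits or anything that needs a recognizable likeness

IPAdapter shines when you need guidance but not the strict structure of a ControlNet: it influences the overall "feel" of the image and does not force exact shapes.

If you only need to match style or mood, IPAdapter is often enough.

If you need a pose or precise structure, combine it with a ControlNet.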

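⭐ LoRA loader groups: the best way to use multiple LoRAs quickly

LoRA loader groups let you preload whole sets of LoRAs and switch them on or off with one click, without cluttering the positive prompt. They are ideal when you need to switch styles, character identities or themed looks quickly and consistently.

Instead of typing LoRA weights such as <lora:thinghere:0.8> by hand every time, these loaders let you:

• Preload several LoRAs at once

• Adjust the weights in one place

• Switch a whole group on or off with a bypass switch

• Keep the main prompt short and readable

Each group also includes a handy string box for storing the trigger words, tags or character names associated with its LoRAs, so you can quickly copy and paste them into the prompt.

The system is especially useful in complex workflows, where a cluttered prompt becomes a real problem.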
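One way to picture a loader group is as a small record: an on/off switch, a set of LoRAs with weights, and the trigger words stored alongside them. The Python sketch below models that and collects what the enabled groups contribute. The `LoraGroup` class, the `collect_active` function and all LoRA and trigger names are hypothetical; the workflow itself does this with nodes, not code.

```python
from dataclasses import dataclass, field


@dataclass
class LoraGroup:
    """Hypothetical model of one loader group in the workflow."""
    name: str
    enabled: bool                      # state of the group's bypass switch
    loras: dict                        # LoRA name -> weight
    trigger_words: list = field(default_factory=list)


def collect_active(groups):
    """Merge the LoRAs and trigger words of every enabled group."""
    loras, triggers = {}, []
    for group in groups:
        if not group.enabled:
            continue  # a bypassed group contributes nothing
        loras.update(group.loras)
        triggers.extend(group.trigger_words)
    return loras, triggers


# Made-up group contents, for illustration only.
groups = [
    LoraGroup("style", True, {"painterly_style": 0.6}, ["painterly"]),
    LoraGroup("cyberpunk", False, {"neon_city": 0.8}, ["neon lights"]),
]
loras, triggers = collect_active(groups)
print(loras)     # {'painterly_style': 0.6}
print(triggers)  # ['painterly']
```

Keeping weights and trigger words inside the group is what lets the main prompt stay short: switching the group off removes both in one step.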

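⭐ When to use LoRA loader groups?

Use LoRA loader groups when:

• You switch between styles often (anime, realistic, cinematic, painterly)

• You have several character LoRAs and want to swap them quickly

• You are experimenting with themed packs (for example, a "cyberpunk pack" or a "fantasy armor pack")

• You want consistent LoRA weights across many renders

• You want to keep long LoRA syntax out of your prompt

• You are running batch generations that need different LoRA setups

They are especially powerful in a workflow like this one, which is built around modular switches.

If you only use a single LoRA now and then, just write it into the positive prompt.

But if LoRAs are a regular part of your generation process, loader groups save time, reduce inconsistency and keep everything organized.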