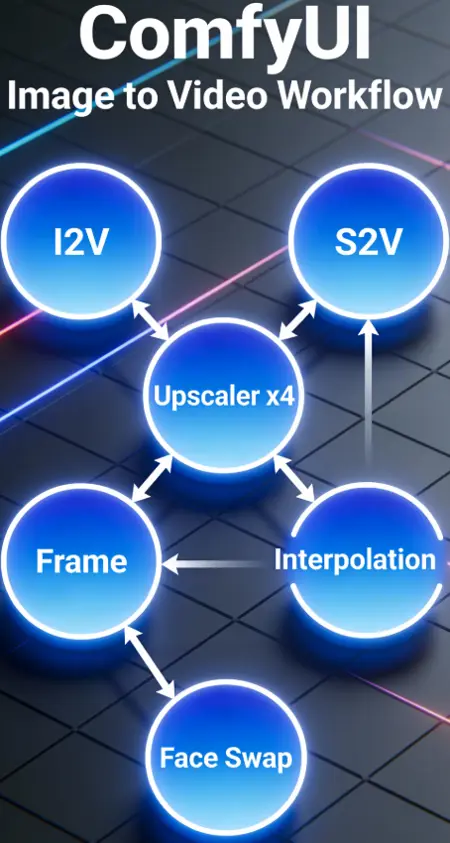

Wan2.2 AIO Workflow (I2V - S2V - Upscaler - Frame Interpolation - Faceswap)

Model description

First, all credit goes to thesharque588 for the base of this workflow!

An all-in-one (AIO) workflow for long videos, with scenario control and automation.

Please be indulgent with this workflow (I started learning ComfyUI from scratch, on my own, in August)!

The goal of this workflow is to gather everything I need in one place and to be able to chain the steps together. I think it's good enough to be shared.

This workflow includes:

Scenario control by Index

Select duration of each Index individually

Auto prompt translator

Color Match

FaceSwap (ReActor Faceswap)

Frame Interpolation (RIFE VFI)

S2V with MMaudio (No audio source needed)

Upscalers x2-x4 (TensorRT Upscaler (x4) + Video Upscaler (x2))

Group control (each group runs individually)

How does this thing work?

First important thing! You have to run groups using the "GroupExecutor" nodes; this launches only the selected group. It's designed so that all your groups can stay active without loading the models of the groups on standby. If you start a generation with the "Execute" button in ComfyUI, it will load the MMAudio models in parallel at the beginning of the I2V generation, leaving you less VRAM for I2V. Sometimes one of the "GroupExecutor" nodes stops working (I'm still trying to find out why); just replace it with a new one or copy another one from the workflow.

The process is split into 4 groups: Image to Video, Sound to Video, Upscalers, and Go to 72 FPS (Frame Interpolation).

I2V

Step 1: I2V Enhancement - Choose the enhancements you want for your video.

Select the source image by choosing 0 or 1 in the "Select Source Image" node:

0 = Load an individual image

1 = Load images from a folder and process the number of images you set (this is meant for AFK use: leave the workflow running and it will loop, restarting with another image from the folder path after each generation. Don't forget to set your folder path!). This mode still needs improvements; I'm working on it.
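To picture what the folder mode does, here is a minimal sketch of the "loop over a folder" idea, assuming images are taken in name order and the selection wraps back to the first image once the folder is exhausted. This is not the actual loader node's code; `list_images`, `pick_image` and the extension list are hypothetical.

```python
from pathlib import Path

# Hypothetical extension filter; the real loader node may accept other formats.
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def list_images(folder: str) -> list[Path]:
    """Return the images in `folder`, sorted by name so the order is stable."""
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )


def pick_image(images: list[Path], run_index: int) -> Path:
    """Pick the image for the given run, wrapping back to the first image at the end."""
    if not images:
        raise ValueError("no images found in the folder path")
    return images[run_index % len(images)]
```

Each generation would then call `pick_image(list_images(folder), run)` with an increasing run counter.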

Step 2: Preparation - Set the parameters of the video

Here you set the batch size and the total step count (each index counts as 1 step: with a step count of 3 you use 3 indices, from index 0 to index 2).

The image is resized based on its largest side (I don't use the same image resolution every time, so this is meant as automation; if you want more control, feel free to change the node).
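A minimal sketch of "resize on the largest side", assuming the longest side is scaled to a target length while keeping the aspect ratio, and each side is rounded to a multiple of 16 (a common choice for Wan resolutions). The target of 832 and the multiple of 16 are illustrative assumptions, not values read from the workflow's resize node.

```python
def fit_longest_side(width: int, height: int, target: int = 832,
                     multiple: int = 16) -> tuple[int, int]:
    """Scale (width, height) so the longest side is about `target`, keeping the
    aspect ratio, then round each side to the nearest multiple of `multiple`."""
    scale = target / max(width, height)

    def snap(side: int) -> int:
        # Never return less than one `multiple`, even for extreme aspect ratios.
        return max(multiple, round(side * scale / multiple) * multiple)

    return snap(width), snap(height)
```

For example, a 1920×1080 image becomes 832×464 and a portrait 1080×1920 image becomes 464×832.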

Choose the duration of each index individually with the sliders. The sliders use the same frame values as WanImageToVideo (increasing in steps of 4). You can replace them with another INT value node, but it must be connected to the Any Switch Index node hidden behind the negative prompt box.
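A small sketch of how the step count and the per-index sliders fit together, assuming (as the steps of 4 suggest) that valid WanImageToVideo lengths have the form 4k + 1, like the default 81. `snap_wan_length` and `index_lengths` are hypothetical helpers that mimic selecting one slider value per index; they are not the workflow's nodes.

```python
def snap_wan_length(frames: int) -> int:
    """Round `frames` to the nearest length of the form 4k + 1 (minimum 1)."""
    k = max(0, round((frames - 1) / 4))
    return 4 * k + 1


def index_lengths(slider_values: list[int], step_count: int) -> list[int]:
    """Return the frame length used by indices 0 .. step_count - 1,
    taking one slider value per index, like a switch-by-index node."""
    if step_count > len(slider_values):
        raise ValueError("step count is larger than the number of configured indices")
    return [snap_wan_length(v) for v in slider_values[:step_count]]
```

With 5 sliders set to 81, 49, 33, 81, 65 and a step count of 3, only indices 0 to 2 are used: 81, 49 and 33 frames.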

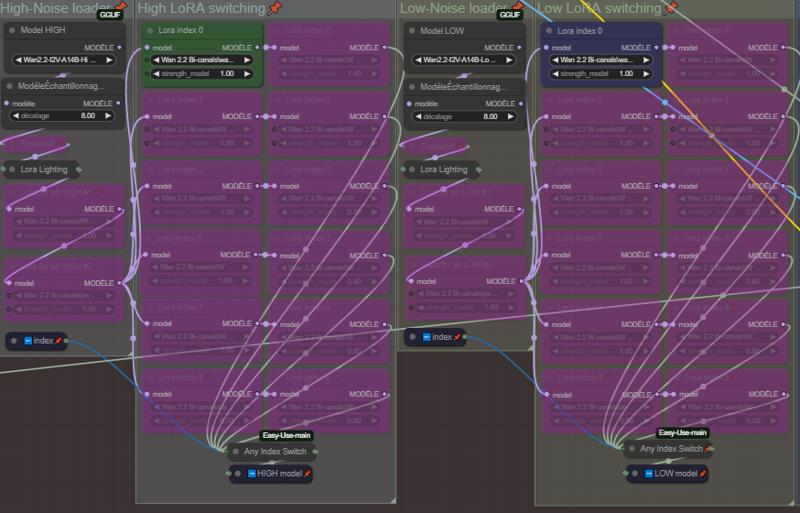

Step 3: Loaders - Set your models and LoRAs

Choose your GGUF models for High Noise and Low Noise, your Lightning LoRAs and your FusionX LoRAs (the Lightning and FusionX LoRAs are not mandatory; if you don't use them, just deactivate the nodes).

The LoRAs in the High-Noise loader and Low-Noise loader groups are general LoRAs applied to every index.

Choose the LoRAs for each index. This part is set up for 5 indices (starting from index 0); feel free to add or remove some. If you add more, connect them to the AnySwitchIndex node under each column.

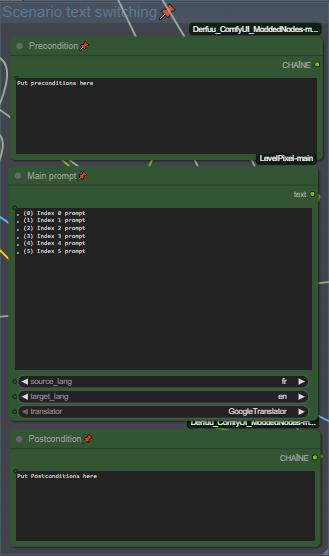

Step 4: Prompts - Set your scenario

🚨🚨🚨🚨 The prompt has an integrated translator if you're not comfortable with English 🚨🚨🚨🚨

Set your Pre and Post conditions, then write the prompt for each index of your scenario. Indices are separated in the prompt by line breaks; I add ", (0)", ", (1)" markers to get a better overview of my scenarios (there are many other ways to do it; feel free to change the separation mode if needed, it's hidden behind the group nodes).
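The line-break separation can be sketched like this, assuming each non-empty line is one index, the ", (n)" marker sits at the end of the line and is only there for readability, and the Pre and Post conditions are joined around each line with ", ". The joining rule and `build_index_prompts` are hypothetical; the translation step is not modeled.

```python
import re

# Assumed convention: an optional trailing index marker such as ", (0)" or ", (12)".
MARKER = re.compile(r",\s*\(\d+\)\s*$")


def build_index_prompts(pre: str, scenario: str, post: str) -> list[str]:
    """Split the scenario on line breaks (one line per index) and wrap each
    line with the Pre and Post conditions."""
    prompts = []
    for line in scenario.splitlines():
        line = line.strip()
        if not line:
            continue  # ignore blank lines between indices
        body = MARKER.sub("", line)  # drop the ", (n)" readability marker
        parts = [p for p in (pre.strip(), body, post.strip()) if p]
        prompts.append(", ".join(parts))
    return prompts
```

Index n of the generation would then receive `prompts[n]`.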

🚨🚨 Don't forget to set the source and target language for translation 🚨🚨

Step 5: FaceSwap - Set the parameters for Face Swapping

Many Wan2.2 LoRAs are known to cause face drift, so I added ReActor Faceswap to compensate for it. The current settings work well, but feel free to change them and experiment (ReActor is available in the ComfyUI Manager, and its models are downloaded automatically).

Choose the source image for the swap:

0 = Load a source image

1 = Use start image as source

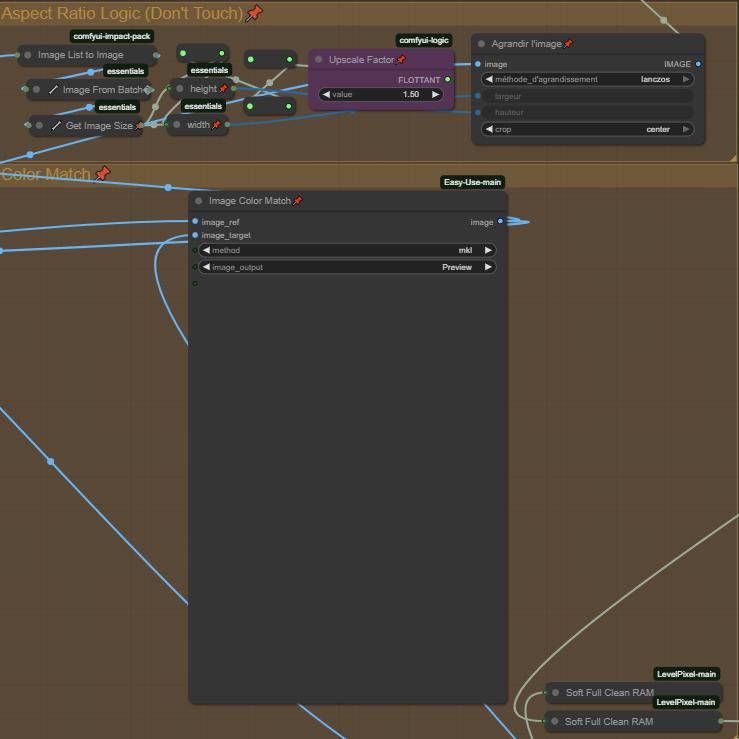

Step 6: Aspect Ratio Logic + Color Match

Aspect Ratio Logic applies a simple zoom to slightly improve the video; change the Upscale Factor to adjust the zoom.

Image Color Match keeps the video's colors matched to the source image. It can't be disabled: if you bypass it, it sends nothing to the next node. If you run into color problems, you can change the color "Method" or delete the node. This will be fixed in a future version.

(Important)

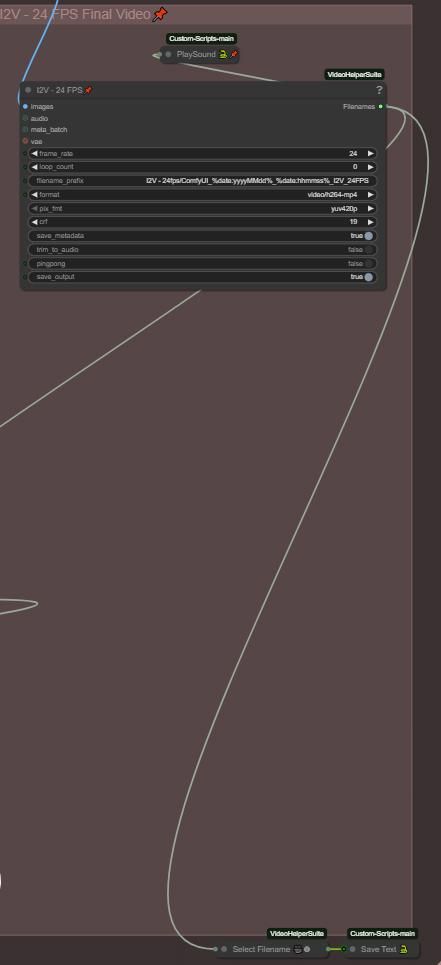

Step 7: Final video and chain function

The file path of every final video is saved in a text file (Save Text node). This is what chains the generations: once you're done with I2V, the next group loads the last generated video at start, using the filename saved in the text file (Load Text node). If you change the text file name, make sure it matches the file name in all the "Save Text" and "Load Text" nodes!
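The chaining idea boils down to a shared text file holding the newest video path. A minimal sketch, assuming the file is overwritten with a single path on each save; `save_last_video` and `load_last_video` are hypothetical stand-ins for the Save Text and Load Text nodes, not their real implementation.

```python
from pathlib import Path


def save_last_video(path_file: Path, video_path: str) -> None:
    """Overwrite the text file with the path of the newest video (Save Text role)."""
    path_file.write_text(video_path.strip() + "\n", encoding="utf-8")


def load_last_video(path_file: Path) -> str:
    """Read back the path saved by the previous group (Load Text role)."""
    return path_file.read_text(encoding="utf-8").strip()
```

Because both sides read and write the same `path_file`, renaming it in only one place breaks the chain, which is why the file names must match.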

The final video is set to 24 fps for better compatibility with MMaudio (MMaudio is trained at 24 fps). If you don't intend to generate audio for your video, you can bring it down to 16 fps.

You also have "Playsound" nodes that notify you when the workflow has finished generating. They're not mandatory; you can delete them if you don't want them.

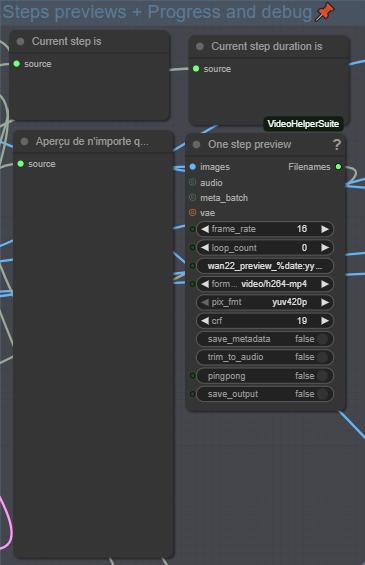

Step 8: Process monitoring and debugging

This part shows the index currently being processed, its duration in total frames, its prompt, and a preview of each index. This lets you monitor your generation and make the necessary adjustments.

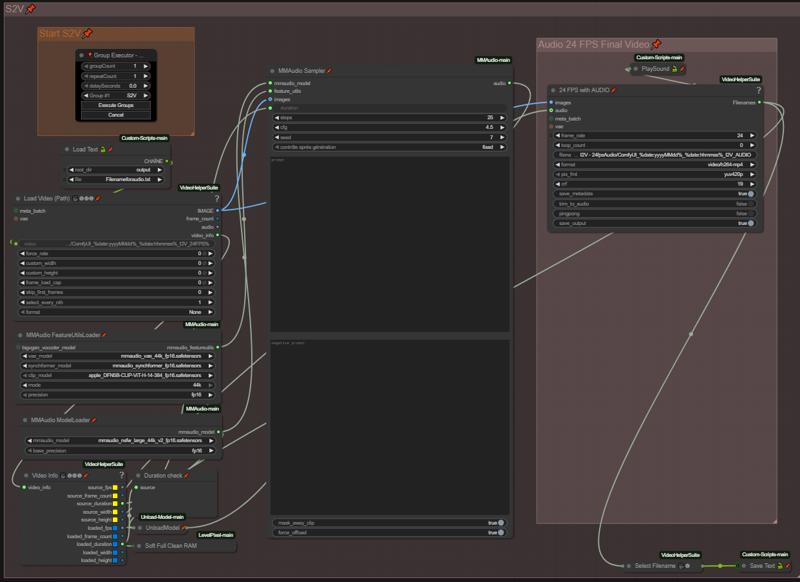

S2V

Here you write the prompt for your audio. I recommend using NSFW MMaudio, which gives much better results than the base model. Everything is automated: once your positive and negative prompts are done, just run the group. It automatically loads the last video generated by I2V through the "Load Text" node and adds sound to it.

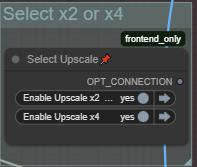

Upscalers x2-x4

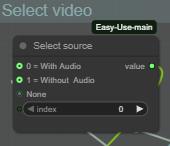

Step 1: Choose your source video (With or without audio)

Here you just choose whether you are upscaling the video with audio from the MMaudio group or the video without audio from the I2V group.

You also choose which upscale group runs.

NOTE:

The x2 Upscaler is much slower than the x4 because it doesn't run on TensorRT. If anyone has a better solution for a fast x2 upscale, I'm open to suggestions.

The x4 Upscaler uses TensorRT; if you already have upscale models in .\models\upscale_models, they are imported automatically. This only works with x4 upscalers: I tried to import and convert x2 models, but it didn't work.

Then don't forget to change the parameters of the Upscalers if needed and run the group with the "GroupExecutor" node.

Frame Interpolation (Go to 72 FPS)

Same logic as the previous group.

Step 1: Choose your source video (with or without upscale or audio)

This depends on which group you ran last:

0 = Without upscale (loads the 24 fps video with audio from the MMaudio group)

1 = With upscale (loads the upscaled video)

2 = No audio and no upscale (loads the video from the I2V group)

Step 2: Adjustments

The final video is set to 72 fps only to keep the sound synchronized if you used the Audio group. If you don't use the Audio group, you can bring it down to 60 fps if needed.

As with the previous group, don't forget to change the interpolator parameters if needed.
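A hedged arithmetic sketch of why 72 fps pairs well with the 24 fps audio video: 72 / 24 = 3 exactly, so with an integer frame multiplier each source frame maps to three output frames and the clip keeps its duration, while 60 / 24 = 2.5 is not a whole factor. This assumes the interpolator works with an integer multiplier; `interpolation_multiplier` and `is_whole_multiple` are hypothetical helpers, not part of the RIFE VFI node.

```python
from fractions import Fraction


def is_whole_multiple(source_fps: int, target_fps: int) -> bool:
    """True if target_fps is an exact integer multiple of source_fps."""
    return Fraction(target_fps, source_fps).denominator == 1


def interpolation_multiplier(source_fps: int, target_fps: int) -> int:
    """Integer frame multiplier needed to go from source_fps to target_fps."""
    if not is_whole_multiple(source_fps, target_fps):
        raise ValueError(f"{target_fps} fps is not a whole multiple of {source_fps} fps")
    return Fraction(target_fps, source_fps).numerator
```

So 24 to 72 fps is a x3 multiplier, while 24 to 60 fps cannot be reached with a whole multiplier.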

Resources

The custom nodes should all be found by the ComfyUI Manager; if any are missing, please let me know.

I run this workflow on an RTX 5080 (16 GB VRAM) with 64 GB of RAM.

GGUF models and VAE:

https://huggingface.co/QuantStack/Wan2.2-T2V-A14B-GGUF/tree/main

NSFW ClipVision:

https://huggingface.co/ricecake/wan21NSFWClipVisionH_v10/tree/main

Umt5 xxl encoder GGUF:

https://huggingface.co/city96/umt5-xxl-encoder-gguf/tree/main

NSFW MMaudio:

https://huggingface.co/phazei/NSFW_MMaudio (download and rename it to "mmaudio_nsfw_large_44k_v2_fp16.safetensors")

Lightx2v LoRAs:

https://huggingface.co/lightx2v/Wan2.2-Lightning/tree/main/Wan2.2-I2V-A14B-4steps-lora-rank64-Seko-V1

https://huggingface.co/lightx2v/Wan2.2-Lightning/tree/main/Wan2.2-T2V-A14B-4steps-lora-250928

FusionX LoRA:

https://huggingface.co/vrgamedevgirl84/Wan14BT2VFusioniX/tree/main/FusionX_LoRa

This workflow is constantly being optimized; expect a V2 at some point. I hope I didn't forget anything; otherwise I'll add it. Feel free to ask questions if needed (use the Discord link on my profile, it's easier for everyone).

_Thank you for using this ComfyUI workflow. Please use it responsibly and respectfully._

_This workflow was created for creative, technical, and personal experimentation, not for harm, harassment, or any form of abuse._