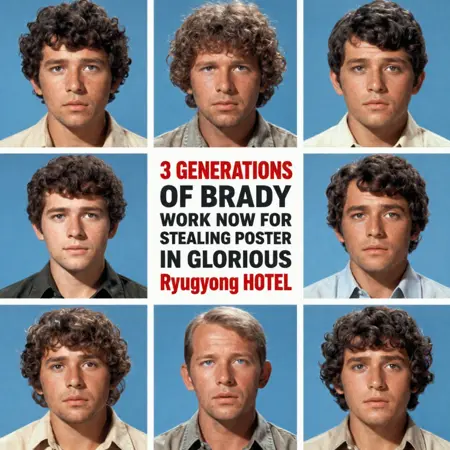

Very Brady Style - location fun and ZiT AI Toolkit training experiment comps

Details

Download Files

Model description

Here’s the story…

…of Very Brady ZiT training experimentation. Maybe dataset abuse is a more appropriate term.

Apologies for the massive size of V1 Alice- rank plays into style. Hammering training parameters makes it even more so.

So, I started training a model of the Brady house when Z Image was released, and I starting mucking with AI Toolkit for local training around the same time. Naturally, I immediately abused some settings that shouldn’t work and/or did not really know what they were. Differential guidance is a gamechanger for me, as are massive batch sizes. These are some experiments and I am really messing with settings, so I am sharing. I always wished more did! Still haven’t gotten the damn house in full though!

Tags for all versions:

Master trigger (optional)- V3ryBr4dy_style

Generally, the master trigger loses accuracy when recreating sets, otherwise, it enhances flexibility.

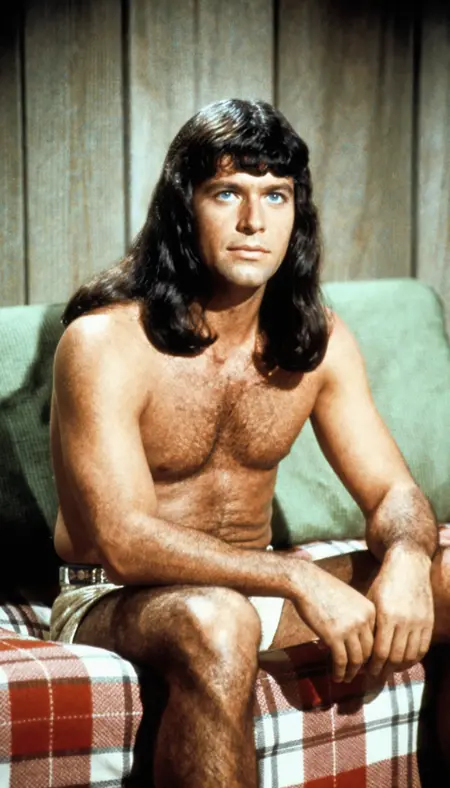

Other tags/locations to use [suggestions to recreate show settings in brackets]- kitchen [orange countertops, dark wood upper cabinets, green lower doors, steel double wall oven], den [wood panel walls, plaid futon couches with dark green back/upper pillows], living room, yard, boys bedroom [blue bunk beds, blue bedspreads, wood panel walls], girls bedroom [white twin bedframes, pink bedspreads, floral print wallpaper], master suite, staircase/floating staircase [colorful rectangular art on the wall]

Sort of works- home office, front of house

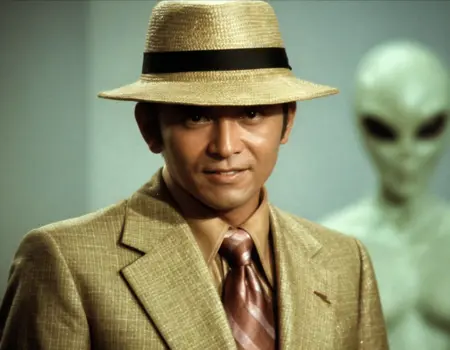

V1 Alice- The dreamiest grainy analog sitcom stills- Brady style

This is the classic look of a late 60s sitcom as it was broadcast, maybe even a little earlier in the 60s. Can be a little dark, can also be goofy slapstick stuff.

Recommended strength- start around .7-.8

Weirdness- prone to grids patterns. So much plaid in this data set!

Relevant training parameters

63 images, training resolution 512, batch size 20, steps 400, though this LoRA is the epoch at step 240. I am running on a 4090 and was able to dump almost all transformer and text encoder layers to CPU RAM to pull off the batch size. Nice!

I digress… differential guidance scale 5 (this is the key!), learning rate 0.001, optimizer- adafactor, noise- balanced, rank 128 (huge but part of the look!)