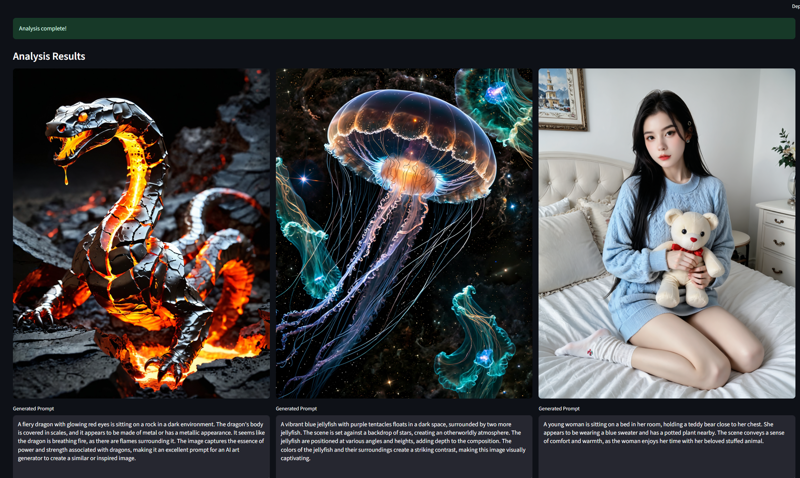

Uncensored Portable AI Prompt Assistant for Wan, SDXL, Flux.1, and more

Portable AI Prompt Assistant is a free, open-source desktop application that I built. It is a simple tool that runs entirely on your local machine, giving you a private, flexible environment that strengthens your creative workflow.

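Why did I build this tool?

Online services are great, but I wanted a tool that offers more control, privacy, and flexibility. I needed an application that could:

Run locally, without sending images to third-party servers.

Work with the powerful open-source models I already use in Ollama and LM Studio.

Compare the outputs of different models side by side.

Specialize in generating high-quality prompts for a wide variety of AI art models.

Generate simple prompts for uncensored images.

Image-to-Prompt AI Assistant is the result of that vision.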

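A powerful prompt-generation tool for any model

One of the main design goals was a tool that is not locked into a particular ecosystem. The prompts this app generates can be tailored to a wide range of text-to-image models.

With custom system prompts, you can instruct the AI to generate prompts in specific formats, such as:

SDXL & SD 1.5/2.1: Create detailed, comma-separated prompts that include keywords and negative prompts.

Stable Diffusion 3 (and Flux.1): Generate prompts that take advantage of these models' newer, more sophisticated natural-language understanding.

DALL-E 3: Create conversational, sentence-based prompts.

Midjourney: Generate artistic prompts focused on mode and composition.

Wan2.1: Generate prompts for creating video from a reference image, or optimize text for T2V (text-to-video).

Save your favorite system prompts, such as "Create a cinematic SDXL prompt" or "Generate a simple anime-style prompt," and switch between them with one click.

(You can compare outputs and set the system prompt that best suits your target model.)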
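
As a rough illustration of how saved system prompts can steer the output format, here is a minimal Python sketch. The preset names, the prompt texts, and the `build_messages` helper are all hypothetical; the app's own presets and internals are not shown in this description.

```python
# Hypothetical presets: names and wording are illustrative, not the app's own.
SYSTEM_PROMPTS = {
    "sdxl": (
        "Describe the image as a detailed, comma-separated list of keywords. "
        "Then add a line that starts with 'Negative prompt:'."
    ),
    "flux": "Describe the image in fluent, natural-language sentences.",
    "wan_t2v": "Describe the scene and its motion as a short text-to-video prompt.",
}


def build_messages(preset: str, user_text: str) -> list[dict]:
    """Return chat messages with the chosen system prompt placed first."""
    if preset not in SYSTEM_PROMPTS:
        raise KeyError(f"unknown preset: {preset}")
    return [
        {"role": "system", "content": SYSTEM_PROMPTS[preset]},
        {"role": "user", "content": user_text},
    ]
```

Switching presets then only changes the first message, so the same image or question can be re-sent with a different target format.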

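Core features

This is more than a basic interface: it comes with a rich set of features designed for a smooth, powerful user experience.

Local-first, privacy-focused: Works with your local Ollama or LM Studio server. Your images and prompts never leave your machine.

Multi-model comparison: Select multiple vision models and get a response from each at the same time. See which AI gives the best description!

Advanced model management (Ollama): After a response, unload models from memory manually or automatically to free VRAM.

Image-only analysis: Even without a specific question, upload an image and click "Analyze" to get an instant description.

Full user control: The "Stop Generating" button lets you interrupt a long response at any time.

Conversation history: Browse the whole session and export it as a .txt or .json file for your records.

Customizable workflow: Save and reuse an unlimited number of custom system prompts to match the AI's output to your needs.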
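
For readers curious about the Ollama model-management feature listed above, the sketch below shows one way to ask an Ollama server to unload a model: a request to `/api/generate` with `keep_alive` set to 0 and no prompt. This is an assumption about how such a feature could work, not a description of the app's code; `build_unload_request` and `unload_model` are hypothetical helper names, and the URL is Ollama's default address.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default address; adjust if yours differs


def build_unload_request(model: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request asking Ollama to unload `model` immediately (keep_alive=0)."""
    body = json.dumps({"model": model, "keep_alive": 0}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def unload_model(model: str) -> None:
    """Send the unload request; needs a running Ollama server."""
    with urllib.request.urlopen(build_unload_request(model), timeout=30) as resp:
        resp.read()
```

Calling `unload_model("llava")` after a response would free the VRAM held by that model, provided the server is running at the assumed address.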

Easy setup

The application is built with Python and Streamlit, and setup is straightforward.

Prerequisites:

Windows 10 or 11.

Ollama or LM Studio (recommended), installed and running.

A vision-capable model (for example llava or Gemma 3), downloaded and loaded.

🚀 Installation steps

1. Download the compressed file and extract its contents.

2. Open the AiPromptAssistant setup file.

3. Click "Install" to start the installation.

4. Download and install LM Studio from: https://lmstudio.ai/

⚙️ How to use the app

After installation, follow these steps:

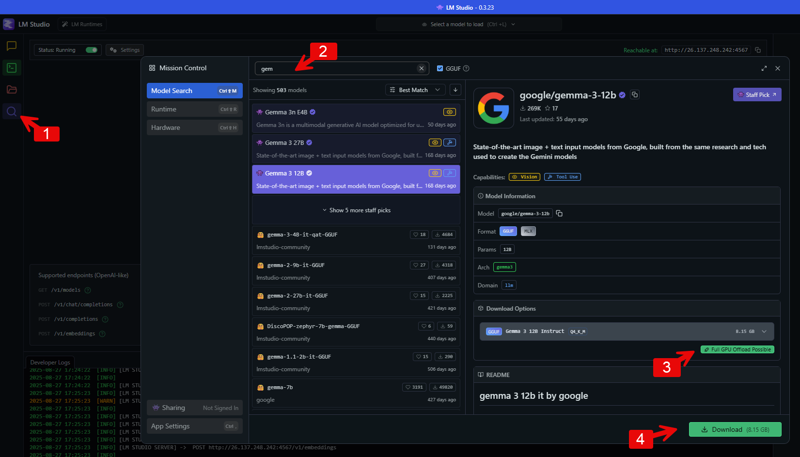

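Step 1: Download a vision-capable model in LM Studio

Go to model search: Open LM Studio and click the search icon (magnifying glass) in the left-hand menu to open the model search page.

Search for a model: Type the name of the model you want to download into the search bar at the top. To find models that can process images, type "vision". The image shows a search for Google's "gemma" model family as an example. The results list several versions.

Select and download a model: Look for a model marked as vision-capable with the "Vision" tag and click it in the search results. The model's details appear on the right. Choose a suitable version under "Download Options", then click the "Download" button to save it to your PC.

Uncensored models I use: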

https://model.lmstudio.ai/download/concedo/llama-joycaption-beta-one-hf-llava-mmproj-gguf

https://model.lmstudio.ai/download/bartowski/mlabonne_gemma-3-27b-it-abliterated-GGUF

https://model.lmstudio.ai/download/mlabonne/gemma-3-12b-it-abliterated-GGUF

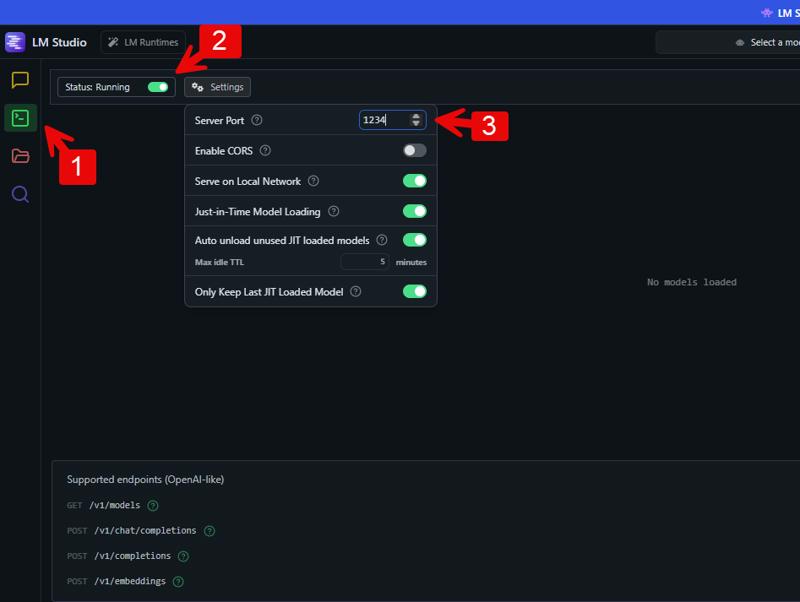

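Step 2: Enable the API server in LM Studio

Enable the Power User or Developer view: To reach the local server settings, first switch the view mode. Click "Power User" or "Developer" at the very bottom of the LM Studio app.

Open the Local Server tab: In LM Studio's left-hand menu, click the <_> icon to open the local server settings.

Start the server: At the top of the screen there is a "Status" toggle. Switch it from "Stopped" to "Running" to start the local API server.

Set the server port: In the server settings you can specify the API port number. The example uses port "1234". Use this default or change it to any other free port.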
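
To confirm the server is actually running on the chosen port, a quick check from Python can help. The sketch below assumes LM Studio's OpenAI-compatible `/v1/models` endpoint and its usual response shape (a `data` list of entries with an `id`); the helper names are hypothetical.

```python
import json
import urllib.request


def models_url(port: int = 1234, host: str = "localhost") -> str:
    """URL of the OpenAI-compatible model listing on the local server."""
    return f"http://{host}:{port}/v1/models"


def parse_model_ids(response_text: str) -> list[str]:
    """Extract model identifiers from a /v1/models JSON response."""
    payload = json.loads(response_text)
    return [entry["id"] for entry in payload.get("data", [])]


def list_models(port: int = 1234) -> list[str]:
    """Query the running server; raises URLError if nothing listens on the port."""
    with urllib.request.urlopen(models_url(port), timeout=5) as resp:
        return parse_model_ids(resp.read().decode("utf-8"))
```

If `list_models()` raises a connection error, the server is most likely still set to "Stopped" or is listening on a different port.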

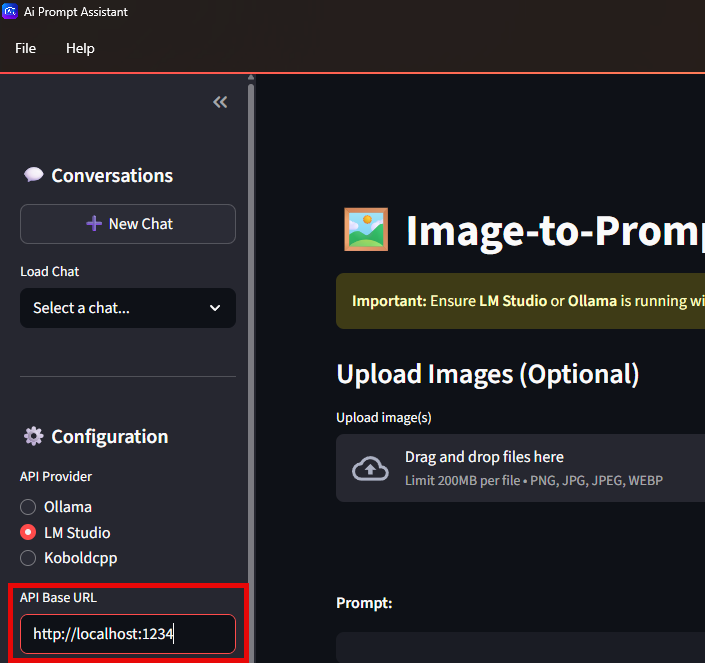

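Step 3: Connect the application to the LM Studio API

Open AI Prompt Assistant.

Set the API endpoint: In the application's settings, find the "Configuration" section and enter the API Base URL. This URL must point to your local machine (localhost) and the port you set in LM Studio.

Enter the API Base URL: In the "API Base URL" field, type http://localhost: followed by the port number you set in LM Studio. For example, for port 1234, enter http://localhost:1234. This tells the app where to reach the models running in LM Studio.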
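
To make the role of the API Base URL more concrete, here is a minimal sketch of sending an image to a vision model through LM Studio's OpenAI-compatible chat endpoint. It assumes the `/v1/chat/completions` path and the OpenAI-style `image_url` message format with a base64 data URI; the function names and the default question are hypothetical, and the app itself may build its requests differently.

```python
import base64
import json
import urllib.request


def chat_url(base_url: str) -> str:
    """Append the chat-completions path to the API Base URL."""
    return base_url.rstrip("/") + "/v1/chat/completions"


def build_vision_payload(model: str, image_bytes: bytes, question: str,
                         mime: str = "image/png") -> dict:
    """Build a chat request that carries the image inline as a data URI."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url",
                 "image_url": {"url": f"data:{mime};base64,{encoded}"}},
            ],
        }],
    }


def describe_image(base_url: str, model: str, image_path: str,
                   question: str = "Describe this image as a prompt.") -> str:
    """Send the image to the local server and return the model's reply text."""
    with open(image_path, "rb") as f:
        payload = build_vision_payload(model, f.read(), question)
    req = urllib.request.Request(
        chat_url(base_url),
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

With the server from Step 2 running, `describe_image("http://localhost:1234", "<model id>", "photo.png")` would return the generated description; the image is only sent to localhost.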

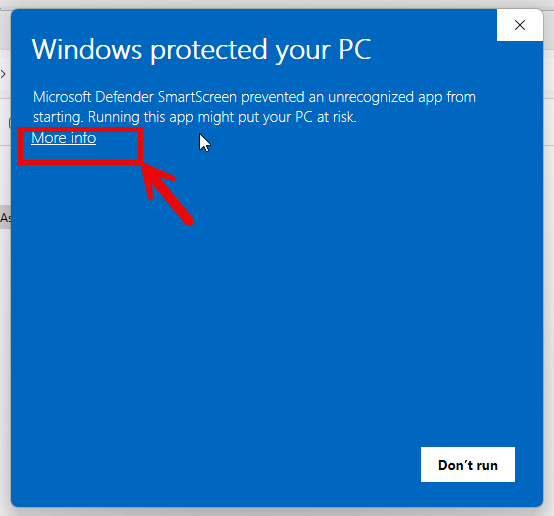

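⚠️ Important notice

When you download and install the program, you may see several security warnings. This happens because the package contains an .exe file, and it is common for apps downloaded from outside official app stores or from lesser-known developers.

So there is no need to worry; the warnings do not mean the program contains a virus. As with any file downloaded from the internet, I recommend scanning it with a trusted antivirus before installing. After that, you can ignore the warnings.

If you are uncomfortable installing an .exe app, use the open-source Python version instead. Note, however, that it requires extra setup steps and lacks some of the additional features. You can access it here:

👉 Open-source Python version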

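Changelog

V1.3: Added video upload and analysis for Google's Gemini models via Files API integration.

V1.2: Added support for Google's Gemini Flash models (1.5, 2.0, 2.5).

V1.1: Added a "Bulk Analysis" tab that analyzes every image in a selected folder in one pass. Enter a folder path, and the generated prompts can be saved as text files in the same directory.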
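
The Bulk Analysis behavior from V1.1 (one prompt text file per image, saved in the same folder) can be pictured with the sketch below. It is an illustration under assumptions, not the app's implementation: `bulk_analyze`, the `describe` callback, and the set of image extensions are hypothetical.

```python
from pathlib import Path
from typing import Callable

# Assumed set of image extensions; the app's actual list is not documented here.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def bulk_analyze(folder: str, describe: Callable[[Path], str]) -> list[Path]:
    """Write one .txt prompt next to each image in `folder`; return the files written."""
    written = []
    # sorted() snapshots the listing first, so files created below are not revisited.
    for image in sorted(Path(folder).iterdir()):
        if image.is_file() and image.suffix.lower() in IMAGE_SUFFIXES:
            out = image.with_suffix(".txt")
            out.write_text(describe(image), encoding="utf-8")
            written.append(out)
    return written
```

Here `describe` stands in for whatever call produces the prompt, for example a function that sends the image to a local vision model.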