Wan2.2-Animate_FreeLength-DEEPFAKER

Model description

Overview

In my opinion, Wan2.2 Animate is the best video-to-video (V2V) model available right now.

Even on my mid-range setup (RTX 5060 Ti 16GB VRAM, 64GB RAM), I was able to create long, high-quality videos, so I definitely want to share the workflow.

2025.9.26 Addendum

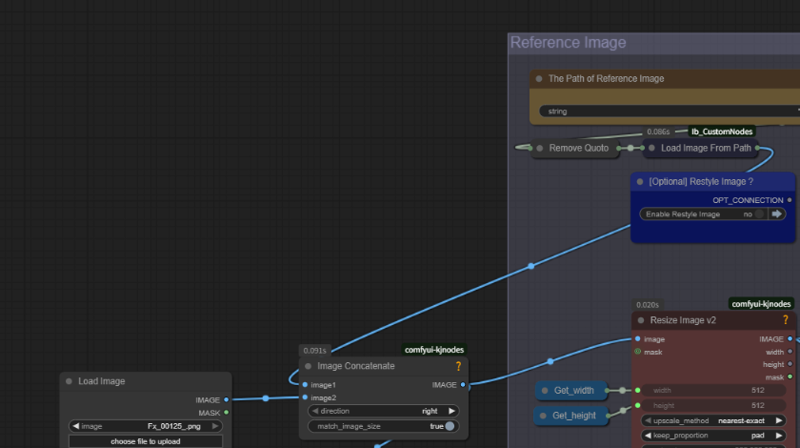

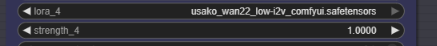

The reference image appears to work even when it is composited from two images. If you also need a back view of the character, combine the two images as shown in the diagrams above.

Usage:

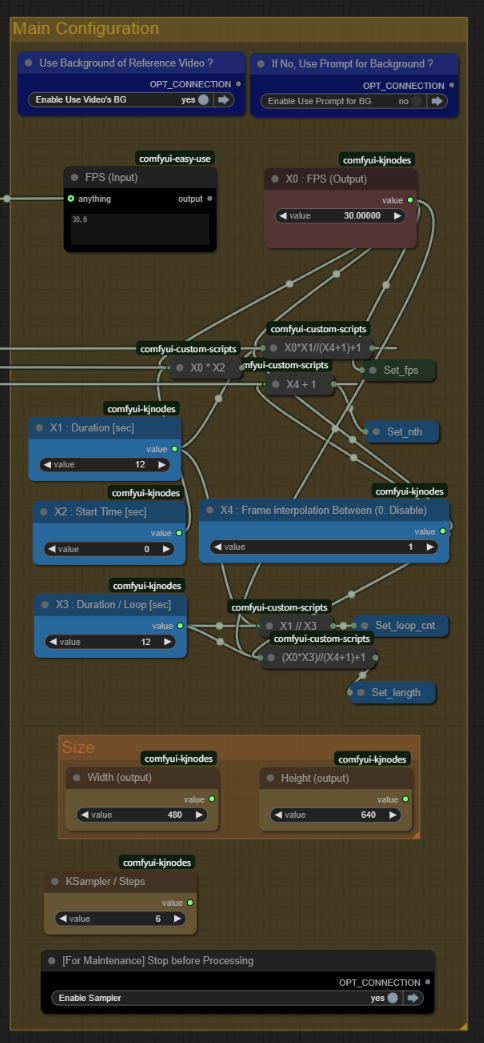

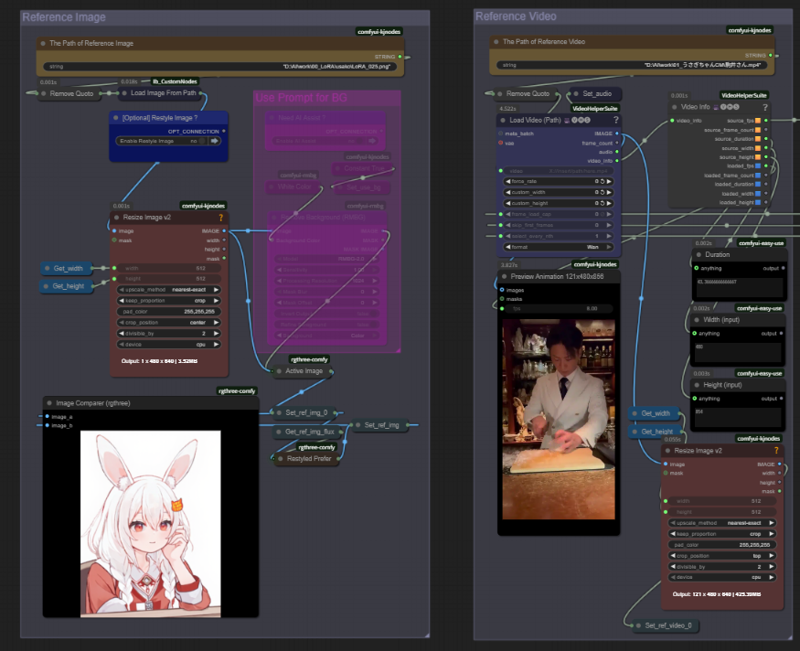

As with my previous works, you only need to be mindful of the parameters in “Main Configuration”.

1. The blue section configures the background: choose whether to keep the input video's background or generate a new one from the prompt.

2. The light blue section sets the output video's timing: length, start time in seconds, and processing interval in seconds. From these values, and assuming the output uses the same fps as the input video, the workflow automatically determines the frame count and the number of loops.

3. There are two ways to create long videos (e.g., 10 seconds):

- Set X1=X3=10 and complete it in one loop, or

- Set X1=10, X3=2 and complete it in five loops.

Depending on the resolution, splitting into multiple loops is recommended to avoid OOM (out-of-memory) errors. As a test, I created a 60-second video at 480×832. It did not run out of memory, but the colors became oversaturated toward the end.

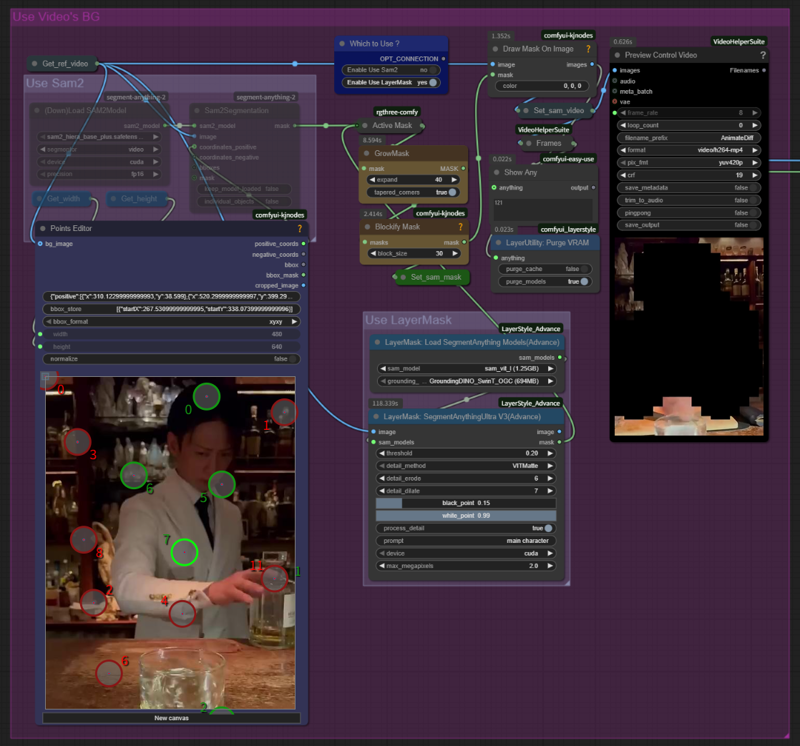
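The light blue settings and the two 10-second examples come down to simple arithmetic. Below is a minimal Python sketch of that arithmetic, not the workflow's actual node logic: `plan_loops` is a hypothetical helper, and I assume that length maps to X1, the start time to X2, the processing interval to X3, and that a partial final loop is still counted as a loop.

```python
import math


def plan_loops(length_s: float, start_s: float, interval_s: float, fps: float) -> dict:
    """Hypothetical helper: derive frame counts and the loop count from the
    light blue settings (length = X1, start time = X2 (assumed), interval = X3)."""
    if length_s <= 0 or interval_s <= 0 or fps <= 0:
        raise ValueError("length, interval and fps must be positive")
    total_frames = round(length_s * fps)                # frames in the finished video
    frames_per_loop = max(1, round(interval_s * fps))   # frames processed per loop
    start_frame = round(start_s * fps)                  # first input frame to use
    # Assumption: a partial final loop still counts as a full loop.
    loops = math.ceil(total_frames / frames_per_loop)
    return {
        "start_frame": start_frame,
        "total_frames": total_frames,
        "frames_per_loop": frames_per_loop,
        "loops": loops,
    }


# The two ways to make a 10-second clip, assuming a 30 fps input video.
print(plan_loops(10, 0, 10, 30))  # single loop
print(plan_loops(10, 0, 2, 30))   # five loops
```

With a 30 fps input, `plan_loops(10, 0, 10, 30)` gives one loop of 300 frames, while `plan_loops(10, 0, 2, 30)` gives five loops of 60 frames each. The second option processes fewer frames at a time, which is why splitting helps against OOM.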

4. When keeping the video's background, choose one of the two masking methods (SAM2 or LayerMask). LayerMask gives better results but takes slightly longer. For single-loop runs, I recommend SAM2 (with the Points Editor).

5. Next, decide on the output size. 1280×720 gives high quality, but it has roughly 2.3 times as many pixels per frame as 832×480, so it may cause OOM depending on your other settings. Even at the lower 832×480, you may still get satisfactory results; in my case, applying my custom character LoRA (Wan2.2 i2v fp16) gave excellent results.

6. When setting the size, you can also turn a vertical (portrait) video into a horizontal (landscape) output. In that case, remember to adjust the related settings, for example by using the Resize node with padding so the image is not distorted.

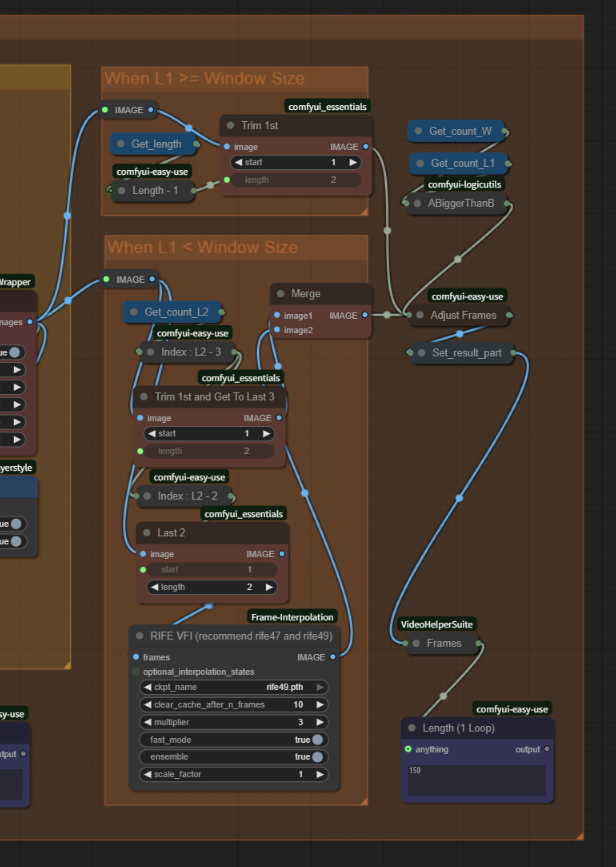
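Resizing with padding in step 6 is essentially letterboxing (here, pillarboxing): scale the frame uniformly until it fits the target size, then fill the rest with padding. The sketch below only computes the numbers; `fit_with_padding` is a hypothetical helper, and the Resize node's own rounding and padding placement may differ.

```python
def fit_with_padding(src_w: int, src_h: int, dst_w: int, dst_h: int):
    """Hypothetical helper: scale a frame uniformly to fit inside the target
    size and return (new_w, new_h, pad_left, pad_top, pad_right, pad_bottom)."""
    scale = min(dst_w / src_w, dst_h / src_h)   # largest uniform scale that still fits
    new_w = min(dst_w, round(src_w * scale))
    new_h = min(dst_h, round(src_h * scale))
    pad_left = (dst_w - new_w) // 2             # center horizontally
    pad_top = (dst_h - new_h) // 2              # center vertically
    pad_right = dst_w - new_w - pad_left        # any odd pixel goes to the right/bottom
    pad_bottom = dst_h - new_h - pad_top
    return new_w, new_h, pad_left, pad_top, pad_right, pad_bottom


# A 720x1280 portrait clip placed in an 832x480 landscape output.
print(fit_with_padding(720, 1280, 832, 480))
```

For a 720×1280 portrait clip and an 832×480 target, this returns `(270, 480, 281, 0, 281, 0)`: the subject keeps its proportions at 270×480 with 281 px of padding on each side. Stretching the clip straight to 832×480 would distort it instead.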

7. Regardless of your settings, Animate's specification generates frames in multiples of the window size (WindowSize × N). Left untrimmed, the extra frames would make the animation play incorrectly when multiple loops are run, so I added processing that trims the surplus frames after they pass through the Sampler.
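The trimming idea in step 7 can be illustrated with plain lists standing in for frame batches. This is a sketch under assumptions, not the workflow's implementation: the helper names are hypothetical, N is taken as the smallest number of windows that covers the requested frames, and the surplus is assumed to be dropped from the end of each loop's output before the loops are joined.

```python
import math
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")


def padded_length(needed: int, window_size: int) -> int:
    """Frames produced if the output is always WindowSize x N, with N the
    smallest whole number of windows that covers `needed` (assumption)."""
    return window_size * math.ceil(needed / window_size)


def stitch_loops(chunks: Sequence[Sequence[Frame]], frames_per_loop: int) -> List[Frame]:
    """Trim each loop's output to frames_per_loop, then join the loops in order."""
    out: List[Frame] = []
    for chunk in chunks:
        if len(chunk) < frames_per_loop:
            raise ValueError("a loop produced fewer frames than requested")
        out.extend(chunk[:frames_per_loop])  # keep the leading frames, drop the surplus
    return out


# Hypothetical numbers: window size 77, 60 frames wanted per loop, 5 loops.
per_loop = padded_length(60, 77)
chunks = [[f"loop{i}_frame{j}" for j in range(per_loop)] for i in range(5)]
video = stitch_loops(chunks, 60)
print(per_loop, len(video))
```

With a hypothetical window size of 77 and 60 frames per loop, each loop would produce 77 frames, so 17 frames per loop have to be discarded. Left in place, five loops would accumulate 85 extra frames and push the later loops out of sync with the source video.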

That's all for the explanation. I must say, I've created something truly remarkable.

Just between us, I tried making an NSF* during development and ended up getting sidetracked (lol)

Thanks.